How to execute an API fetch inside a public web page

When I use:

var aResult = await app.scrapeUrl( aURL, { ... } )

I get a nice table of many rows. The web page has a form for filtering rows. The form's key-value pairs get sent to the web server via an API call. In DevTools, I see

this.ajax( aURL, "GET", { data: { key1: value, key2: value, ... } } )

How can I use Firecrawl to submit this API call from inside the page and return a subset of the rows? I believe it is failing because the old jQuery ajax GET method is reusing credentials created when the initial page is loaded. Should I be using executeJavascript, or is there a simpler way? I'm new to Firecrawl, but it looks like an impressive tool.

6 Replies

@Robin Mattern I think the simplest way will be to use Actions to interact with the form's key-value pairs, instead of executing arbitrary JavaScript: https://docs.firecrawl.dev/advanced-scraping-guide#actions-actions. Maybe you can also share the example you're using, and we can help check further.

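As a rough illustration of the Actions suggestion above, here is a minimal sketch of an actions list that focuses a text filter, types into it, and submits the form. The selector "#keyword-field" and the text "bridge" are hypothetical placeholders, not taken from the real page, and the wait times are guesses. The block only builds the list; the scrapeUrl call is shown as a comment and is not executed.

```javascript
// Build a Firecrawl actions list that fills in one text filter and submits the form.
// Every selector and value passed in is a hypothetical placeholder.
function buildFilterActions(inputSelector, text, submitSelector) {
  return [
    { type: "wait", milliseconds: 1000 },        // let the page finish loading
    { type: "click", selector: inputSelector },  // focus the filter field
    { type: "write", text: text },               // type into the focused field
    { type: "click", selector: submitSelector }, // submit the form
    { type: "wait", milliseconds: 2000 },        // give the filtered rows time to render
  ];
}

const actions = buildFilterActions("#keyword-field", "bridge", ".search-btn");
console.log(JSON.stringify(actions, null, 2));

// Passed to the SDK roughly as (not executed here):
//   const aResult = await app.scrapeUrl(aURL, { formats: ["html"], actions });
```

A select field may not respond to the write action in the same way as a text input, so this sketch covers only the text-input case.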

Thanks for the reply. I have tried to use the actions. I'll send along some tests that I have tried later today. Thanks.

Here is a what I have come up with to click on a button

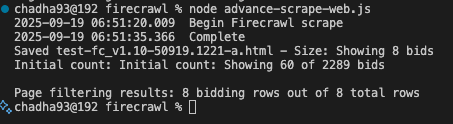

Here is output from the script: If the script returns HTML "Showing 8 of 2289 bids" then we'll know that the "StageId" filter is working.

If there is no simpler

.search-btn that submits the form after setting the select field stageId-field to 3.. The attached script is over-kill but it proves that the field value has changed. But clicking on the button with the click action or the executeJavascript action, isn't working. Perhaps you can give me a simpler pTest1 object that works?Here is output from the script: If the script returns HTML "Showing 8 of 2289 bids" then we'll know that the "StageId" filter is working.

If there is no simpler

pTest1 object that works, then I suspect that the API call

this.ajax( aURL, "GET", { data: { key1: value, key2: value, ... } )

being performed by the a script needs to be authenticated. And I would have no idea how to accomplish that.@Robin Mattern thanks for sharing the script, I see the issue the website is changing it's its display format from "X of Y total" to "X bids" when filters are active.

here's the updated script, where the filter is working and shows "8 bids" instead of "2289 bids" initially.

the filter is working fine, but given that the site is changing its format, the bids won't show all 2289 at once after filtering. refer to the initial count.

That's amazing. The real key is these two lines


field.dispatchEvent( new Event('change', { bubbles: true } ) );

field.dispatchEvent( new Event('input', { bubbles: true } ) );

This enabled the new value to be used for the click action. Can you explain why this is necessary? Is it the accepted practice for making a changed value in a form usable by a subsequent click action? I see that there is a write action for <input> fields; are these dispatchEvent calls also needed for it? And simplifying the "Capture initial total before filtering" was also nice. What will it take for me to get as good at Firecrawl as you? There isn't much documentation of more advanced techniques and I've never used Puppeteer. Is that knowledge a prerequisite, or is Firecrawl pretty different?

@Robin Mattern great to hear this. appreciate the feedback.

the reason for using dispatchEvent is that all modern browsers and web apps work on event-driven programming. I won't go deeper into the technicals, but basically when you type in a field, the browser automatically fires events like input and change to update the internal state.

and previously when you were using field.value=3 you were only changing the DOM, but no events were fired.
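To make that difference concrete, here is a small sketch that runs outside a browser. It assumes a plain EventTarget as a stand-in for the page's select element, and the listener is a hypothetical stand-in for whatever handler the site uses to read the field. It shows that assigning the value alone triggers nothing, while dispatching the event does.

```javascript
// A plain EventTarget stands in for the page's <select> element (an assumption for
// running outside a browser; EventTarget and Event are globals in Node.js 15+).
const field = new EventTarget();
field.value = "0";

let changeCount = 0;
let seenValue = null;
// Hypothetical stand-in for the site's own handler that copies the field
// value into its filter state.
field.addEventListener("change", () => {
  changeCount += 1;
  seenValue = field.value;
});

// Assigning the value alone fires no event, so the handler never runs.
field.value = "3";
const countAfterAssignment = changeCount;

// Dispatching the event is what notifies the listener.
field.dispatchEvent(new Event("change", { bubbles: true }));

console.log(countAfterAssignment, changeCount, seenValue); // prints: 0 1 3
```

On a real page the same two steps would be applied to the actual form element, and the site's handler would then pick up the new value before the click.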

we'll make sure to update the docs for more complex use cases like yours. also, you can always leverage AI to update these action events; it works pretty well, but sometimes requires manual changes, as in the above case.

the method of querying will be similar in Firecrawl and Puppeteer, except that in Puppeteer you'll have to add and maintain the args (arguments) separately, which is already managed by Firecrawl infra within .scrapeUrl