How can I make sure that my self-hosted Firecrawl can handle a large number of requests?

If there are a lot of requests, how should I handle them?


You can simply increase the limits and configure them in docker-compose.yaml. For example, if you want to increase them for the API worker:

Add this, and make sure your system (the Docker instance) has enough memory to run a bigger instance.

Oh, so it's mostly about what I configure on the Docker server to handle concurrency, right, sir @Gaurav Chadha?

Do I need to increase the limits?

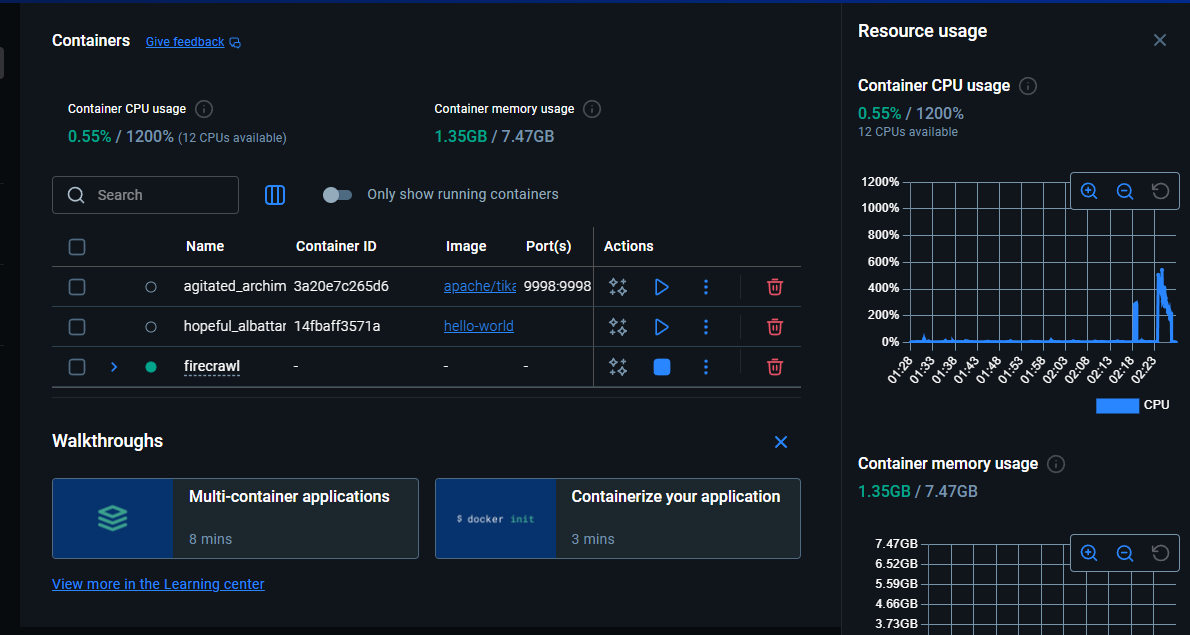

Yes, but only if you want to scale. First, check your current Firecrawl container usage.
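One way to check that usage is `docker stats --no-stream`, which prints a single snapshot of CPU and memory for each running container. The sketch below wraps it in Python so the numbers can be logged or compared against a threshold. It assumes Docker is on the PATH and that your Firecrawl containers have "firecrawl" in their names. Compose derives container names from the project (usually the directory) name, so adjust `name_filter` if yours differ. `parse_stats_line` and `container_stats` are hypothetical helper names used for illustration.

```python
import json
import subprocess


def parse_stats_line(line: str) -> dict:
    """Parse one JSON line printed by `docker stats --format '{{json .}}'`."""
    row = json.loads(line)
    return {
        "name": row["Name"],
        # Docker reports percentages as strings such as "12.34%".
        "cpu_pct": float(row["CPUPerc"].rstrip("%")),
        "mem_pct": float(row["MemPerc"].rstrip("%")),
        "mem_usage": row["MemUsage"],  # e.g. "250MiB / 2GiB"
    }


def container_stats(name_filter: str = "firecrawl") -> list[dict]:
    """Take one `docker stats` snapshot and keep only containers whose name
    contains `name_filter` (assumed naming; change it to match your setup)."""
    out = subprocess.run(
        ["docker", "stats", "--no-stream", "--format", "{{json .}}"],
        capture_output=True,
        text=True,
        check=True,  # raise if the docker command fails
    ).stdout
    rows = [parse_stats_line(line) for line in out.splitlines() if line.strip()]
    return [r for r in rows if name_filter in r["name"]]
```

For example, `for r in container_stats(): print(r["name"], r["cpu_pct"], r["mem_pct"])` prints one line per matching container. If CPU and memory stay well below your limits under normal load, there is probably no need to raise them yet.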

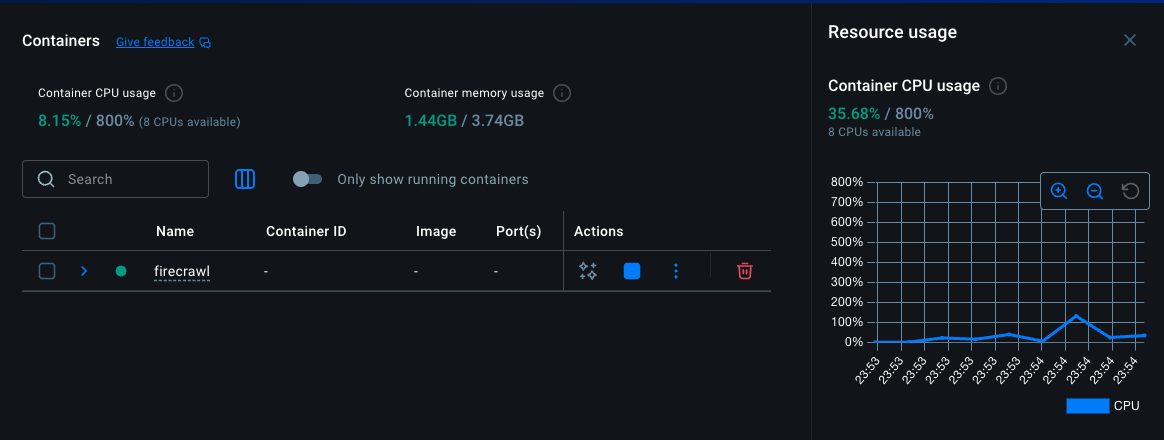

This is my container. So far it looks good, right?

Yeah, that's more than enough.

But what if I want to limit the jobs? How could we do that? Is the delay parameter enough?
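Pacing requests with a pause and capping how many run at the same time are two different controls. Independently of any Firecrawl setting, a client can enforce both itself. The sketch below is a minimal example assuming an asyncio client: an `asyncio.Semaphore` bounds the number of in-flight calls, and an optional pause spaces them out. `scrape` is a hypothetical coroutine you supply, for example one that sends a request to your self-hosted instance. `run_limited`, `max_in_flight`, and `delay_s` are illustrative names, not Firecrawl parameters.

```python
import asyncio
from typing import Awaitable, Callable, Iterable, TypeVar

T = TypeVar("T")


async def run_limited(
    urls: Iterable[str],
    scrape: Callable[[str], Awaitable[T]],
    max_in_flight: int = 4,
    delay_s: float = 0.0,
) -> list[T]:
    """Call `scrape(url)` for every URL, never running more than
    `max_in_flight` calls at once. `delay_s` adds a pause after each call
    while its slot is still held, which further lowers the request rate."""
    sem = asyncio.Semaphore(max_in_flight)

    async def one(url: str) -> T:
        async with sem:  # waits while max_in_flight calls are already running
            result = await scrape(url)
            if delay_s:
                await asyncio.sleep(delay_s)
            return result

    # gather() returns results in the same order as the input URLs.
    return await asyncio.gather(*(one(u) for u in urls))


if __name__ == "__main__":
    async def fake_scrape(url: str) -> str:
        await asyncio.sleep(0.01)  # stand-in for an HTTP call to the API
        return url.upper()

    print(asyncio.run(run_limited(["a", "b", "c"], fake_scrape, max_in_flight=2)))
```

Because the limit lives in the client, it works regardless of how the server is configured. However, it only throttles this one process. Several clients sending requests to the same instance would each need their own cap, or a limit enforced on the server side.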