Sidecar in Kubernetes template

My setup:

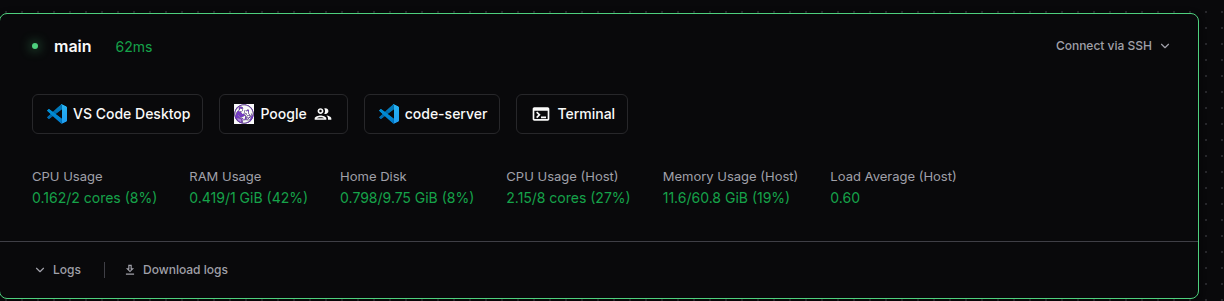

My server (Coder on Kubernetes/k3s, behind Traefik) > Cloudflare Tunnel. With the default Kubernetes template, everything has worked fine for the last couple of weeks with no issues.

Goal: I'm trying to set up a Node.js project that I can run and debug in real time with Coder.

My problem:

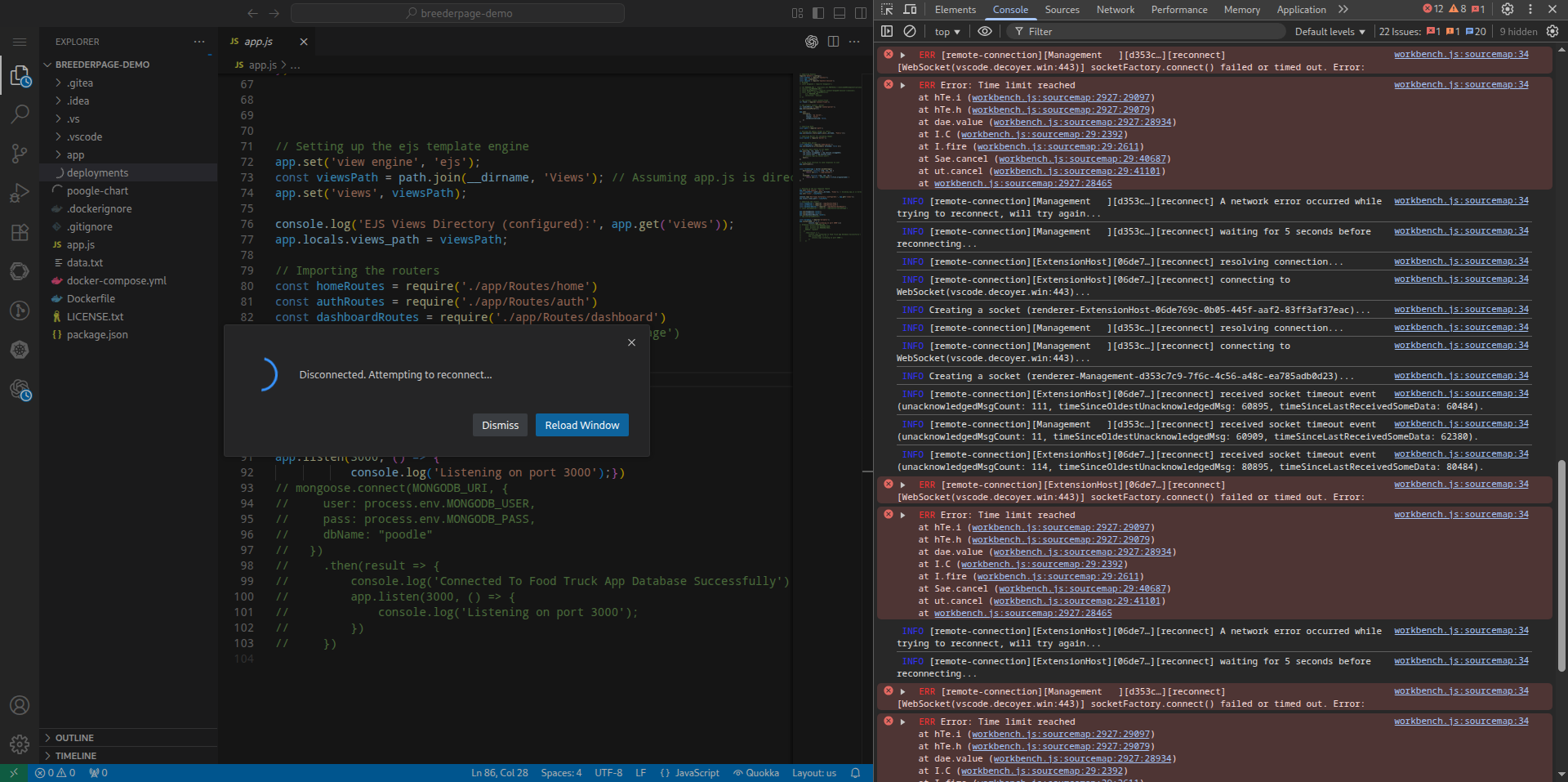

The workspace boots up just fine, and I can reach my "web" container through the "Poogle" app button; it works as expected. Just as I got my hopes up, the browser dev console started throwing WSS close code 1006 and failed WebSocket connections roughly every 2 seconds, and then the agent went unhealthy and crashed. This happens about 1 minute after I try to open a directory under /home/coder/breederpage-demo.
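For context on the error itself: 1006 is not a code a server ever sends. RFC 6455 reserves it for the client to report locally when the connection was lost without a Close frame, so it usually means something in the path (a proxy, a tunnel, or the agent process going away) dropped the TCP connection rather than Coder closing it cleanly. The small lookup below is only a reference sketch of the standard close codes; the helper names `describe_close_code` and `can_appear_in_close_frame` are hypothetical, not part of Coder or any library.

```python
# Standard WebSocket close codes from RFC 6455, section 7.4.1.
# 1005, 1006 and 1015 are reserved: they must never be sent in a Close
# frame and are only reported locally by the endpoint that observed them.
CLOSE_CODES = {
    1000: "normal closure",
    1001: "going away (endpoint shutting down or navigating away)",
    1002: "protocol error",
    1003: "unsupported data",
    1005: "no status code present (reserved, local only)",
    1006: "abnormal closure: connection lost without a Close frame (reserved, local only)",
    1007: "invalid frame payload data",
    1008: "policy violation",
    1009: "message too big",
    1010: "mandatory extension missing",
    1011: "internal server error",
    1015: "TLS handshake failure (reserved, local only)",
}

RESERVED_LOCAL_ONLY = {1005, 1006, 1015}


def describe_close_code(code: int) -> str:
    """Return a human-readable description of a WebSocket close code."""
    if code in CLOSE_CODES:
        return CLOSE_CODES[code]
    if 3000 <= code <= 3999:
        return "registered for libraries/frameworks"
    if 4000 <= code <= 4999:
        return "application-private"
    return "unknown"


def can_appear_in_close_frame(code: int) -> bool:
    """True if the peer could legitimately have sent this code on the wire."""
    return code not in RESERVED_LOCAL_ONLY


if __name__ == "__main__":
    print(1006, describe_close_code(1006), can_appear_in_close_frame(1006))
```

Because `can_appear_in_close_frame(1006)` is `False`, a 1006 in the dev console says nothing about why the far end closed; the server, Traefik, or tunnel logs are where the reason would show up.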

Checking with kubectl, I found these logs right after it crashed:

At first I thought it was an issue between Traefik and Cloudflare, but after pointing the Coder domain at my server's local IP (by editing /etc/hosts), I still get the same issue, so here I am. My best guess is that something needs to be configured between Traefik and Coder?
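One way to narrow down the Traefik/Coder hypothesis is to check, outside the browser, whether Traefik actually completes the WebSocket upgrade to Coder. The sketch below does a raw RFC 6455 handshake with only the Python standard library and verifies the `101 Switching Protocols` status and the `Sec-WebSocket-Accept` header. Assumptions, all hypothetical: the host and path are whatever failing `wss://` URL the dev console shows; Coder endpoints usually need authentication, so you may have to pass something like a `Coder-Session-Token` header via `extra_headers` (check the Coder API docs); and Traefik's certificate must be trusted by your machine, since the default SSL context verifies it.

```python
import base64
import hashlib
import os
import socket
import ssl
from typing import Dict, Optional, Tuple

# Fixed GUID from RFC 6455, section 1.3; servers use it to derive
# Sec-WebSocket-Accept from the client's Sec-WebSocket-Key.
WS_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"


def expected_accept(key: str) -> str:
    """Sec-WebSocket-Accept value a compliant server must return for `key`."""
    digest = hashlib.sha1((key + WS_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")


def build_upgrade_request(host: str, path: str, key: str,
                          extra_headers: Optional[Dict[str, str]] = None) -> bytes:
    """Build a minimal HTTP/1.1 WebSocket upgrade request."""
    lines = [
        f"GET {path} HTTP/1.1",
        f"Host: {host}",
        "Upgrade: websocket",
        "Connection: Upgrade",
        f"Sec-WebSocket-Key: {key}",
        "Sec-WebSocket-Version: 13",
    ]
    for name, value in (extra_headers or {}).items():
        lines.append(f"{name}: {value}")
    return ("\r\n".join(lines) + "\r\n\r\n").encode("ascii")


def parse_response_head(head: bytes) -> Tuple[int, Dict[str, str]]:
    """Split an HTTP response head into (status code, lowercase-keyed headers)."""
    status_line, *header_lines = head.decode("iso-8859-1").split("\r\n")
    status = int(status_line.split(" ", 2)[1])
    headers: Dict[str, str] = {}
    for line in header_lines:
        if ":" in line:
            name, value = line.split(":", 1)
            headers[name.strip().lower()] = value.strip()
    return status, headers


def check_upgrade(host: str, path: str, port: int = 443, use_tls: bool = True,
                  server_name: Optional[str] = None,
                  extra_headers: Optional[Dict[str, str]] = None) -> bool:
    """Return True if the endpoint answers 101 with a valid Sec-WebSocket-Accept."""
    sni = server_name or host  # lets you connect to an IP but present the domain
    key = base64.b64encode(os.urandom(16)).decode("ascii")
    raw = socket.create_connection((host, port), timeout=10)
    sock = ssl.create_default_context().wrap_socket(raw, server_hostname=sni) if use_tls else raw
    with sock:
        sock.sendall(build_upgrade_request(sni, path, key, extra_headers))
        head = b""
        while b"\r\n\r\n" not in head:
            chunk = sock.recv(4096)
            if not chunk:
                raise ConnectionError("connection closed during handshake")
            head += chunk
    status, headers = parse_response_head(head.split(b"\r\n\r\n", 1)[0])
    print(f"HTTP {status}, upgrade={headers.get('upgrade')!r}")
    return status == 101 and headers.get("sec-websocket-accept") == expected_accept(key)
```

Running `check_upgrade("<coder-domain>", "<path-from-devtools>")` once through the Cloudflare Tunnel and once against the local IP (passing the domain as `server_name`) would show whether the upgrade itself is being blocked. If both return `True`, the handshake path through Traefik is likely fine, and the drop after about a minute is more likely something that happens after the connection is established.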

This is as far as I can get debugging this issue. Can someone help?