Hi!

Let’s say I need 3-4 streams (as per the current limits which are 5MB/s) to handle spikes in load

- Is it possible to direct the corresponding pipelines to a single Sink ?

- If the Sink is an R2 bucket, can I ensure, using custom partitioning, that files are written in lexical order? (I had issues with legacy Pipelines, where files would be created in R2 out of order.)

Use case : events ingestion using Cloudflare Pipelines -> R2 -> Clickhouse Clickpipes S3 integration with continuous ingest (requires lexical ordering of files)

11 Replies

Okay so for first bullet, I managed to direct 2 streams to 1 pipeline using SQL like :

For the file-ordering question (your second bullet), I think the old version had that issue because of the way it sharded partitions, with no coordination between them when writing to R2. We are now using something completely different to write to R2 (based on Arroyo), so this shouldn’t be the case, but @Micah | Data Platform or @cole | pipelines can confirm for me.

It is possible to union multiple streams, but we can also increase limits for your stream so that's not necessary — DM me your account and stream id and we can discuss

We write files by default with ULID names (https://github.com/ulid/spec), which are lexicographically sortable by creation time. We support custom partitioning by date/time fields (see https://developers.cloudflare.com/pipelines/sinks/available-sinks/r2/#partitioning), and in general there will be a single writer, so files will always be written in order.
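To illustrate why ULID names sort in creation order, here is a minimal sketch of the encoding described in the ULID spec (48-bit millisecond timestamp followed by 80 random bits, written in Crockford base32). The `encode_ulid` helper is hypothetical and written only for this illustration; it is not the code Pipelines uses.

```python
import os

# Crockford base32 alphabet from the ULID spec. Its characters are in
# ascending ASCII order, so string comparison matches numeric comparison.
CROCKFORD = "0123456789ABCDEFGHJKMNPQRSTVWXYZ"


def encode_ulid(timestamp_ms: int, randomness: bytes) -> str:
    """Hypothetical helper: build a 26-character ULID string."""
    if not 0 <= timestamp_ms < 2**48:
        raise ValueError("timestamp must fit in 48 bits")
    if len(randomness) != 10:
        raise ValueError("randomness must be exactly 10 bytes (80 bits)")
    # The timestamp occupies the most significant bits, so it dominates sorting.
    value = (timestamp_ms << 80) | int.from_bytes(randomness, "big")
    chars = []
    for _ in range(26):
        chars.append(CROCKFORD[value & 0x1F])
        value >>= 5
    return "".join(reversed(chars))


# Names generated at increasing timestamps are already in lexical order,
# whatever the random part happens to be.
names = [encode_ulid(ts, os.urandom(10)) for ts in (1_000, 2_000, 3_000)]
print(sorted(names) == names)
```

Two names created within the same millisecond differ only in their random part, so their relative order is not guaranteed by this basic scheme.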

Hi @Micah Wylde , thanks, I realise just now that I missed your last message, thanks for the explanation!

I was wondering what kind of limits we could expect in terms of MB/s from a single Stream once the beta is over?

We currently have a baseline of 10-15 MB/s with spikes at 30-40 MB/s sent to AWS Kinesis straight from cloudflare workers

I am guessing there also has to be a limit in throughput at sink level, where at some point several sinks have to be used?

For GA, we're targeting 1GB/s per stream. Today we can up your streams to 50MB/s.

Also—the ingest limits are computed over an hour, so short spikes above the limit aren't a problem.
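As a rough illustration of why short spikes fit under an hourly limit, the sketch below averages a made-up traffic profile over one hour. It assumes the limit is compared against a plain average over the hour, which the thread does not spell out; the function name and the numbers are hypothetical.

```python
def hourly_average_mb_per_s(segments):
    """Average rate over one hour.

    segments: list of (duration_seconds, rate_mb_per_s) pairs that
    together cover exactly 3600 seconds.
    """
    total_seconds = sum(duration for duration, _ in segments)
    if total_seconds != 3600:
        raise ValueError("segments must cover exactly one hour")
    total_mb = sum(duration * rate for duration, rate in segments)
    return total_mb / 3600


# Hypothetical profile: 50 minutes at 12 MB/s plus a 10 minute spike at 40 MB/s.
profile = [(50 * 60, 12.0), (10 * 60, 40.0)]
average = hourly_average_mb_per_s(profile)
print(f"{average:.2f} MB/s averaged over the hour")
```

With this profile the hourly average is about 16.67 MB/s, so under the stated assumption a 40 MB/s spike lasting ten minutes would still sit below a 20 MB/s limit.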

There are no throughput limits for sinks (although in practice it's somewhat limited by how much data you can get in)

Hi @Micah Wylde, I have DMed you the stream ID I am going to use for testing, in case it's possible to up it

Sure, I'll up that stream to 20MB/s

Thanks!

Hi @Micah | Data Platform,

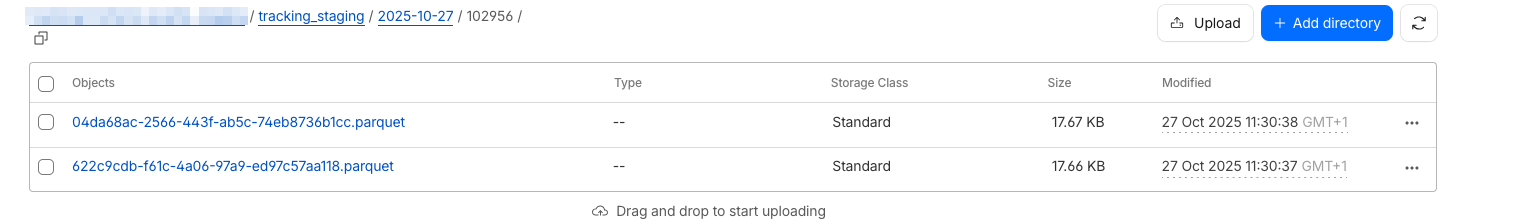

do you know how to enable the ULID format? On my side the names look like UUIDs, so files are not sorted by time

I am using %F/%H%M%S partitioning, so it means in my example those 2 files belonged to the same "second" partition despite containing relatively few events 🤔
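For reference, here is a small sketch of how a strftime-style pattern like %F/%H%M%S groups timestamps. It assumes the sink interprets the pattern with standard strftime semantics; %F is spelled out as %Y-%m-%d because Python's support for %F depends on the platform. The timestamps and the `partition_prefix` helper are made up for the example.

```python
from datetime import datetime, timezone

# Portable spelling of %F/%H%M%S (%F is shorthand for %Y-%m-%d).
PATTERN = "%Y-%m-%d/%H%M%S"


def partition_prefix(ts: datetime) -> str:
    """Hypothetical helper: partition prefix for an event timestamp."""
    return ts.strftime(PATTERN)


# Two events inside the same second, and one in the next second.
a = datetime(2025, 1, 1, 12, 0, 0, 100_000, tzinfo=timezone.utc)
b = datetime(2025, 1, 1, 12, 0, 0, 900_000, tzinfo=timezone.utc)
c = datetime(2025, 1, 1, 12, 0, 1, tzinfo=timezone.utc)

prefixes = [partition_prefix(ts) for ts in (a, b, c)]
print(prefixes)
```

Prefixes built this way sort chronologically as strings, but anything written within the same second shares a prefix, so the order of files inside that prefix depends entirely on the file name.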

Hi Stephane, we actually just changed the default format from uuid v4 to uuid v7, which has the same time-ordering property as ulid. If you want to specifically use ulid, you can specify the format via the file_naming.strategy option on the sink config (supported options are serial, uuid, uuid_v7, and ulid).

Oh that's even better, thanks