**Pipeline ID** -

Pipeline ID - 8428c7b24c4b44609b11c8bd9319f7b1

Event production method - Worker Binding - tesdash-signal-processor

Sample events - {"signal":"FAAAAAAAD.....=","received":1759446232076,"session_id":"c985bc9d-d018-4176-ab42-6e04c84e770b"}

Wrangler version - 4.41.0

I am trying to publish events to a pipeline for simple R2 archival. I have tried various iterations of the pipeline (different schemas, batch sizes, rollover sizes), but my pipeline is reporting no events and not creating any files.

I am using the default SQL insert - I do not intend for any transformation to occur.

INSERT INTO tesdash_signal_archive_sink SELECT * FROM tesdash_signal_archive_stream;

I also had required fields before but made them all optional:

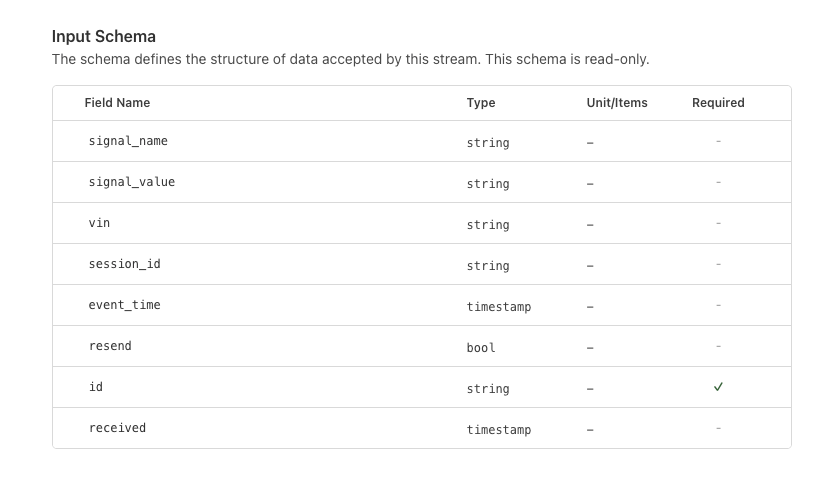

Stream input schema:

| Field Name | Type | Unit/Items | Required |
|------------|-----------|-------------|----------|
| signal | string | | No |
| received | timestamp | millisecond | No |
| session_id | string | | No |

I also had the signal field set as binary, but moved it to a string and still have no events showing.

The worker is not throwing an exception when invoking the binding.
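For reference, a minimal sketch of how the binding call could be wrapped so a failed send is always surfaced. The `StreamBinding` interface and the `publishSignal` helper are assumptions for illustration, not the generated Worker types; the only fact relied on is that a rejected promise that is never awaited will not show up as an exception.

```typescript
// Assumed shape of the stream binding; check the generated Worker types
// for the real definition.
interface StreamBinding {
  send(records: object[]): Promise<void>;
}

// Mirrors the sample event in this post.
interface SignalEvent {
  signal: string; // base64-encoded payload
  received: number; // epoch milliseconds
  session_id: string;
}

// Hypothetical helper: awaits the send and reports the outcome, so a
// rejection cannot go unnoticed.
async function publishSignal(
  stream: StreamBinding,
  event: SignalEvent,
): Promise<boolean> {
  try {
    await stream.send([event]);
    return true;
  } catch (err) {
    console.error("stream send failed", err);
    return false;
  }
}
```

A `true` result here only means the binding call resolved; it does not prove the event passed schema validation or reached the sink.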


Ideally I would like a long and large batch (6h and 1024 MB), but I can't even get this one working with a short-lived roll:

✔ The base prefix in your bucket where data will be written (optional): …

✔ Time partition pattern (optional): … year=%Y/month=%m/day=%d

✔ Output format: › JSON

✔ Roll file when size reaches (MB, minimum 5): … 5

✔ Roll file when time reaches (seconds, minimum 10): … 10

✔ Automatically generate credentials needed to write to your R2 bucket? … yes

Stream: tesdash_signal_archive_stream

• HTTP: Disabled

• Schema: 3 fields

Sink: tesdash_signal_archive_sink

• Type: R2 Bucket

• Bucket: tesdash-signal-archive

• Format: json

✔ Create stream and sink? … yes
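To know where to look in the bucket once files do appear, here is a small sketch of how the time partition pattern above would expand. It assumes strftime-style specifiers evaluated in UTC; which timestamp the sink actually uses for partitioning is not stated in this thread, and `expandPartition` is a hypothetical helper.

```typescript
// Hypothetical helper: expands the %Y, %m and %d specifiers of a
// strftime-style pattern using the UTC date.
function expandPartition(pattern: string, when: Date): string {
  const pad = (n: number): string => String(n).padStart(2, "0");
  return pattern
    .replace(/%Y/g, String(when.getUTCFullYear()))
    .replace(/%m/g, pad(when.getUTCMonth() + 1))
    .replace(/%d/g, pad(when.getUTCDate()));
}

// The `received` value from the sample event, used as an example instant.
const examplePrefix = expandPartition(
  "year=%Y/month=%m/day=%d",
  new Date(1759446232076),
);
console.log(examplePrefix);
```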

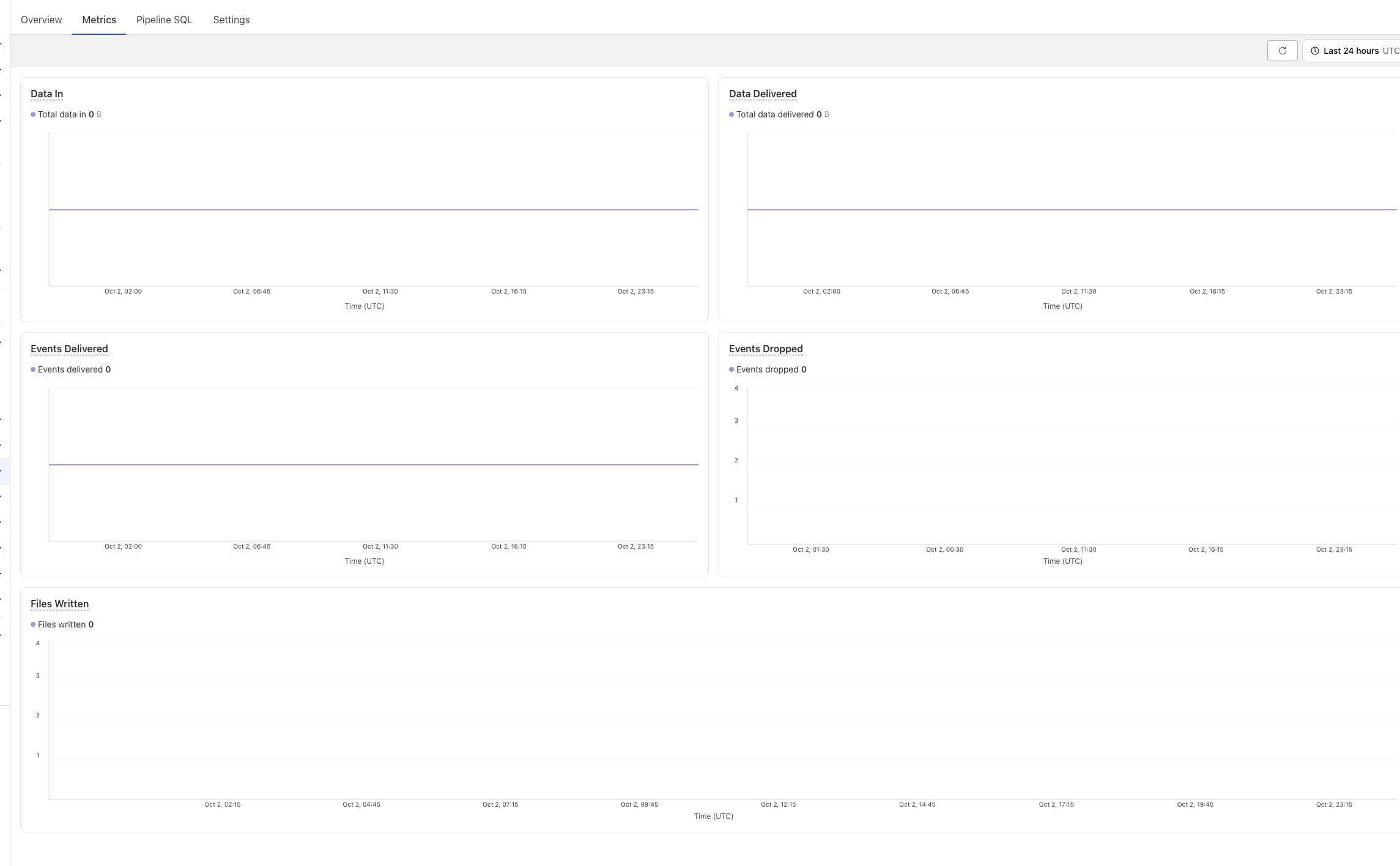

Metrics are flat despite publishing a couple dozen events.

I tried a couple of other things, also with no luck:

1.) Create a new pipeline, stream, and sink with a web endpoint and post multiple requests to it.

Got {"success":true,"result":{"committed":1}} every time and posted at least 5MB of data. The dashboard still shows no events and there are no file operations in R2.

2.) Created a new stream with the config mentioned above with the received key set to int32 instead of timestamp.

Same behaviour as above.
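The HTTP test in step 1 can be sketched roughly as follows. The endpoint URL is whatever the stream reports and is not reproduced here; sending a JSON array as the request body is an assumption, while the response shape is taken from the reply quoted above. `postEvents` and `FetchLike` are hypothetical names.

```typescript
// Response shape as seen in this thread.
interface IngestResponse {
  success: boolean;
  result?: { committed: number };
}

// Minimal subset of fetch, injected so the function can be exercised
// without a network call.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Hypothetical helper: posts a batch and returns the committed count.
async function postEvents(
  endpoint: string,
  events: object[],
  fetchImpl: FetchLike,
): Promise<number> {
  const res = await fetchImpl(endpoint, {
    method: "POST",
    headers: { "content-type": "application/json" },
    body: JSON.stringify(events), // assumption: body is a JSON array
  });
  if (!res.ok) throw new Error(`ingest endpoint returned HTTP ${res.status}`);
  const body = (await res.json()) as IngestResponse;
  if (!body.success || !body.result) {
    throw new Error("ingest endpoint reported failure");
  }
  return body.result.committed;
}
```

As the results above show, a non-zero committed count only confirms the stream accepted the request, not that events were written to R2.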

I should also note that when creating these in the UI, I did receive an error message on create. However, when I looked in the UI afterwards, I saw that all three instances (pipeline, stream, and sink) had been created with the right settings.

Created test instances:

095dfcdd6ead4e618d297d6b04d6a2e2

2a4d34cca2d34864914797d4242c70fb

Hey Adam - we'll look into this today

Looks like there might be some deserialization errors - can you share the schema and some sample messages you're sending over?
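One way to rule out obvious mismatches before sending is to check a sample message against the stream schema locally. This is only a sketch: `checkEvent` and the `FieldSpec` type are hypothetical, the schema is copied from the three-field table earlier in the thread, and the check does not reproduce the service's real deserializer.

```typescript
type FieldType = "string" | "timestamp_ms";

interface FieldSpec {
  name: string;
  type: FieldType;
  required: boolean;
}

// The stream input schema from the original post (all fields optional).
const streamSchema: FieldSpec[] = [
  { name: "signal", type: "string", required: false },
  { name: "received", type: "timestamp_ms", required: false },
  { name: "session_id", type: "string", required: false },
];

// Hypothetical local check: returns a list of human-readable problems.
function checkEvent(
  event: Record<string, unknown>,
  schema: FieldSpec[],
): string[] {
  const problems: string[] = [];
  for (const field of schema) {
    const value = event[field.name];
    if (value === undefined || value === null) {
      if (field.required) problems.push(`${field.name}: missing required field`);
      continue;
    }
    if (field.type === "string" && typeof value !== "string") {
      problems.push(`${field.name}: expected string, got ${typeof value}`);
    }
    if (
      field.type === "timestamp_ms" &&
      !(typeof value === "number" && Number.isInteger(value))
    ) {
      problems.push(`${field.name}: expected integer epoch milliseconds`);
    }
  }
  return problems;
}
```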

Hey @Marc

I was able to resolve my archival pipeline issues. I stood up another pipeline to use the data catalog and I am seeing the same issue:

    {
      "signal_name": "NUMBRICKVOLTAGEMAX",
      "signal_value": "13",
      "vin": "5YJSA1...",
      "received": 1759523454876,
      "session_id": "e7cd8293-10e2-4bac-bac3-94369f4d94c4",
      "event_time": 1759523451438,
      "resend": false,
      "id": "229c5e74-4efc-4fbf-9953-5e8bbcd43ec6"
    }

Pipeline 0ad556f26a2f4376a313a6a3a4c45549

Is it going to be an issue that signal_value is a quote-wrapped non-string? The underlying value can be a bool, number, or object, but it will always be passed as a string.

It shouldn't matter if it's a string, let me try to recreate
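For context, the producer side of "always passed as a string" could look something like this sketch. `toSignalValue` is a hypothetical name, and using `JSON.stringify` for non-string values is an assumption about how the values are encoded, not something stated in the thread.

```typescript
// Hypothetical helper: strings pass through unchanged, everything else is
// JSON-encoded so the field always matches a string column.
function toSignalValue(value: boolean | number | string | object): string {
  return typeof value === "string" ? value : JSON.stringify(value);
}

// Example record using field names from the payload above.
const exampleRecord = {
  signal_name: "NUMBRICKVOLTAGEMAX",
  signal_value: toSignalValue(13),
  resend: false,
};
console.log(exampleRecord.signal_value);
```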

Thanks. FWIW, I did recreate it and the ID is now 05eaf40598214de19fdcf26b81b833df. The Target Row Group Size was not configured right.

Still not seeing any events flow

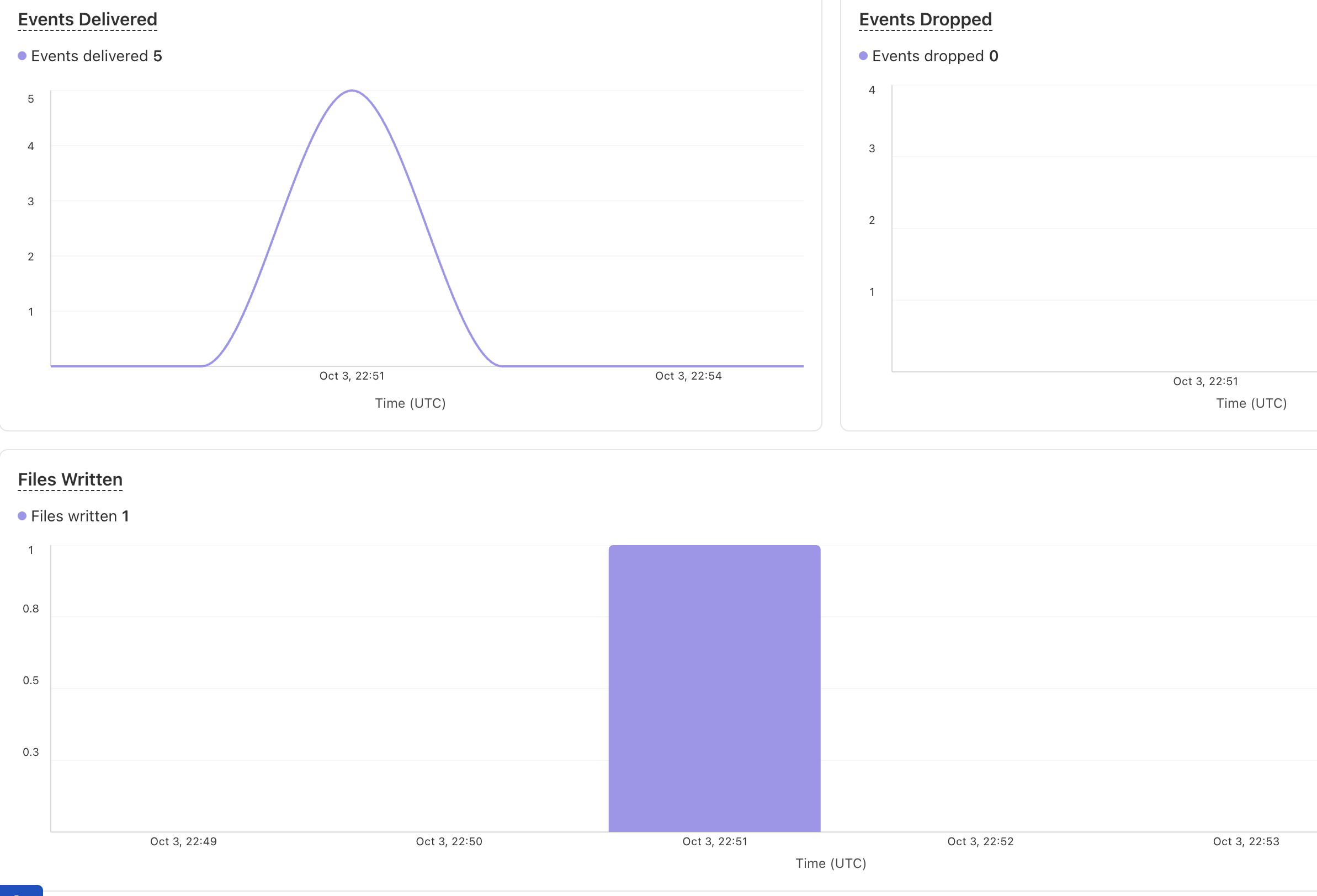

ok, started my test

I copied and pasted that payload and posted it 5 times -

so something else seems to be going on then

I am actually now seeing events in mine after recreation (no change in payload). I may be completely off base here, but I wonder if there is a provisioning fault. When I created it before, I got an internal server error. On the recreation I did not. Both times the pipeline, stream, and sink were all listed in the UI correctly.

Either way, my issue is resolved, but I have been getting a lot of errors using the UI for provisioning, metrics viewing, and viewing pipeline config over the last 24h.

did you delete the older sinks before doing it this time?

I have deleted the pipeline, sink, and stream on every recreation

Either way, all good now. Everything is created and working as expected. Thanks for the help!

There was probably a validation issue with one of the earlier pipelines - that or we fixed a bug in between tests (we fixed a lot this week heh) - let us know if you need anything else!

Hi Adam, you mentioned trying binary earlier and not seeing events. I wanted to let you know that we had a bug with binary fields that is now fixed.

Ahh perfect, thank you for the heads up