"RuntimeError: memory access out of bounds" in Rust queue consumer

I'm seeing a recurring issue where, after multiple hours of being up, a Worker consuming from a Cloudflare Queue will start erroring with RuntimeError: memory access out of bounds.

Once the Worker is in this state, all invocations fail with this same error until we deploy the Worker again.

Prior to getting into this state, I have observed two other categories of errors:

1. An error indicating that the "Workers runtime canceled this request"

2. A RangeError: Invalid array buffer length error:

The stack trace for this one will vary, which I suspect is indicative of a broader memory issue within the Wasm instance/V8 isolate.

A couple of other things to note:

1. We are not using the worker crate. We have our own library that we've written atop wasm_bindgen bindings to the Cloudflare Workers APIs.

2. We have a number of different queue consumers, but this issue is limited to one specific consumer.

3. We are using console_error_panic_hook as our panic hook.

While investigating this, I found this GitHub Issue that seems to describe the same scenario: https://github.com/cloudflare/workerd/issues/3264

And while I would like to ultimately get to the root cause of why the Worker ends up in this state in the first place, right now I would be happy with a solution that allows for getting the Worker back into a healthy state.

I've already successfully been able to have the Worker detect when it is in this unhealthy state, but I don't know what the best way is to get it back into a working state without requiring a redeploy.
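For anyone following along, here is a minimal sketch of what that detection could look like. It is not the code we actually run: the names `FATAL_MARKERS`, `is_fatal_wasm_error`, and `Health` are hypothetical, and it only matches on the two error messages quoted above. In practice this check probably belongs in the JS shim rather than inside the Wasm instance, since state kept in a corrupted Wasm memory may not be trustworthy.

```rust
/// Error message fragments seen in this thread once the Wasm instance is in a
/// bad state. Hypothetical list; extend it with whatever your logs show.
const FATAL_MARKERS: [&str; 2] = [
    "memory access out of bounds",
    "Invalid array buffer length",
];

/// Returns true when an error message looks like one of the unrecoverable
/// Wasm memory errors, as opposed to an ordinary per-message failure.
pub fn is_fatal_wasm_error(message: &str) -> bool {
    FATAL_MARKERS.iter().any(|marker| message.contains(marker))
}

/// Tracks whether this instance has ever reported a fatal error.
#[derive(Debug, Default)]
pub struct Health {
    unhealthy: bool,
}

impl Health {
    /// Record an error message. Once a fatal one is seen, the instance is
    /// considered unhealthy for the rest of its lifetime.
    pub fn observe(&mut self, message: &str) {
        if is_fatal_wasm_error(message) {
            self.unhealthy = true;
        }
    }

    /// True if any fatal error has been observed so far.
    pub fn is_unhealthy(&self) -> bool {
        self.unhealthy
    }
}
```

The flag is deliberately sticky: later non-fatal errors do not clear it, which matches the observed behavior that the Worker never recovers on its own.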

Is there a way that I can signal to the runtime that it should terminate the isolate and start a new one?

I've tried using a panic! to do this, but that doesn't seem to help (as the panic will still be on the Wasm side of things).

Would throwing an error on the JS side of things be enough?

I haven't figured out a solution here yet.

I did, however, increase the max_concurrency of the queue consumer from 5 to 10, as well as limit the max_batch_size to 1:

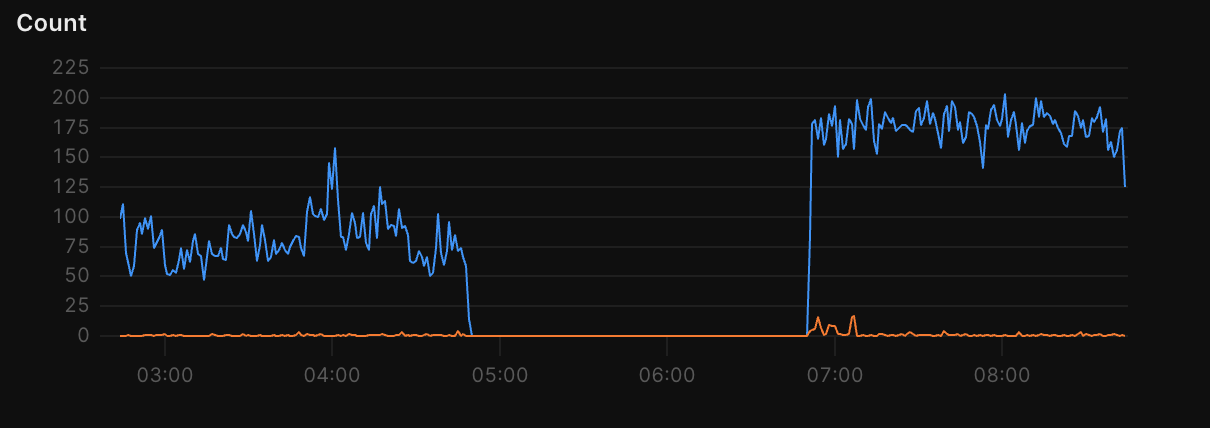

After doing this, the failure mode changed, with the queue consumer getting into the invalid state much more quickly after a redeploy (the valleys on this graph are when it is in the bad state and not processing any messages):

This seems to support my working theory that this is due to some sort of memory leak, and having more consumers exacerbates the problem.

Right now my line of inquiry is following these docs to do some memory profiling of the queue consumer locally to see if I can spot where a leak might be.

Unfortunately, I don't have a concrete reproduction of the problem yet.

Cloudflare Docs, "Profiling Memory": Understanding Worker memory usage can help you optimize performance, avoid Out of Memory (OOM) errors when hitting Worker memory limits, and fix memory leaks.

Make sure your compat date is set to a recent date for finalization registry support and that you are running the latest workers-rs and wasm-bindgen. You likely have a memory leak due to a binding that cannot be disposed. This can be easy to spot in the Chrome inspector memory profiles. All Wasm objects are usually wrapped in finalization registry handling.

another thing to check here is if there is a panic happening resulting in undisposed memory

we are working on adding unwind support to wasm bindgen to ensure recoverable panics

but if it is that, you can plug into the new reinitialization support we added to wasm bindgen which we use by default for workers-rs now (https://github.com/wasm-bindgen/wasm-bindgen/pull/4644)

this effectively resets the wasm back to as if it just started, but also requires a proxy on the entry points to ensure proper handling which we carefully do in workers-rs
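To make the proxy idea a bit more concrete, here is a toy model of the control flow in plain Rust. It is only an illustration: in wasm-bindgen the actual reset happens in the generated JS glue by re-instantiating the module, and `Instance`, `Proxy`, and `call` are hypothetical names, not part of any real API.

```rust
/// Hypothetical stand-in for the state held by one Wasm instance.
pub struct Instance {
    pub generation: u32,
    pub handled: u32,
}

/// Proxy that every entry point goes through. It owns the instance and
/// replaces it with a fresh one after a failure instead of reusing bad state.
pub struct Proxy {
    instance: Instance,
    poisoned: bool,
    next_generation: u32,
}

impl Proxy {
    pub fn new() -> Self {
        Proxy {
            instance: Instance { generation: 0, handled: 0 },
            poisoned: false,
            next_generation: 1,
        }
    }

    /// Run one entry point. A failed call poisons the instance; the next call
    /// starts from a fresh instance, as if the module had just been loaded.
    pub fn call<T>(
        &mut self,
        entry: impl FnOnce(&mut Instance) -> Result<T, String>,
    ) -> Result<T, String> {
        if self.poisoned {
            self.instance = Instance { generation: self.next_generation, handled: 0 };
            self.next_generation += 1;
            self.poisoned = false;
        }
        let result = entry(&mut self.instance);
        if result.is_err() {
            self.poisoned = true;
        }
        result
    }

    /// Generation of the instance currently held by the proxy.
    pub fn generation(&self) -> u32 {
        self.instance.generation
    }
}
```

The point of routing everything through `call` is that an entry point which bypasses the proxy would keep using the old, possibly corrupted instance. Note that this toy version does nothing for calls that are already in flight when the reset happens.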

in summary:

- Ensure the finalization registry is working and test for leaks with Chrome memory profiles. Miniflare can make this easier.

- Perhaps add a Rust panic handler with some logging to see if you are getting panics resulting in undisposed state, and if so implement panic reinitialization. There are also some other, sneakier approaches to informing runtime termination here that I'm happy to share if it is this.

But it's likely going to be one of the two above.
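For the panic handler suggestion, a minimal std-only sketch could look like the following. The names `PANICKED`, `PANIC_COUNT`, `install_panic_hook`, and `has_panicked` are made up for illustration, and `eprintln!` stands in for whatever logging reaches the Worker's console (for example, forwarding to console_error_panic_hook).

```rust
use std::panic;
use std::sync::atomic::{AtomicBool, AtomicUsize, Ordering};

/// Set once any panic has been observed in this instance.
pub static PANICKED: AtomicBool = AtomicBool::new(false);
/// Number of panics observed so far, useful for logging.
pub static PANIC_COUNT: AtomicUsize = AtomicUsize::new(0);

/// Install a panic hook that logs the panic and records that it happened.
pub fn install_panic_hook() {
    panic::set_hook(Box::new(|info| {
        PANIC_COUNT.fetch_add(1, Ordering::SeqCst);
        PANICKED.store(true, Ordering::SeqCst);
        // Assumption: in a Worker this would be routed to console.error;
        // eprintln! keeps the sketch free of external crates.
        eprintln!("panic observed: {info}");
    }));
}

/// True if the hook has seen at least one panic since it was installed.
pub fn has_panicked() -> bool {
    PANICKED.load(Ordering::SeqCst)
}
```

An entry-point wrapper can then check `has_panicked()` before handling each queue batch and decide whether to reinitialize or refuse work, rather than continuing on state left behind by a panic.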

Thank you! I'll pursue some of these avenues tomorrow and see what turns up

Update on this:

On Tuesday I did a bunch of work to get us set up to use the reinitialization support (had to implement it ourselves, since we're not using workers-rs). That was then deployed to production on Tuesday.

On Wednesday and Thursday morning when I checked our logs, we were still seeing the same gaps in queue processing that we were seeing when running into the memory access issues. This time, however, the issue seemed to self-heal after a couple of hours.

I'm also not seeing the same errors in the logs that I was previously seeing whenever the Wasm instance/V8 isolate would become unhealthy.

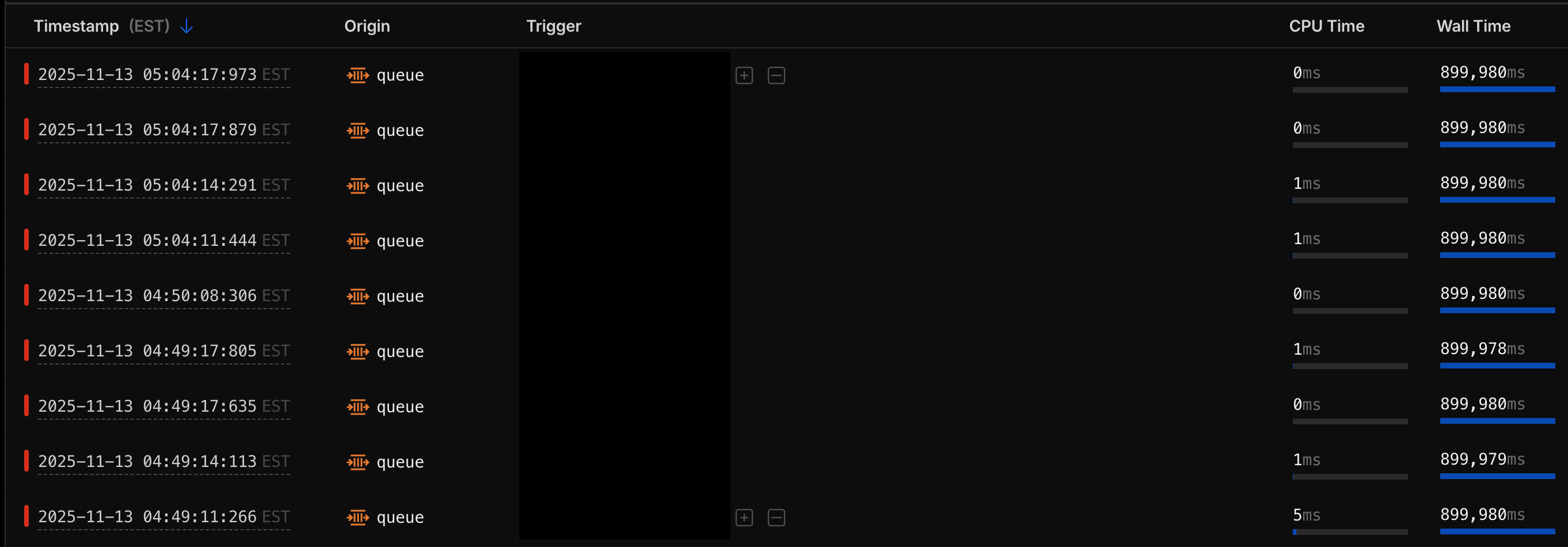

Interestingly, I'm also not seeing the messages in the logs that would indicate that the Wasm instance reinitialization path is being hit (still digging into this to figure out why).

You can see here that the queue consumer stops processing any messages at 4:50am ET and then starts processing them again at 6:51am ET.

The other behavior I noticed that is different than before is that during the period where no messages from the queue are being successfully processed, the logs I see in the Observability tab show a very high Wall Time, with no other logs in the entry:

All that to say, I think the situation seems better than it was before (especially since it appears that it can self-heal now), but it doesn't seem like the issue has been entirely resolved yet.

Right before the queue consumer stopped processing messages, I do see these two errors:

Thinking about this a bit more, I suppose it's possible that we're not actually going down the same panic/memory leak path, as a number of things have changed since the last time I saw it.

In particular, I changed the compatibility date from 2025-01-27 to 2025-10-11, which could have impacted the behavior (and I didn't have the chance to observe the failure case with the new compatibility date before I also added in the reinitialization support).

But this could mean that the change in behavior was the result of bumping the compatibility date (since we weren't using the finalization registry support before).

The proxy reinitialization handler has to be able to handle all incoming paths, and also all types of errors. A global error handler (for closure errors on the task queue) and a JS error handler are also needed in addition to the panic handler. So getting full coverage is important for stability in all these cases.

The "Workers runtime cancelled this request" timeout error is actually expected with reinitialization: any other in-flight requests are stalled, as they are not tracked by the reinitialization process. This is why we are migrating away from full reinitialization and toward plain termination at this point. When I alluded earlier to "tricking reinitialization", this is what I was referring to. I believe you can basically just allocate a buffer over the memory limit to force termination. We are working on first-class APIs for Wasm for this, which, when combined with exception-handling unwinds, is pretty much the full story we are aiming for with workers-rs here.
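As a rough illustration of the "allocate over the memory limit" idea, here is a std-only sketch. It rests on several assumptions: that the documented 128 MB Workers memory limit is the relevant threshold, that exceeding it causes the runtime to discard the isolate, and that an allocation made from Rust (which grows Wasm linear memory) behaves the same way as a JS-side buffer would. None of that is confirmed in this thread, and `WORKER_MEMORY_LIMIT_BYTES` and `allocate_past` are hypothetical names.

```rust
/// Assumed threshold: the documented Workers memory limit of 128 MB.
pub const WORKER_MEMORY_LIMIT_BYTES: usize = 128 * 1024 * 1024;

/// Allocate `chunk_bytes`-sized buffers until strictly more than
/// `limit_bytes` is held, and return them so they stay alive.
///
/// On a native target with a small limit this simply returns. In a Worker,
/// the expectation (unverified) is that the process never gets this far
/// because the runtime terminates the isolate first.
pub fn allocate_past(limit_bytes: usize, chunk_bytes: usize) -> Vec<Vec<u8>> {
    assert!(chunk_bytes > 0, "chunk_bytes must be non-zero");
    let mut held = Vec::new();
    let mut total = 0usize;
    while total <= limit_bytes {
        // Fill with a non-zero byte so the memory is actually written to,
        // not just reserved.
        held.push(vec![0xAA_u8; chunk_bytes]);
        total += chunk_bytes;
    }
    held
}
```

In a Worker this would be called as `allocate_past(WORKER_MEMORY_LIMIT_BYTES, 8 * 1024 * 1024)` only after the instance has been judged unrecoverable. Be aware that a failed allocation in Rust may simply abort the Wasm instance rather than end the isolate, so this is worth testing locally before relying on it.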

The gap there very much still looks like degradation, which could be due to any invalid state or uncaught exception. Hard to say exactly what. If you're able to share a reproduction of some form, I'm happy to take a look too, or help however I can.