Worker force exit under high-concurrency HyperDrive connections

Hello, I've been trying Cloudflare features I hadn't experimented with before, and ended up at HyperDrive.

My service is growing very fast, so it has outgrown the free tier and my costs keep doubling every month. That's why I decided to try HyperDrive instead of D1.

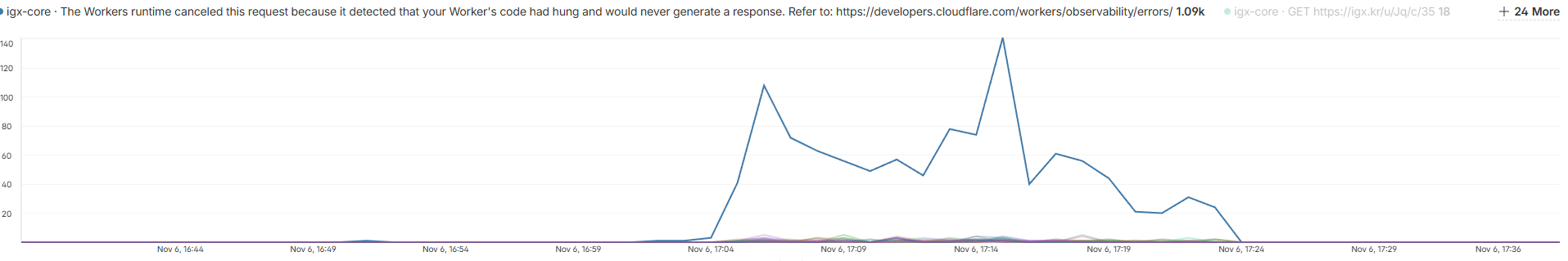

It worked great at first, but after an hour of monitoring I saw a serious problem: HyperDrive connections cause Worker timeouts that end in a force exit.

I don't know why, but I'm guessing it's related to the large number of concurrent database connections.

My service averages 10 requests per second, which means I need 30+ concurrent connections during traffic bursts.
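As a rough sanity check on that number, Little's law relates average concurrency $L$ to the arrival rate $\lambda$ and the time $W$ each request holds a connection. This assumes one database connection per request, held for the whole request, which the post doesn't state; the values below only show how the quantities trade off and are not measurements.

$$
L = \lambda W
\quad\Longrightarrow\quad
W = \frac{L}{\lambda} = \frac{30}{10\ \text{req/s}} = 3\ \text{s}
$$

So 30 concurrent connections at an average of 10 req/s corresponds to each connection being held for about 3 s; with a hypothetical $W \approx 1$ s, it would instead take a burst of about 30 req/s. A connection that is never released keeps adding to $L$ indefinitely.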

How can I deal with this in HyperDrive? Can anyone give me some advice?

I'm using PostgreSQL + TimescaleDB + HyperDrive for real-time analytics.

This is the error I'm getting right now:

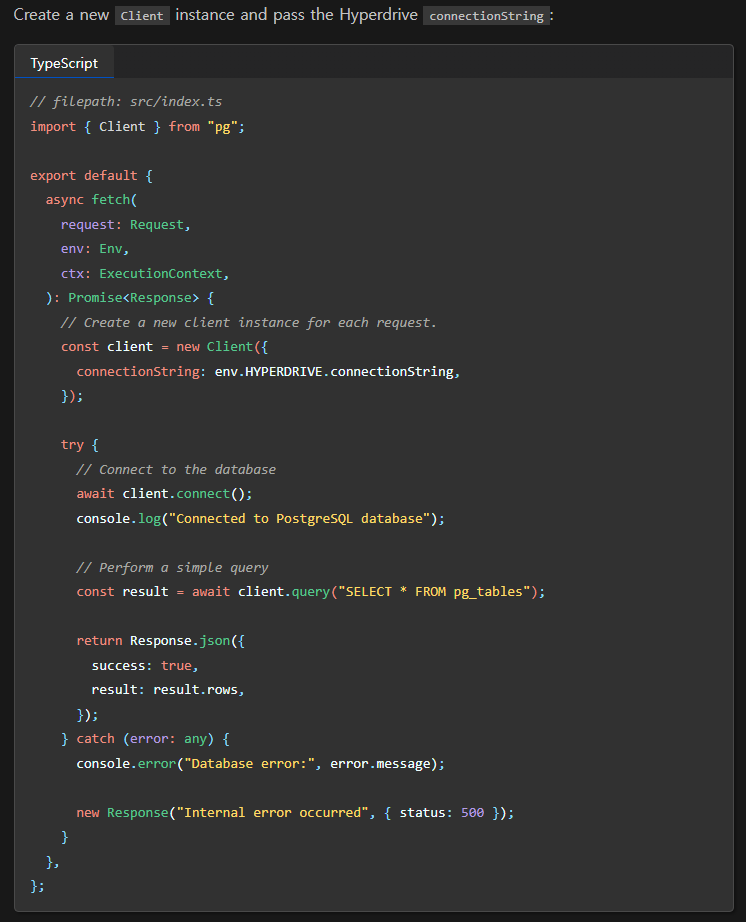

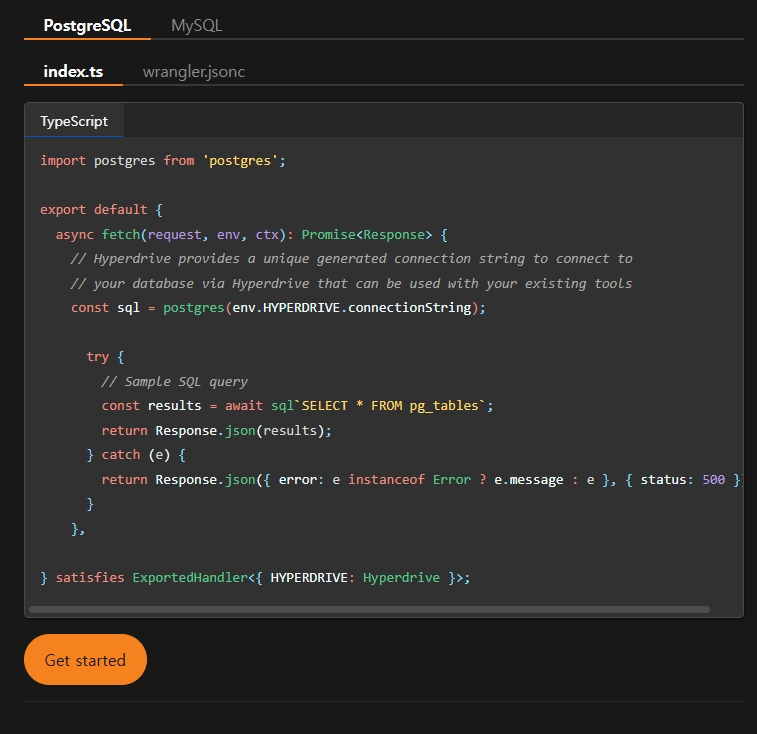

This is the test code I'm using:

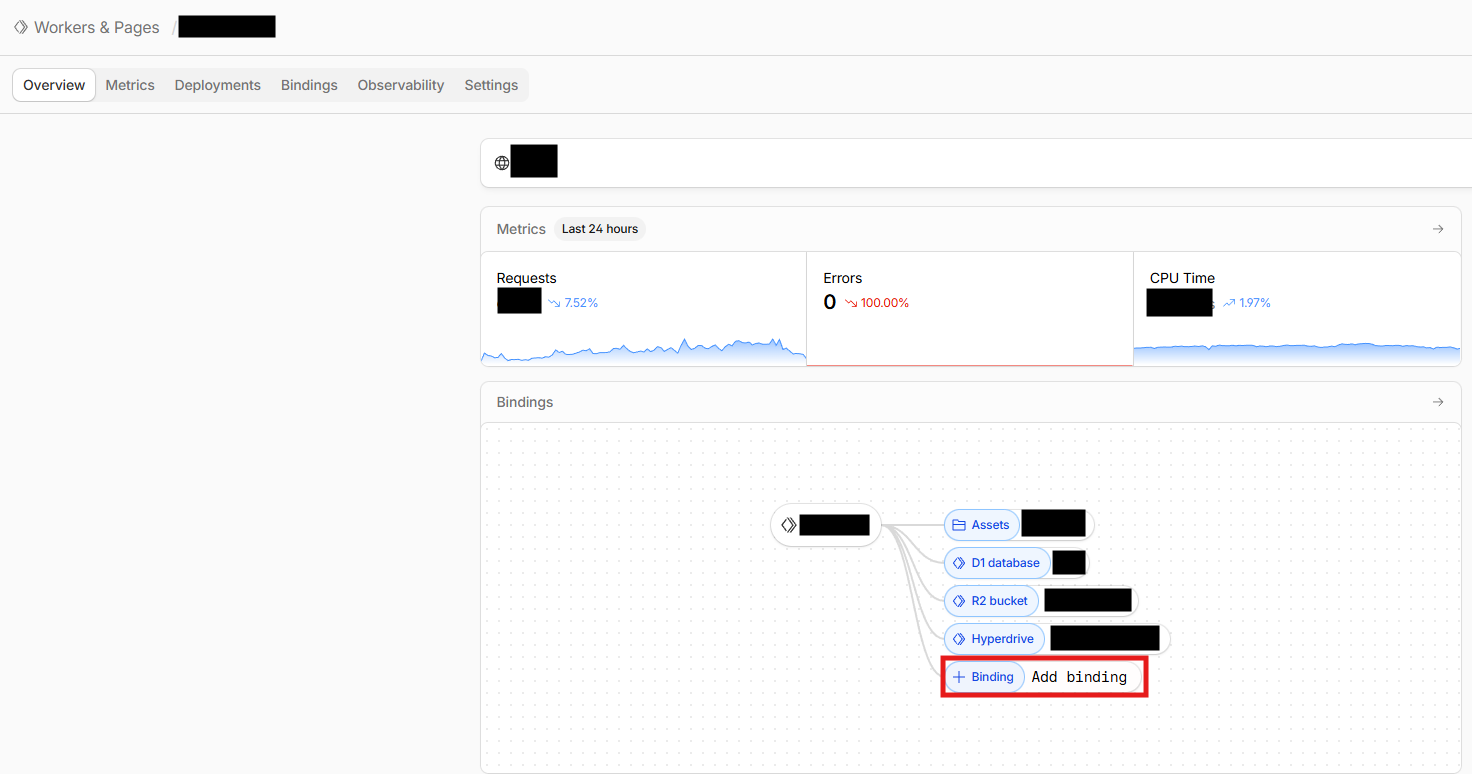

I'm quite sure this problem comes from HyperDrive, because the errors increased after I deployed the test.

Okay, I think I've finally figured out this error: the connection limit was the problem.

After I manually closed the connection pool, all the errors went away and inner peace came to me.

The problem was that node-postgres didn't fully close the connection pool when the Worker finished handling the request.
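The fix described above boils down to always ending the client (or pool) once the request's work is done, even when the query fails. Here is a minimal sketch of that pattern, assuming a Workers-style `waitUntil` callback. `DbClient`, `WaitUntil`, and `queryAndClose` are hypothetical names; the interface only mirrors the `connect()`/`query()`/`end()` methods that node-postgres's `Client` provides, so the sketch runs without `pg` installed.

```typescript
// Hypothetical stand-in for the parts of a Postgres client this sketch uses.
// node-postgres's Client has connect(), query(), and end(); the single-string
// query signature here is a simplification.
interface DbClient {
  connect(): Promise<void>;
  query(text: string): Promise<{ rows: unknown[] }>;
  end(): Promise<void>;
}

// Same shape as ctx.waitUntil in a Workers fetch handler.
type WaitUntil = (promise: Promise<unknown>) => void;

// Opens a client, runs one query, and always schedules client.end(),
// whether the query succeeds or throws, so the connection is not left open
// after the request.
async function queryAndClose(
  makeClient: () => DbClient,
  sql: string,
  waitUntil: WaitUntil,
): Promise<unknown[]> {
  const client = makeClient();
  try {
    await client.connect();
    const result = await client.query(sql);
    return result.rows;
  } finally {
    // Hand the close to waitUntil instead of awaiting it here, so the
    // caller gets its result without waiting for teardown.
    waitUntil(client.end());
  }
}
```

In a Worker, a call might look like `queryAndClose(() => new Client({ connectionString: env.HYPERDRIVE.connectionString }), "SELECT 1", (p) => ctx.waitUntil(p))`, with `Client` imported from `pg` and `HYPERDRIVE` standing in for whatever binding name your Wrangler config declares (an assumption). A `Pool` can be handled the same way, since its `end()` also returns a promise. Passing the close to `waitUntil` lets the runtime finish closing the connection after the response has been sent.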

I found this issue here, but it looks like it's neither fixed nor documented. The Cloudflare docs should mention closing the pool connection once the task is complete.

HyperDrive is a great product, but it needs more detailed documentation. You can see this in the example code: the docs don't close the connection after the task completes, which will cause big problems above 5 req/s.

Okay, fine: it turns out this was left out of a documentation update.

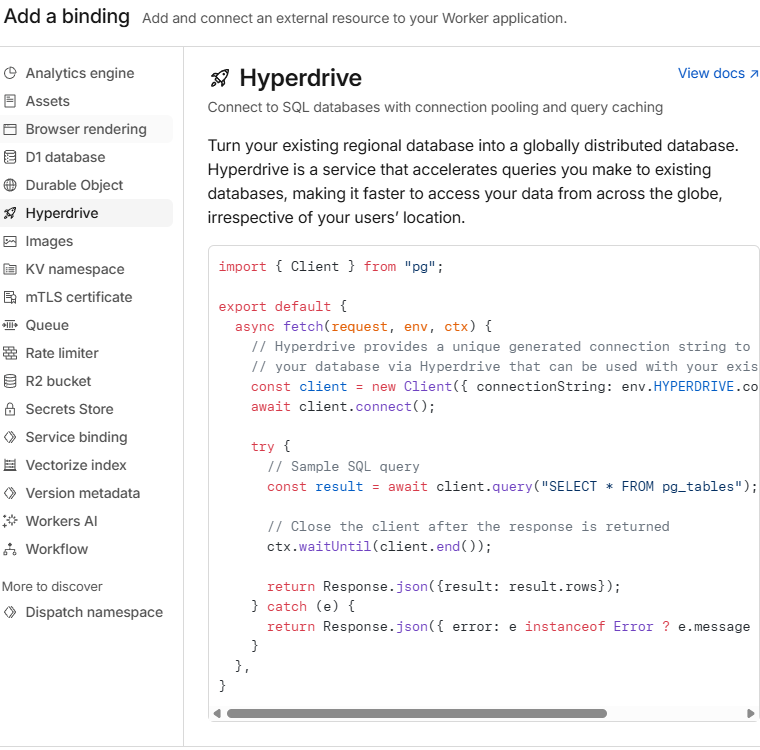

I found that the HyperDrive example in the dashboard closes the connection correctly.

But the example in the Cloudflare docs is outdated.

Maybe I'll open a pull request later, but not right now since I'm short on time.

Where is this from? I don't see it in my dashboard.

It's in the "Workers & Pages" modal. You can open it with the "Add bindings" button.