Yobin

RunPod

•Created by Yobin on 4/23/2025 in #⚡|serverless

"type must be one of the following values: QUEUE, LOAD_BALANCER" when I clone an endpoint

15 replies

RunPod

•Created by Yobin on 4/23/2025 in #⚡|serverless

"type must be one of the following values: QUEUE, LOAD_BALANCER" when I clone an endpoint

Thank you so much, I hope it gets fixed. We really need this feature badly.

15 replies

RunPod

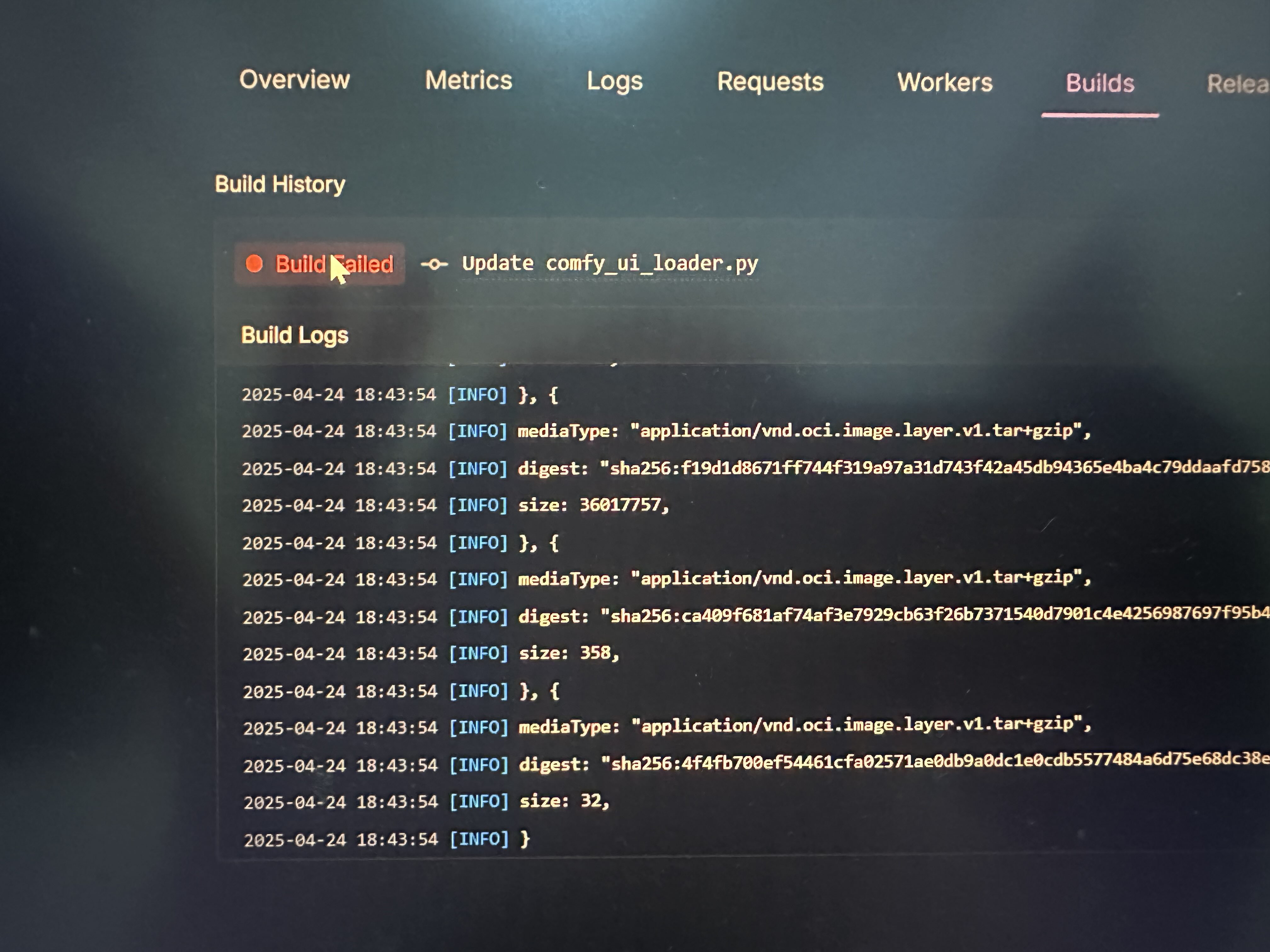

•Created by Yobin on 4/24/2025 in #⚡|serverless

Stuck at waiting for build

Failed again, and I didn't even change anything.

19 replies

RunPod

•Created by Yobin on 4/24/2025 in #⚡|serverless

Stuck at waiting for build

Rebuilding.

19 replies

RunPod

•Created by Yobin on 3/17/2025 in #⚡|serverless

Can you now run Gemma 3 in the vLLM container?

Ollama

25 replies

RunPod

•Created by Yobin on 3/17/2025 in #⚡|serverless

Can you now run Gemma 3 in the vLLM container?

I used Ollama.

25 replies

RunPod

•Created by ammar on 3/17/2025 in #⚡|serverless

Ollama serverless?

Is it as good as the RunPod vLLM template in terms of performance and concurrency?

7 replies

RunPod

•Created by Yobin on 3/17/2025 in #⚡|serverless

Can you now run Gemma 3 in the vLLM container?

I used the preset vLLM template. Llama 3.2b worked, but the new Gemma 3 didn't.

25 replies

RunPod

•Created by Yobin on 3/17/2025 in #⚡|serverless

Can you now run Gemma 3 in the vLLM container?

I deleted it, but as far as I know it's because Gemma 3 is a new model, so the transformers version in the container is relatively outdated?

25 replies
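If the cause really is an outdated transformers package, as guessed in the Gemma 3 thread, a quick way to confirm it is to print the installed version from inside the container. Below is a minimal sketch in Python. It assumes Gemma 3 support first shipped in transformers 4.50.0 (an assumption worth checking against the transformers release notes), and the helper names `parse_release`, `supports_gemma3` and `installed_transformers` are hypothetical. The vLLM version itself also matters, since vLLM needs its own support for a model architecture, so a new enough transformers alone may not be sufficient.

```python
from __future__ import annotations

from importlib.metadata import PackageNotFoundError, version

# Assumption: Gemma 3 support first shipped in transformers 4.50.0.
# Verify this against the transformers release notes before relying on it.
MIN_TRANSFORMERS_FOR_GEMMA3 = (4, 50, 0)


def parse_release(ver: str) -> tuple[int, ...]:
    """Keep only the leading numeric parts, e.g. '4.50.0.dev0' -> (4, 50, 0).

    Pre-release tags such as 'dev0' or 'rc1' are ignored, which is good
    enough for a rough compatibility check.
    """
    parts: list[int] = []
    for piece in ver.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break  # a purely non-numeric piece like 'dev0' ends the release part
        parts.append(int(digits))
        if len(digits) != len(piece):
            break  # e.g. '0rc1': keep the 0, drop the tag and anything after it
    return tuple(parts)


def supports_gemma3(ver: str) -> bool:
    """True if the version is at or above the assumed minimum."""
    release = parse_release(ver)
    release = release + (0,) * (3 - len(release))  # pad '4.50' to (4, 50, 0)
    return release >= MIN_TRANSFORMERS_FOR_GEMMA3


def installed_transformers() -> str | None:
    """Return the installed transformers version, or None if it is missing."""
    try:
        return version("transformers")
    except PackageNotFoundError:
        return None


if __name__ == "__main__":
    installed = installed_transformers()
    if installed is None:
        print("transformers is not installed in this environment")
    elif supports_gemma3(installed):
        print(f"transformers {installed} should be new enough for Gemma 3")
    else:
        print(f"transformers {installed} is likely too old for Gemma 3")
```

Running it inside the worker image (for example from a pod started with the same image) shows which case you are in before rebuilding anything.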