Optimizing Firecrawl API for Extracting Embedding-Ready Data from GitHub Markdown Repos

I'm looking for advice on effectively using the Firecrawl API to process Markdown files from a GitHub documentation repo, with the goal of generating JSON outputs that can be used for creating embeddings. I've noticed that the default metadata extraction is somewhat limited, and I suspect that I may not be setting the parameters optimally. To improve metadata quality, I'm considering using a custom scrape prompt but am unsure of the best approach.

Specifically, I’m curious about:

Crawling Strategy: Should I have Firecrawl crawl the entire repository at once, or would it be more effective to process individual files separately for extracting meaningful content?...

long-page screenshot

Hi - do you have a technique for taking a screenshot (or multiple screenshots) of a long page - eg: scrolling down to get all of the content.

similar to the go-full-page chrome extension: https://chromewebstore.google.com/detail/gofullpage-full-page-scre/fdpohaocaechififmbbbbbknoalclacl...

html element crawl

Hi there, is it possible to hit the particular element in the webpage using crawl method i.e i want to get the content of all the pages inisde <div id="readable"></div> -- Right now crawl param does starts from very start that doesnot mean anything important...

LLM scrape all links in a website

I'm trying to get the below data based on a link:

1. Business details

- Name

- Email

- Contact info...

Cannot fully crawl https://ordwaylabs.stoplight.io/

Using the map endpoint on the playground returns only 9 results (despite first showing a "This job contains over 500 documents" warning). I also tried this website from FireCrawl's Python API and it only returns 4 results. I'm expecting many, many more.

Issue building docker image for self-hosting

Hi, I'm following the documentation for self-hosting setup, but running into the following issue:

``` => CANCELED [api build 1/4] RUN --mount=type=cache,id=pnpm,target=/pnpm/store pnpm install --frozen-lockfile 30.5s

=> ERROR [api prod-deps 1/1] RUN --mount=type=cache,id=pnpm,target=/pnpm/store pnpm install --prod --frozen-lockfile 30.5s

------...

scrape confluence and portal

Hi all

I want to scrape my company’s internal confluence pages to use in a RAG app.

I also want to scrape our research portal that sits behind a paywall.

How can I do this please?...

Not able to Crawl the website using Include Paths(Filter).

Hi,

I am trying to crawl the webpages from this domain https://www.peigenesis.com/ using path filters.

But i am not able to get the associated web pages with that path. I have been trying it from a long time. Can someone pls help me.

...

Rate Limit hit

Hi guys, I'm trying to scrape certain components and add it into my LLM. However, i keep encountering rate limits even after adding a delay of 1minute. Any ideas?

```

// Attempt to scrape the shadcn-vue components from the website using firecrawl

import FirecrawlApp from '@mendable/firecrawl-js';...

crawlUrlAndWatch working on local but fails on Production

I am using crawlUrlAndWatch to crawl through domains, it works perfectly fine in my local system but it fails in production. The error I get is:

SyntaxError: The URL's protocol must be one of "ws:", "wss:", or "ws+unix:"

The frustrating part is that the crawl gets registered in my activity and platform charges the credits for that action. It's breaking my production and charging me for it. Any solutions please?

...

Cannot scrape this website

Hi,

I'm trying to scrape this website:

https://www.eurosport.com/football/serie-b/standings.shtml

...

Scraping SPA didnt work

https://portal.iclasspro.com/emlerhouston/classes

didnt get the page content - even with 5sec wait. any tips?...

Map didn't work for this link

Hey! This map feature works for several dentist websites I tested. However, it does not work for this website, which clearly has many pages.

mississippismilesdentistry.com...

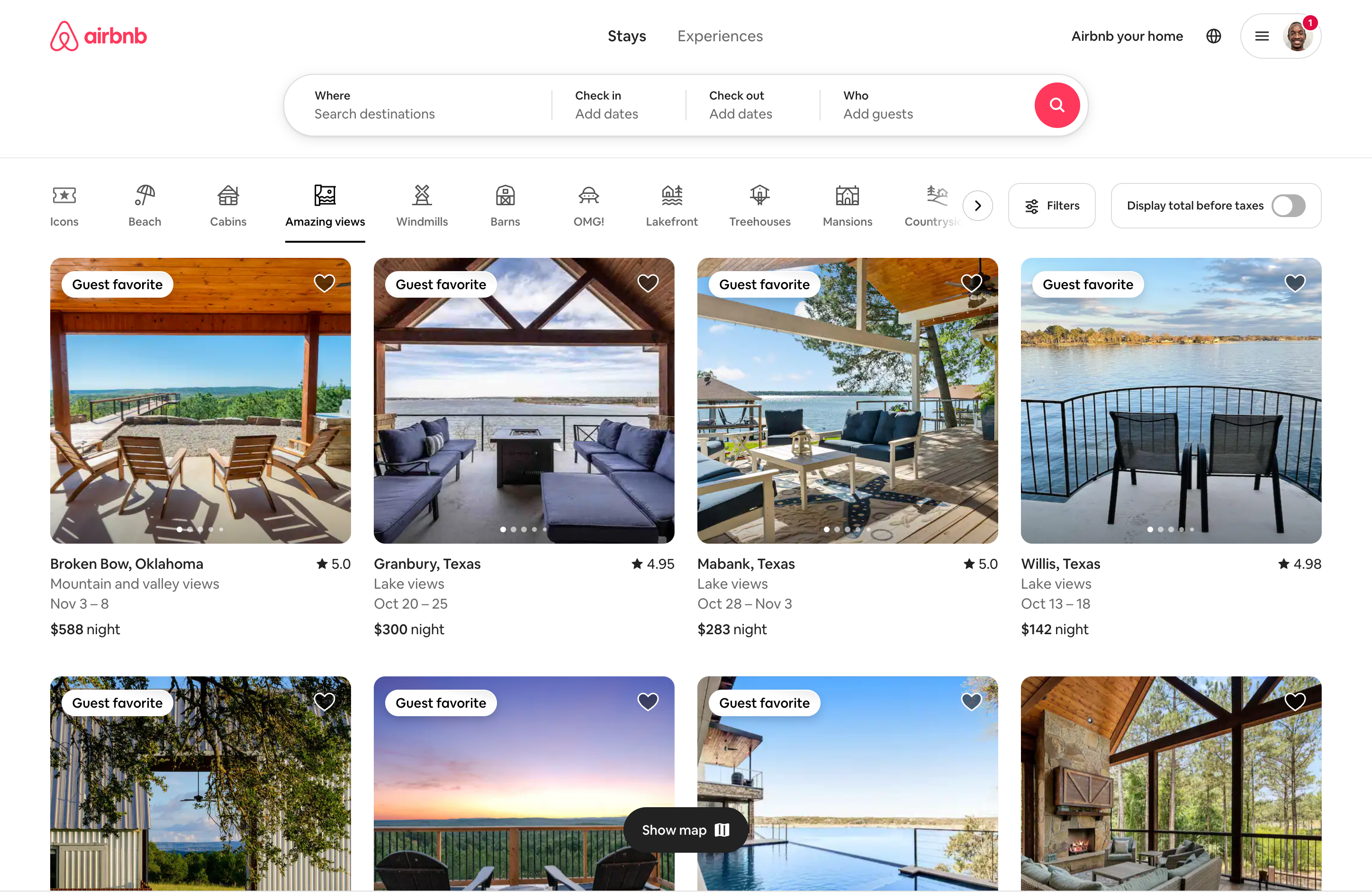

Proper workflow for single page apps that don't retain URL state

Hey team 👋🏿 I'm curious on the suggested workflow for single page apps that don't retain URL state. Take this Airbnb UI for example: how best should I traverse through each category like

Beach, Cabins, etc. and then scrape what's in the DOM at that point?

My only thought is to take advantage of actions and make a request after each button click on the filter but this means that i'd make x amount of requests for however many filter tags there are....

POST requests for /scrape endpoint

Hey guys , just a quick question. I was wondering if firecrawl also supports POST requests on the/scrape endpoint? I can see GET requests are supported but i'm curious if POST are as well

Self-host crawling return empty string

Try my website: https://intelidexer.com

With playground, it gets it perfectly.

With self-host, it returns with empty hands 😦...

I am trying to login to Amazon.com for my users and then crawl the orders.

Is htere a way to find the login button, click it, find username field, add UN from API, find the password field, add PW from API and then hit login using FireCrawl before navigating to the orders page?

Javascript doesn't load

Hello,

I'm not sure why but when I'm trying to scrape the following URL: https://www.4tharq.com/cart/48712001913165:1,48687198339405:1?checkout[email]=julie@smith.com&checkout[shipping_address][first_name]=Julie&checkout[shipping_address][last_name]=Smith&checkout[shipping_address][address1]=16%20Clare%20Street&checkout[shipping_address][city]=Dublin%202&checkout[shipping_address][zip]=D02TY72&checkout[shipping_address][country]=Ireland&checkout[shipping_address][province]=Dublin

It doesn't fill the form and then loads some javascript that should be loaded, could anyone help me please?

...

Scraping getting wrong data.

Hello guys im scrapping this website https://bluebungalow.com.au/products/nala-pink-floral-layers-dress and it returns the data for US and not for AU. In postman its working fine but when I call the api in my .net app i always get the data for US. I have the same page options and url in postman and in the app.