Not getting back the favicon icon as expected

https://www.firecrawl.dev/app/playground?url=https%3A%2F%2Fdocs.emplifi.io%2Fplatform%2Flatest%2Fhome%2F&mode=scrape&limit=10&excludes=&includes=&formats=markdown&onlyMainContent=true&excludeTags=&includeTags=&includeSubdomains=true&mapSearch=&uniqueKey=1732131665066

The response includes a rectangular SVG, but I don't see the favicon anywhere (the icon I see next to a tab's title in a browser).

```
"ogImage": "https://docs.emplifi.io/__assets-853f5193-e996-4d6b-8e42-47a63d0c2dd9/image/logo-svg.svg",...
```

Exclude subdomain from being scraped

Is it possible to exclude a subdomain when scraping a website?

I have a client site I would like to scrape, but they recently added a subdomain which lists specific products/services not directly related to their main service.

e.g...
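
One generic way to approach this, independent of whatever crawl options the service offers, is to filter the discovered URLs by hostname before scraping them. The sketch below is only an illustration under that assumption: `filter_urls`, `is_excluded`, and the `example.com` hosts are hypothetical names, not part of any Firecrawl API.

```python
from urllib.parse import urlsplit


def is_excluded(url: str, excluded_hosts: set[str]) -> bool:
    """Return True if the URL's host equals an excluded host or is nested under one."""
    host = (urlsplit(url).hostname or "").lower()
    # Match on a dot boundary so "notshop.example.com" is not treated
    # as part of "shop.example.com".
    return any(host == h or host.endswith("." + h) for h in excluded_hosts)


def filter_urls(urls, excluded_hosts):
    """Keep only the URLs whose host is not on the exclusion list."""
    excluded = {h.lower() for h in excluded_hosts}
    return [u for u in urls if not is_excluded(u, excluded)]


if __name__ == "__main__":
    candidates = [
        "https://example.com/services",
        "https://shop.example.com/products/1",
    ]
    print(filter_urls(candidates, ["shop.example.com"]))
```

Filtering on the parsed hostname rather than on a substring of the URL avoids accidentally dropping pages whose path happens to contain the subdomain's name.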

Running locally: "The engine used does not support the following features: waitFor"

I'm running the latest version from GitHub on localhost, and I can't use the v1 scrape with "waitFor". I get the warning:

"The engine used does not support the following features: waitFor -- your scrape may be partial." The log says "scraping via fetch". Which engines are at play here, and how do I use them? I can't find a parameter for that....

Webhook V0 doesn't work

Hello,

I had been using the V0 webhook for weeks and it was working well, but I noticed yesterday that it isn't working anymore: I'm not receiving any requests at the endpoint specified in the website settings...

Markdown includes ASCII, Unicode, and unreadable HTML

See Crawl data attached for reference. Thank you!

only cookies in scrape results

I'm encountering challenges with cookies while scraping and crawling various websites. For many websites, instead of retrieving the actual content, I am only able to extract cookie-related information or consent texts, without any meaningful content. This significantly affects the effectiveness of the scraping process.

Following previous advice (as discussed in this Discord thread https://discord.com/channels/1226707384710332458/1226707384710332465/1251760606504288388), I have excluded only the most apparent tags associated with cookie prompts. Unfortunately, this approach has not been entirely effective, as cookie prompts still obscure much of the main content. Interestingly, when I test the scraping on Playground, I achieve excellent results on the same websites.

If there’s a more recent solution or method for effectively handling these cookie prompts, I’d be eager to learn more about it....

account upgraded but limit still 10/min

I just upgraded my account so I can run more tests, but I still seem to be stuck at 10/min. Does it take some time to update, or do I need to recreate the key?

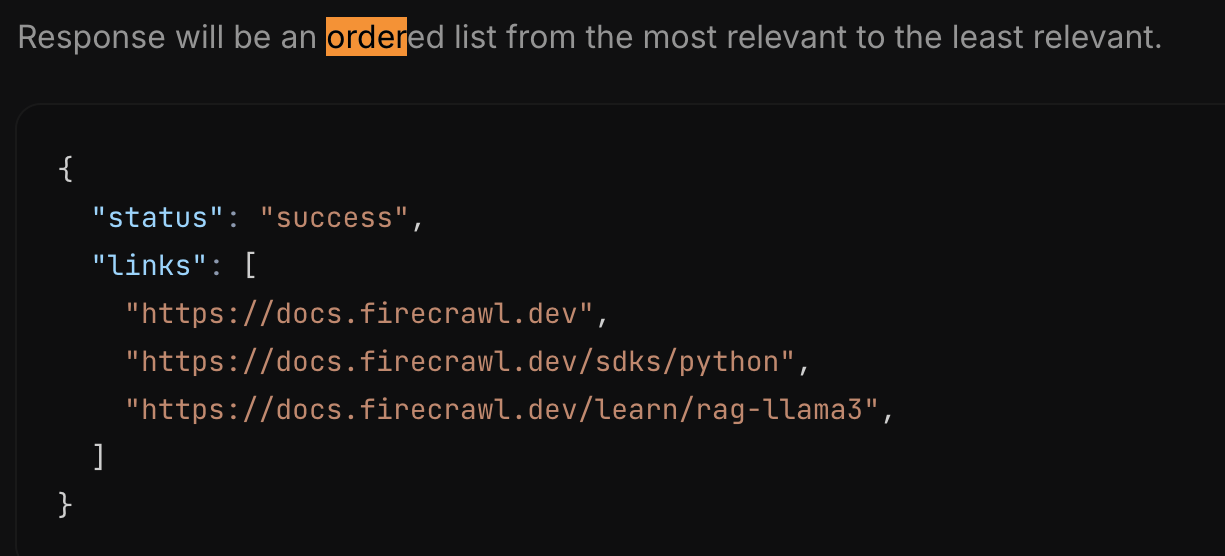

Python API doesn't provide nice way to iterate over crawled data

Checking the crawling status (https://docs.firecrawl.dev/sdks/python#checking-crawl-status) gives a "next" link (e.g. https://api.firecrawl.dev/v1/crawl/789e6a93-81b6-44f5-9f0e-67f6263059e8?skip=0), but there doesn't appear to be a way to use the FirecrawlApp to fetch this data and instead I need to use a separate Python library like "requests" to make the request.

Am I missing something?...
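
Until the SDK offers this, the "next" links can be followed with the standard library alone. This is a rough sketch, not the SDK's behavior: it assumes each status response is JSON with a `data` list and an optional `next` URL, and that the API accepts a Bearer token in the `Authorization` header. `fetch_json` and `iter_crawl_documents` are hypothetical helper names.

```python
import json
from typing import Callable, Iterator
from urllib.request import Request, urlopen


def fetch_json(url: str, api_key: str) -> dict:
    """GET a URL and decode the JSON body (assumes Bearer-token auth)."""
    req = Request(url, headers={"Authorization": f"Bearer {api_key}"})
    with urlopen(req) as resp:
        return json.load(resp)


def iter_crawl_documents(first_url: str, fetch: Callable[[str], dict]) -> Iterator[dict]:
    """Yield every document, following 'next' links until none is left."""
    url = first_url
    seen = set()  # guard against a 'next' link that loops back
    while url and url not in seen:
        seen.add(url)
        page = fetch(url)
        # Assumption: documents live under a 'data' key in each page.
        yield from page.get("data", [])
        url = page.get("next")
```

With a real key this could be used as `for doc in iter_crawl_documents(next_url, lambda u: fetch_json(u, api_key)): ...`. Passing the fetch function in keeps the paging logic separate from the HTTP client, so it can be swapped or tested without network access.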

batch scrape repeating results

I shared this on X this morning, but batch scrape seems to be failing for me today. I send three URLs and I get three results, but they're all for the same URL (usually the first). I am using the cloud API.

There is a gist here:

https://gist.github.com/kristoph/ee658b7d7fe0ea16a1d435a069be8295

...
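
A quick way to confirm this kind of symptom is to compare the URLs that were requested with the source URLs reported in the results. The sketch below assumes the source URL of each result has already been pulled out into a plain list; where that field lives in the response is not shown here, and `check_batch_results` is a hypothetical helper.

```python
from collections import Counter


def check_batch_results(requested, returned):
    """Return (missing, duplicated) URLs for a batch of results.

    requested: URLs that were submitted.
    returned:  source URLs reported by the results, one per result.
    """
    counts = Counter(returned)
    # Requested URLs that no result claims to come from.
    missing = [u for u in requested if counts[u] == 0]
    # URLs that show up in more than one result.
    duplicated = sorted(u for u, n in counts.items() if n > 1)
    return missing, duplicated


if __name__ == "__main__":
    sent = ["https://a.example", "https://b.example", "https://c.example"]
    got = ["https://a.example", "https://a.example", "https://a.example"]
    print(check_batch_results(sent, got))
```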

Bug with auto-recharge credits

Hi, it looks to me like auto-recharge credits are getting consumed over "normal" account credits, meaning that the account never even gets within 1000 credits of the limit before getting charged again. If the auto-recharge credits roll over, it should consume the monthly credits first and then the recharge credits... or you should be allowed to wait to recharge until the account is completely out of credits. It is not helpful to have such a huge buffer.
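
To make the requested order concrete, here is a small sketch of "monthly credits first, recharge credits second". It only illustrates the proposal in this post, not how billing is actually implemented; `consume_credits` and the numbers are made up.

```python
def consume_credits(monthly: int, recharge: int, cost: int) -> tuple[int, int]:
    """Spend `cost` credits, draining the monthly balance before the recharge balance.

    Returns the remaining (monthly, recharge) balances.
    """
    if cost < 0:
        raise ValueError("cost must be non-negative")
    from_monthly = min(monthly, cost)
    from_recharge = min(recharge, cost - from_monthly)
    if from_monthly + from_recharge < cost:
        raise ValueError("not enough credits")
    return monthly - from_monthly, recharge - from_recharge


if __name__ == "__main__":
    # 300 monthly credits left, 1000 recharge credits, a job costing 500.
    print(consume_credits(300, 1000, 500))
```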

Params Ignored on Batch Scrape API

Hi! I tried the hosted batch scrape API for the first time today and ran into some issues. The onlyMainContent and timeout params are seemingly ignored when I use the batch scrape method. Are there any other variables that I need to include in the payload to enable these options?

Self-hosting Questions

Before I jump on the hosted product, I like to test locally. Does self-hosted Firecrawl support:

* JavaScript / SPAs? In my testing, it seems not. The results are empty.

* Reading files from arbitrary URIs, including file:///path/to/localFile.pdf? I tried something similar, but clearly wget

Thanks....

Getting "Invalid cookie fields" error

I'm using the Python FireCrawl API and am trying to crawl a website behind authentication. It keeps failing when trying to scrape the first page. Am I doing something wrong?

```
app.async_crawl_url(
"https://portal2.vcinity.io/s/",...
```

How to secure FireCrawl Webhooks?

I am a newbie trying to implement crawling with webhooks. I've set up a simple FastAPI endpoint to receive webhooks from FireCrawl, but I don't understand how to make sure that the webhook endpoint is secure.
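
One generic option that does not depend on any particular webhook sender is to register the webhook URL with a long random secret in its query string, serve it over HTTPS, and reject any request that does not carry that secret. The sketch below is framework-agnostic and purely illustrative: the `token` parameter name and `has_valid_token` are assumptions, not a FireCrawl feature.

```python
import hmac
from urllib.parse import parse_qs, urlsplit


def has_valid_token(request_url: str, expected_token: str) -> bool:
    """Check the 'token' query parameter against the expected secret."""
    query = parse_qs(urlsplit(request_url).query)
    supplied = query.get("token", [""])[0]
    # compare_digest runs in constant time, which avoids leaking how many
    # leading characters of the secret were guessed correctly.
    return hmac.compare_digest(supplied.encode(), expected_token.encode())


if __name__ == "__main__":
    secret = "s3cret"  # in practice, load a long random value from config
    print(has_valid_token("https://example.com/hook?token=s3cret", secret))
    print(has_valid_token("https://example.com/hook", secret))
```

In a FastAPI handler, the same check could run at the top of the endpoint and return a 401 or 403 response when it fails.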

Invalid PDF structure

I'm using the crawl endpoint and one of the URLs it discovered is https://www.gamweb.com/assets/files/lsk.pdf. However, I get an "Invalid PDF structure" error when the page is scraped by FireCrawl. I can see why, since it's a webpage with an embedded PDF instead of a raw PDF as the URL implies. Still, I do think that FireCrawl should be able to handle this gracefully.
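
As a client-side workaround, the response body can be sniffed before it is handed to a PDF parser: a raw PDF starts with the `%PDF-` header, while an HTML wrapper page does not. This is a minimal sketch of that check, not FireCrawl's logic; `classify_payload` is a hypothetical helper and the HTML test is deliberately crude.

```python
def classify_payload(body: bytes) -> str:
    """Guess whether a response body is a raw PDF, an HTML page, or something else."""
    head = body[:1024].lstrip()
    if head.startswith(b"%PDF-"):
        return "pdf"
    lowered = head.lower()
    if lowered.startswith(b"<!doctype html") or lowered.startswith(b"<html"):
        return "html"
    return "unknown"


if __name__ == "__main__":
    print(classify_payload(b"%PDF-1.7\n..."))
    print(classify_payload(b"<!DOCTYPE html><html><body>viewer</body></html>"))
```

Checking the bytes rather than the `.pdf` extension or the `Content-Type` header covers cases like this one, where the URL suggests a PDF but the server returns a page.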

is there a way i can screenshot the mobile view of the site?

is there a way i can screenshot the mobile view of the site?

Cannot access bull dashboard when running worker

I'm self-hosting and have built a Docker image. When running without the FLY_PROCESS_GROUP env variable (set to worker), I can access the dashboard; however, jobs do not process because there is no worker. When I set the FLY_PROCESS_GROUP variable, the jobs process but the dashboard is not accessible.