Scraping sites built with Framer.com

/extract enpoint metadata per url

Change Tracking

API Extract not getting Up to Date / Live data - Lots of Lag Time

/scrape Endpoint fails with 500, can i see the error message somewhere in the dashboard?

Able to scrap markdown but not json

PDF with FIRE-1 agent

batch scrape

Removing 1000 limit cap on /map function

Filter Search (between two specific dates)

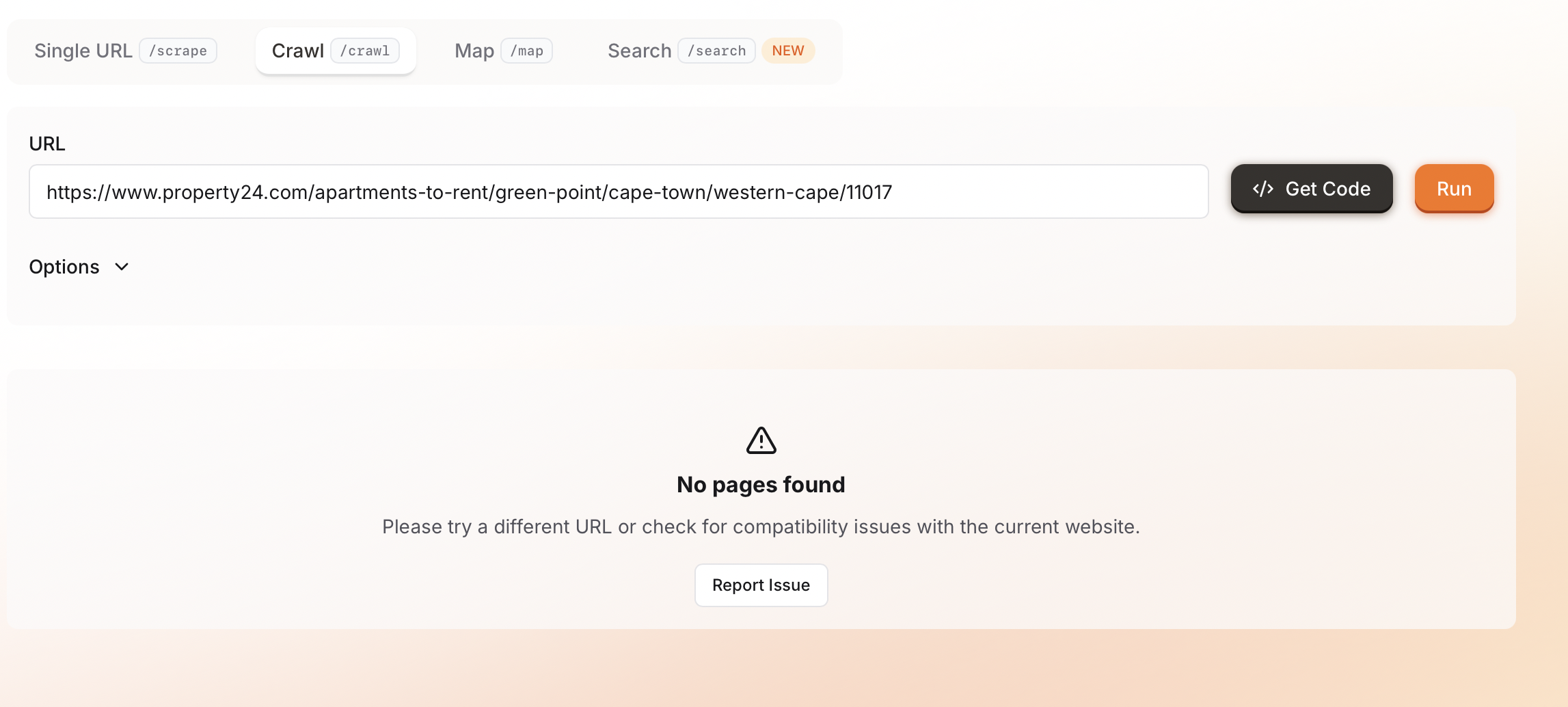

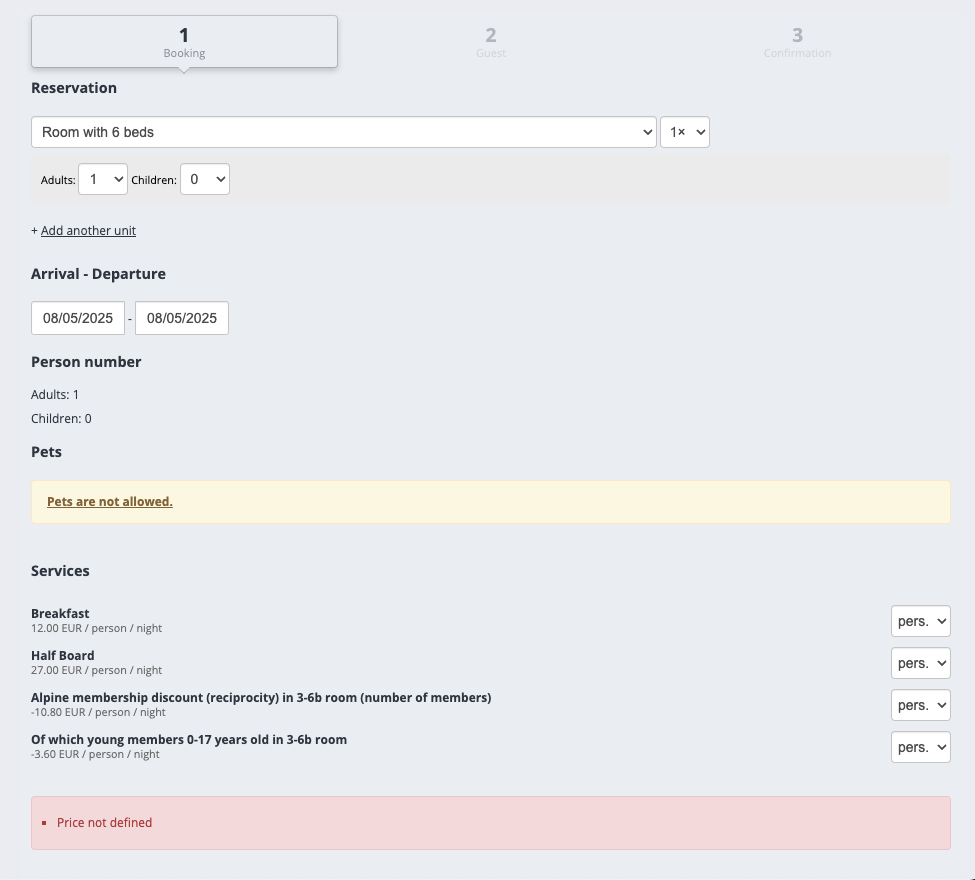

Crawling available bookings

A crawl on an ecommerce website with more than 500 products returns only 39 items. Do you know why ?

Crawls are taking a very long time

Make.com LLM module returning "empty"

I am using the LLM module on Make.com is running successfully, but the Output is returning "empty".

Does anyone know why this might be? I tested multiple websites and multiple output formats....

How to return 1 search result with Firecrawl <> Make.com API

Need help for headers and cookies

Anyone know anything about their hosted mcp server?

Crawling + Scraping Classifieds