Error code when trying to create API Key

Prevent crawling of .xlsx / non-text/html pages

How can i set headers when scraping with Python SDK?

/scrape works perfectly if including screenshot for some links but errors if not

Can’t get MCP to work with Docker locally. Any tips?

FIRE-1 agent is not able to scrape javascript dynamic content. It is erroring out every time.

402 Errors Randomly Started With Plenty Of Credits Left

Crawl Status Response type not available for import

Botpress

400 Response from /extract using wildcard

Crawl a lead website to create a knowledge base

Agent used up all of my credits...

EXTRACT API ALWAYS GET SAME EMPTY RESPONSE:

Request Timeout in Python SDK uses milliseconds but should be seconds?

Firecrawl API stopped working when using proxy – anyone else experiencing this?

How to set version on the Firecrawl node SDK?

It works when i make axios commands but doesn't when i instantiate the application and then try to execute any jobs. Seems to be maybe a version mismatch. The FirecrawlAppConfig does have a version parameter, but not initialized in the constructor, I don't know if that's something that can be set, with the app but it can be specified in other cases. Crawling doesn't work when using in SDK but works whne using SDK via local host ...

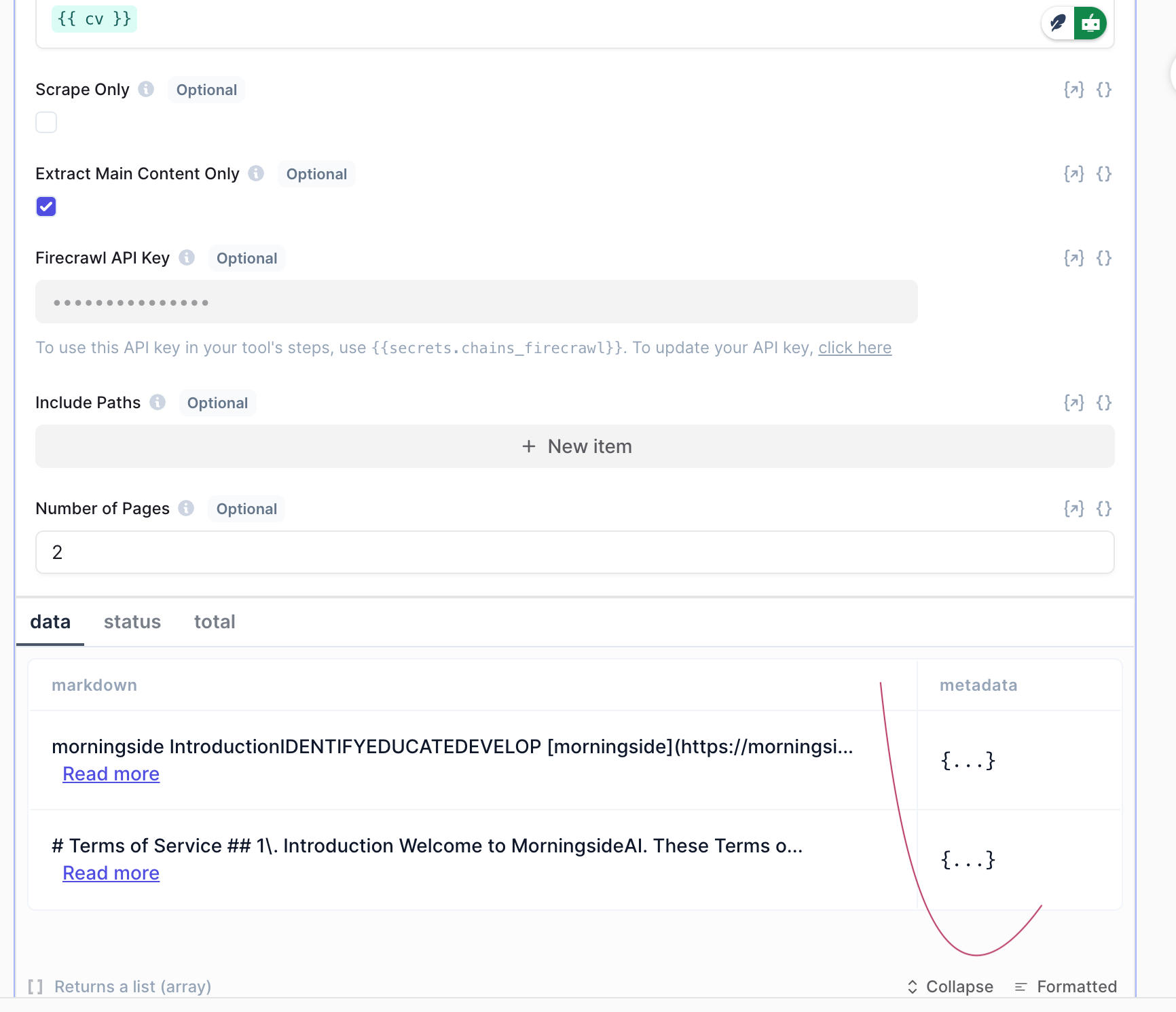

Metadata structure of using firescrawl on relevance.ai

External Webpage Scraping

Using firecrawl crawl inside Make.com