MastraAI

The TypeScript Agent Framework

From the team that brought you Gatsby: prototype and productionize AI features with a modern JavaScript stack.

RAG pipeline example - workflow

Anthropic System Prompt Caching Regression

Agent class in mastra and it seems like in multiple places the providerOptions are not being passed for system role messages.

The workaround would have been to pass the system role messages as part of the message list when .streamVNext is called, as below:
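The original snippet is cut off in this listing. As a rough illustration only, the idea of carrying the system prompt inside the message list could be sketched like this. The `SketchMessage` type and `buildMessages` helper are hypothetical, the `cacheControl` shape follows what the AI SDK Anthropic provider documents, and the final call to `.streamVNext` is an assumption left as a comment rather than a verified signature.

```typescript
// Hypothetical local type: a minimal message shape with per-message provider options.
type SketchMessage = {
  role: "system" | "user" | "assistant";
  content: string;
  providerOptions?: Record<string, Record<string, unknown>>;
};

// Hypothetical helper: place the system prompt in the message list itself,
// so that its providerOptions travel with the message.
function buildMessages(systemPrompt: string, userInput: string): SketchMessage[] {
  return [
    {
      role: "system",
      content: systemPrompt,
      // Anthropic cache-control marker (shape assumed from the AI SDK Anthropic provider docs).
      providerOptions: { anthropic: { cacheControl: { type: "ephemeral" } } },
    },
    { role: "user", content: userInput },
  ];
}

const messages = buildMessages("You are a helpful assistant.", "Hello");

// Assumed usage, not verified against a specific Mastra version:
// const stream = await agent.streamVNext(messages);
```

Keeping the options on the message itself means they do not depend on whichever code path the agent uses for its own system instructions.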

```typescript
...
```

How to address several running instances of a workflow

waitForEvent step:

```
import { createWorkflow, createStep } from "@mastra/core/workflows";
import { z } from "zod";
...
```

Mastra RAG with sources advice needed:

Mastra products

Mastra serverless concurrency

Working Memory Updates Not Always Additive

Why does the persisted Message Thread implementation ignore messages with the System role?

Web Socket Connection Errors in Prod Environment

workflow inputs resulting from a zod intersection are not rendered in the playground

Mastra deploy error - cannot find @mastra/cloud

experimental_output support for other models

Memory (RAM) issues

forEach step that handles each page of the website and examines the HTML / attributes.

With a very large site, I got this error in the Mastra logs (attached).

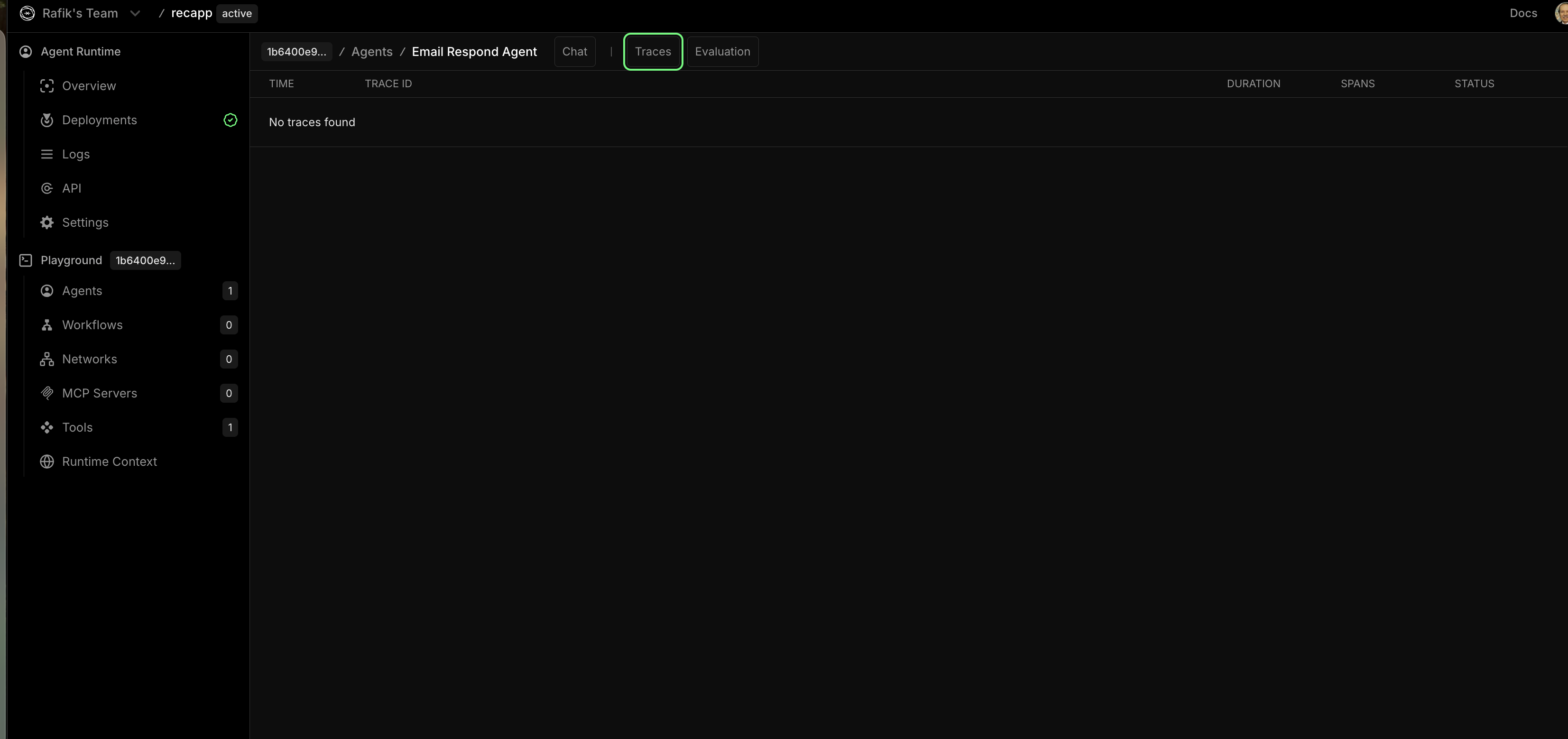

I have a few questions:...

How do the Traces work? (mastra cloud/local)

Mastra vNext Network Stream Format Incompatibility with AI SDK

Logging in the console

Tool calling & Structured output

Support for format: 'aisdk' in streamVNext for mastra/client-js

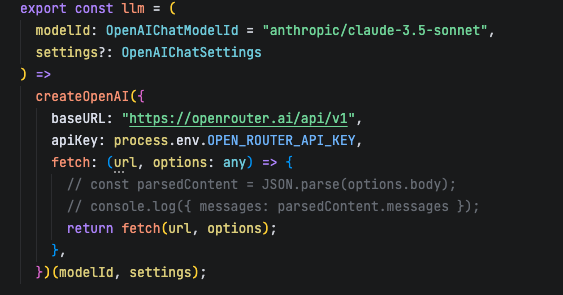

{ format: 'aisdk' } is not supported in client-js.

Streaming reasoning w/ 0.14.1 and ai v5 SDK

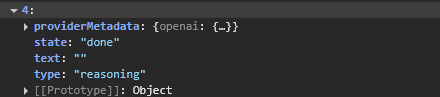

sendReasoning: true to the toUIMessageStreamResponse and do not get any actual reasoning text.

See the screenshot for the in-browser console of the part for reasoning.

I did see this post, but it makes it seem the issue is resolved....
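One way to confirm the symptom described in this post is to scan the message parts on the client for reasoning entries with no text. This is a minimal sketch, assuming reasoning parts carry a `text` field as in AI SDK v5 UI messages; the `SketchPart` type and `emptyReasoningParts` helper are hypothetical and not part of either library.

```typescript
// Hypothetical local types mirroring the `reasoning` and `text` parts of a UI message.
type SketchPart =
  | { type: "reasoning"; text: string }
  | { type: "text"; text: string };

// Returns the indexes of reasoning parts whose text is empty or whitespace only.
function emptyReasoningParts(parts: SketchPart[]): number[] {
  const indexes: number[] = [];
  for (let i = 0; i < parts.length; i++) {
    const part = parts[i];
    if (part !== undefined && part.type === "reasoning" && part.text.trim() === "") {
      indexes.push(i);
    }
  }
  return indexes;
}

// Sample data for illustration only, not taken from the screenshot.
const sampleParts: SketchPart[] = [
  { type: "reasoning", text: "" },
  { type: "text", text: "Final answer." },
];
const emptyIndexes = emptyReasoningParts(sampleParts);
```

A non-empty result suggests the reasoning part arrives but its text is dropped somewhere upstream, which helps narrow down whether the problem sits in the stream or in the rendering.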

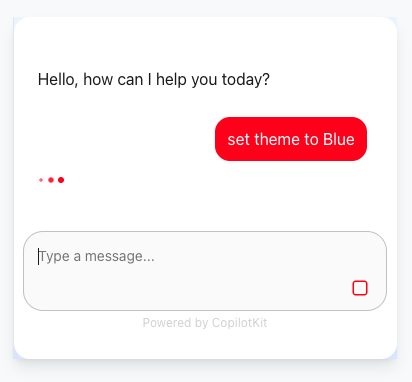

Mastra as Remote Agent for AG-UI using CopilotKit does not seem to be working?