Mastra

The TypeScript Agent Framework

From the team that brought you Gatsby: prototype and productionize AI features with a modern JavaScript stack.

Network Agent subagent stream text

How to handle multi-step branches

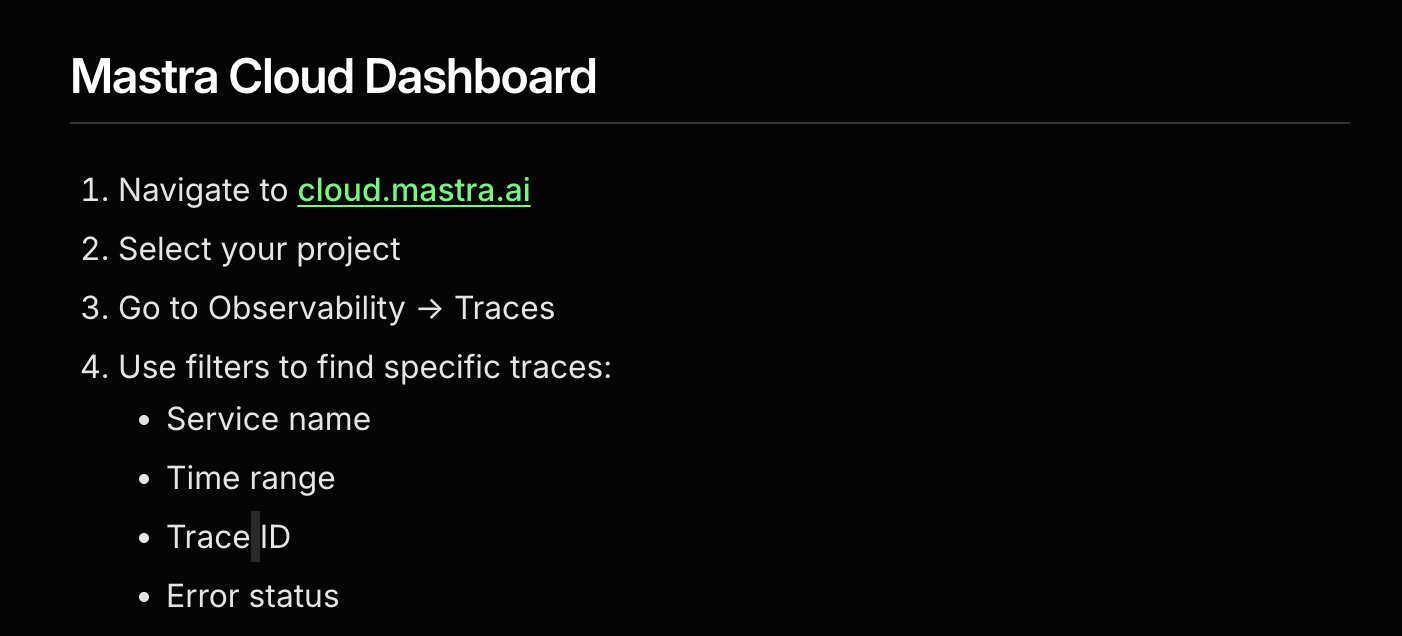

Mastra Cloud: how can I filter traces per run ID?

Prompt caching with dynamic context

Converting messages to AI-SDK format (v1 Client)

When using client.listThreadMessages to retrieve message history, the messages are now returned as MastraDBMessage.

To use them to populate the initial messages in useChat, they need to be converted to AISdkV5 format, because useChat expects the messages to be UI_MESSAGE.

It seems the method needed to do that exists, but is currently not exported (only the chunk-based conversion for streaming is).
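Until such a converter is exported, a hand-rolled mapping is one possible stopgap. The sketch below is only an illustration under stated assumptions: `StoredMessage` is a hypothetical, simplified stand-in for MastraDBMessage (the real type may carry more fields and part kinds), `toUiMessages` is a made-up helper name, and `UiMessageLike` covers only the `id`, `role`, and text `parts` of an AI SDK v5 UI message.

```typescript
// Hypothetical, simplified stand-in for a stored message.
// The real MastraDBMessage shape may differ; adjust the fields accordingly.
type StoredPart = { type: string; text?: string };

interface StoredMessage {
  id: string;
  role: "user" | "assistant" | "system";
  content: { parts: StoredPart[] };
}

// Minimal subset of the AI SDK v5 UI message shape: id, role, and text parts.
interface UiTextPart {
  type: "text";
  text: string;
}

interface UiMessageLike {
  id: string;
  role: "user" | "assistant" | "system";
  parts: UiTextPart[];
}

// Narrow a stored part to one that actually carries text.
function isTextPart(part: StoredPart): part is StoredPart & { text: string } {
  return part.type === "text" && typeof part.text === "string";
}

// Keep only text parts and drop messages that end up with no parts.
// Tool calls, files, and reasoning parts are ignored in this sketch.
function toUiMessages(stored: StoredMessage[]): UiMessageLike[] {
  return stored
    .map((message) => ({
      id: message.id,
      role: message.role,
      parts: message.content.parts
        .filter(isTextPart)
        .map((part): UiTextPart => ({ type: "text", text: part.text })),
    }))
    .filter((message) => message.parts.length > 0);
}
```

The resulting array could then be handed to useChat as its initial messages. Dropping non-text parts is a deliberate simplification here; a real conversion would also need to carry over tool invocations and attachments.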

...

Cannot access Mastra Cloud project UI - React Query error

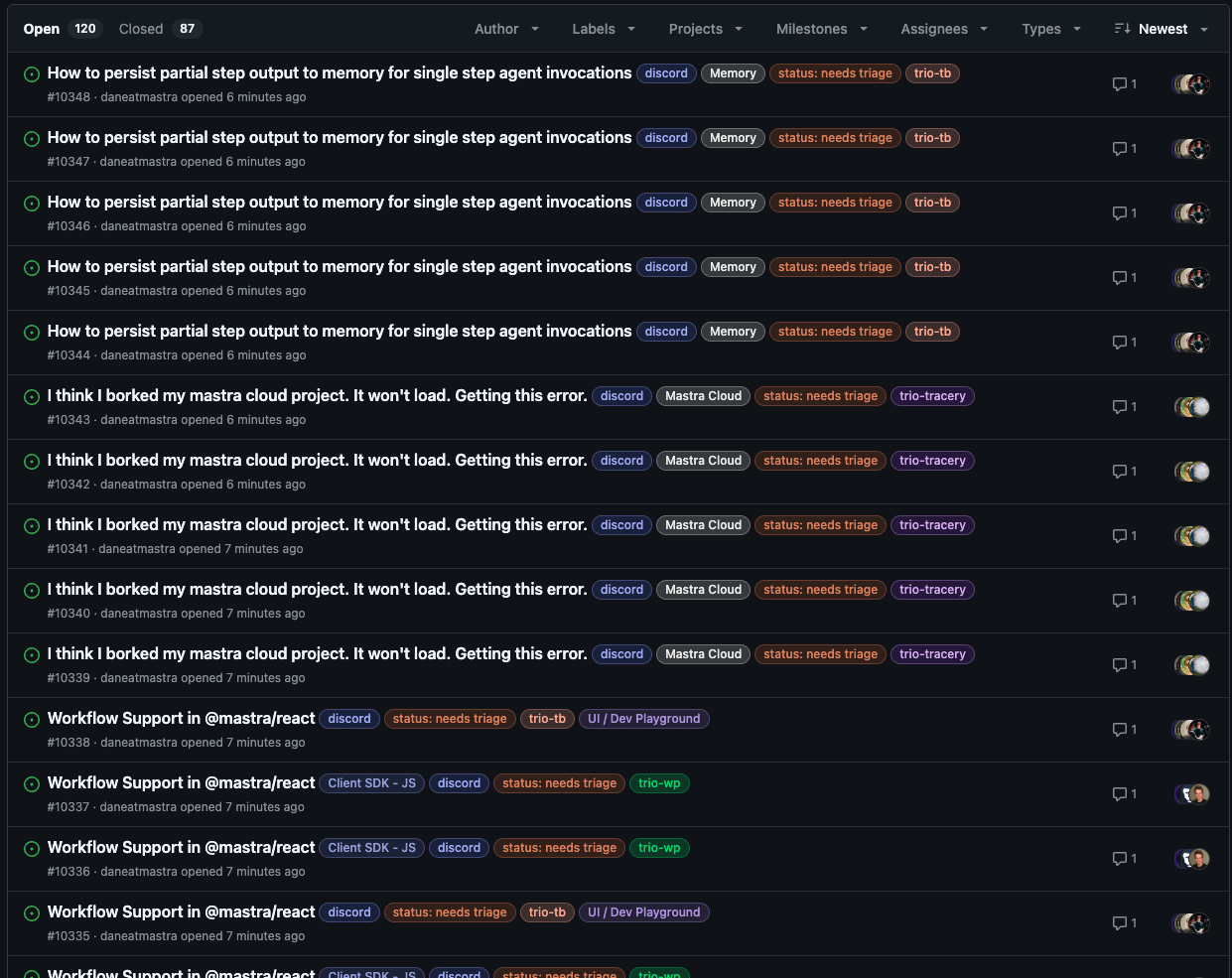

Bug in Dane creates spam

How to persist partial step output to memory for single step agent invocations

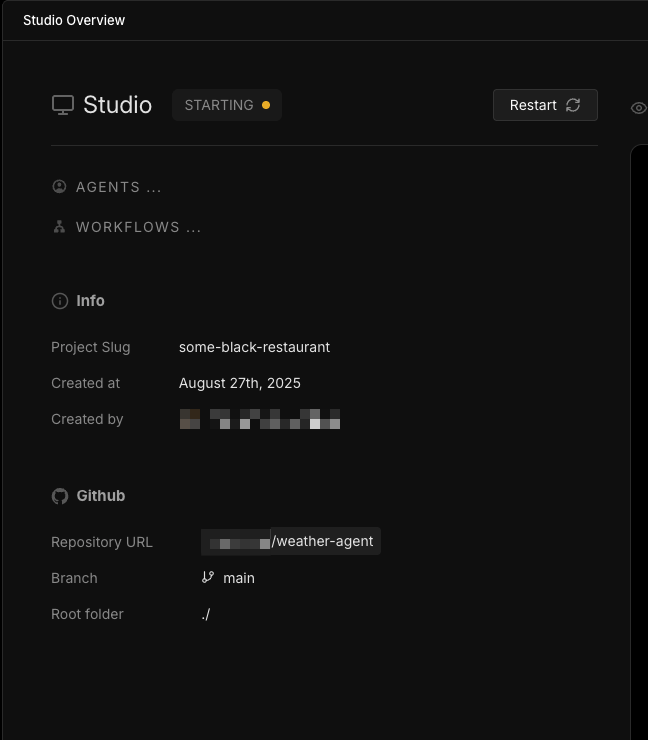

I think I borked my mastra cloud project. It won't load. Getting this error.

Workflow Support in @mastra/react

useChat in @mastra/react (discovered it through this GitHub issue).

I'm glad I found that because that's something I was missing and I already started building a similar hook.

I've got some questions regarding the package:...

Limits of workflows as serverless functions in Next.js

Tool output not being passed back to agent

0.24.1) where a tool’s output isn’t being passed back to the agent during execution.

What’s Happening:...

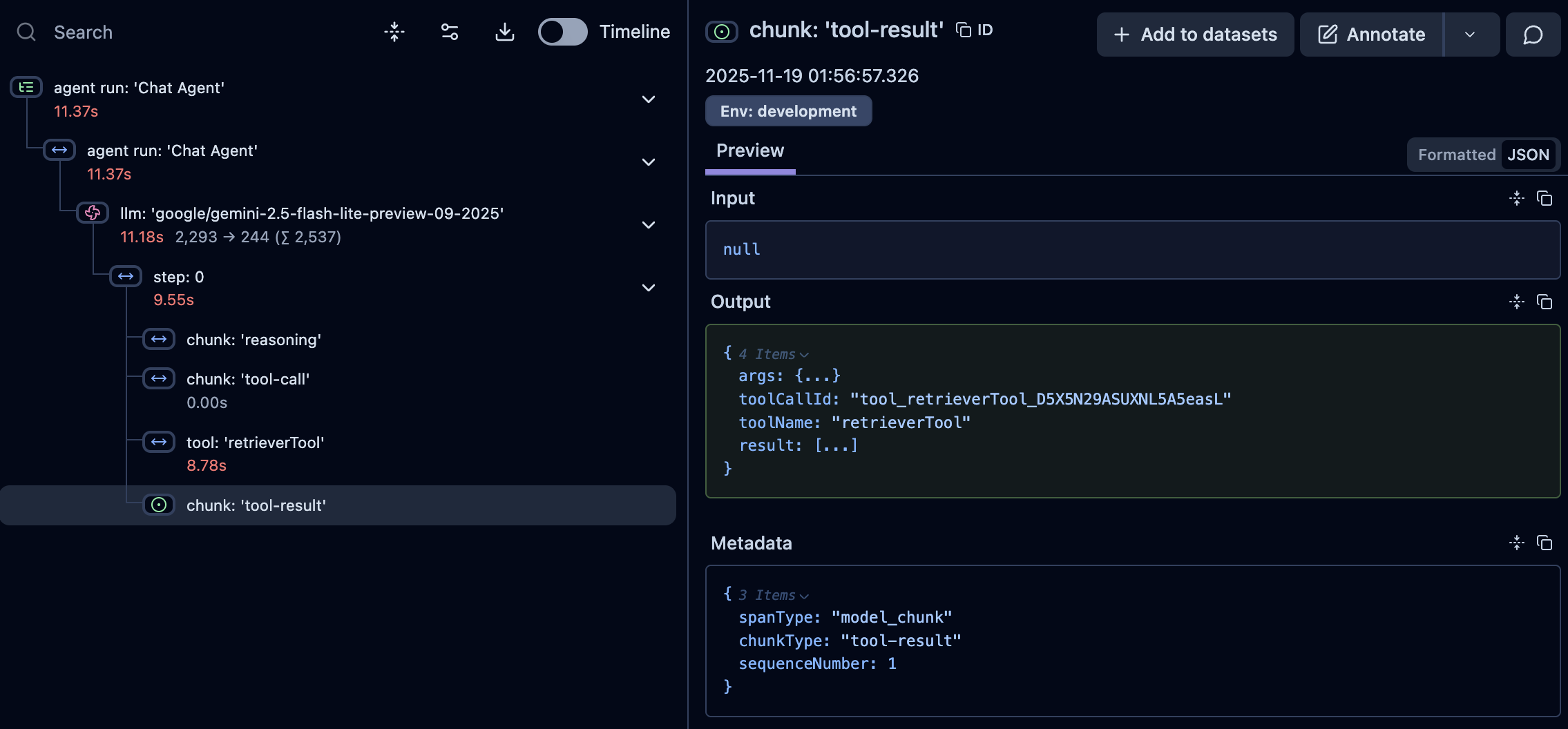

Mastra v1 observability

Tool Calling using Gemini 3

thought_signature. Here is the associated documentation. I am not sure if Mastra is handling this now or if it still goes through the ai-sdk.

REST call still works even after the deployment is disabled on a Mastra Cloud project

Errors seem to escape to stdout and are not caught by the pino logger

Error executing step generate-draft-response: AI_APICallError: Rate limit reached...

Mastra Cloud deployment fails without logs

Re-generate a message

Impossible to pass an image to any agent using the .generate() function with version 0.24.1

Mastra Cloud - new account (Google) initializes with "random" repo