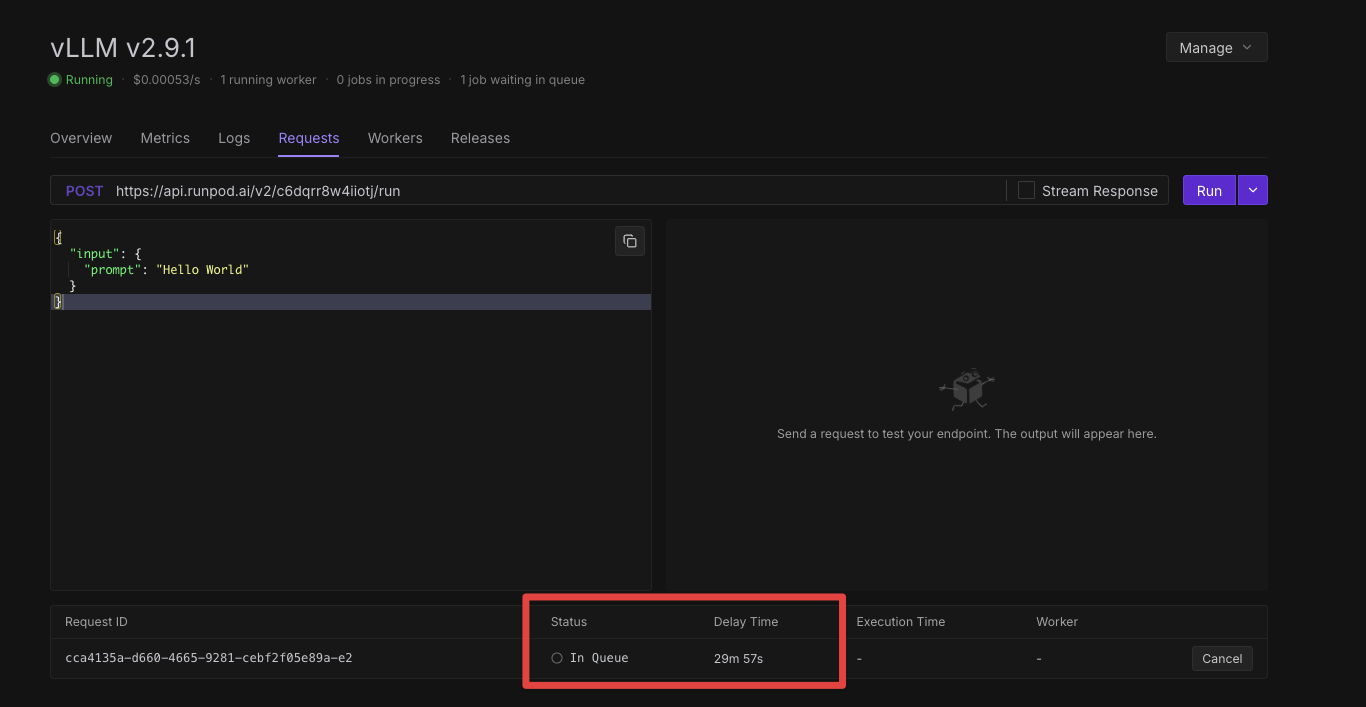

Requests not leaving queue

Requests in queue are not processed

Unable to use model gemma3:27b-it-q4_K_M

Using secrets in serverless

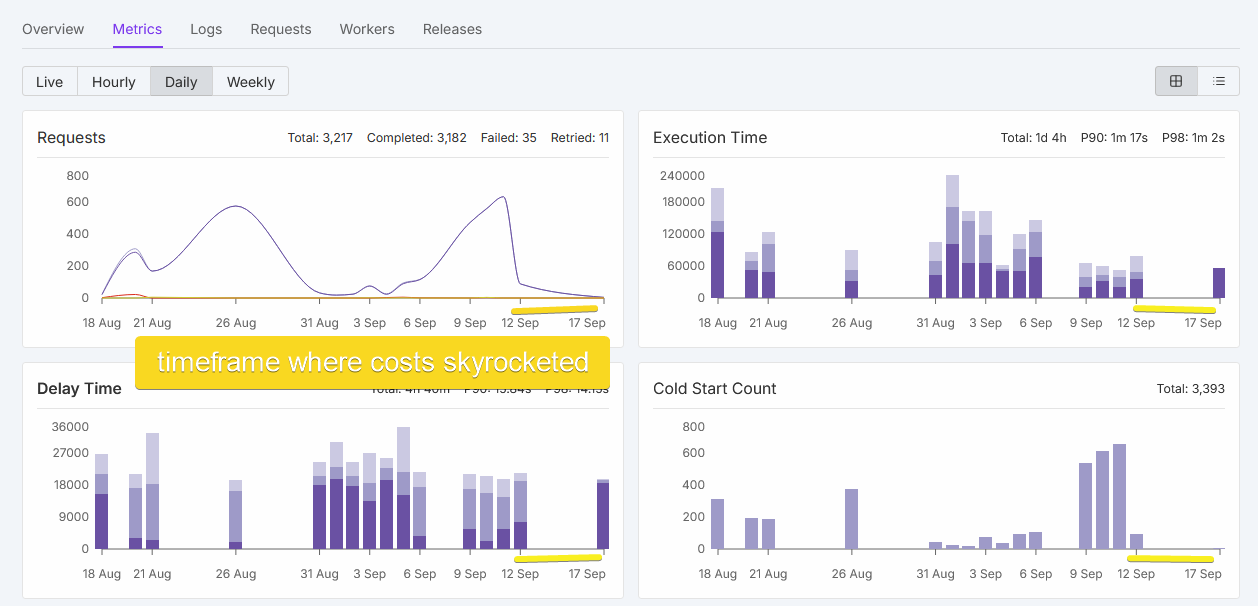

High serverless costs despite no traffic

Questions about S3 Image Uploading

Uploading images generated by ComfyUI to Cloudflare R2 via S3 does not work

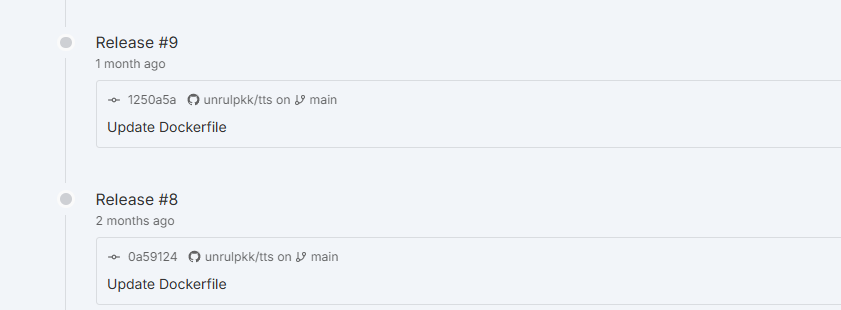

Can serverless applications be rolled back to a previous version?

Docker & ComfyUI

Timeout

requirement error: unsatisfied condition: cuda>=12.8, please update your driver to a newer version, or use an earlier cuda container: unknown

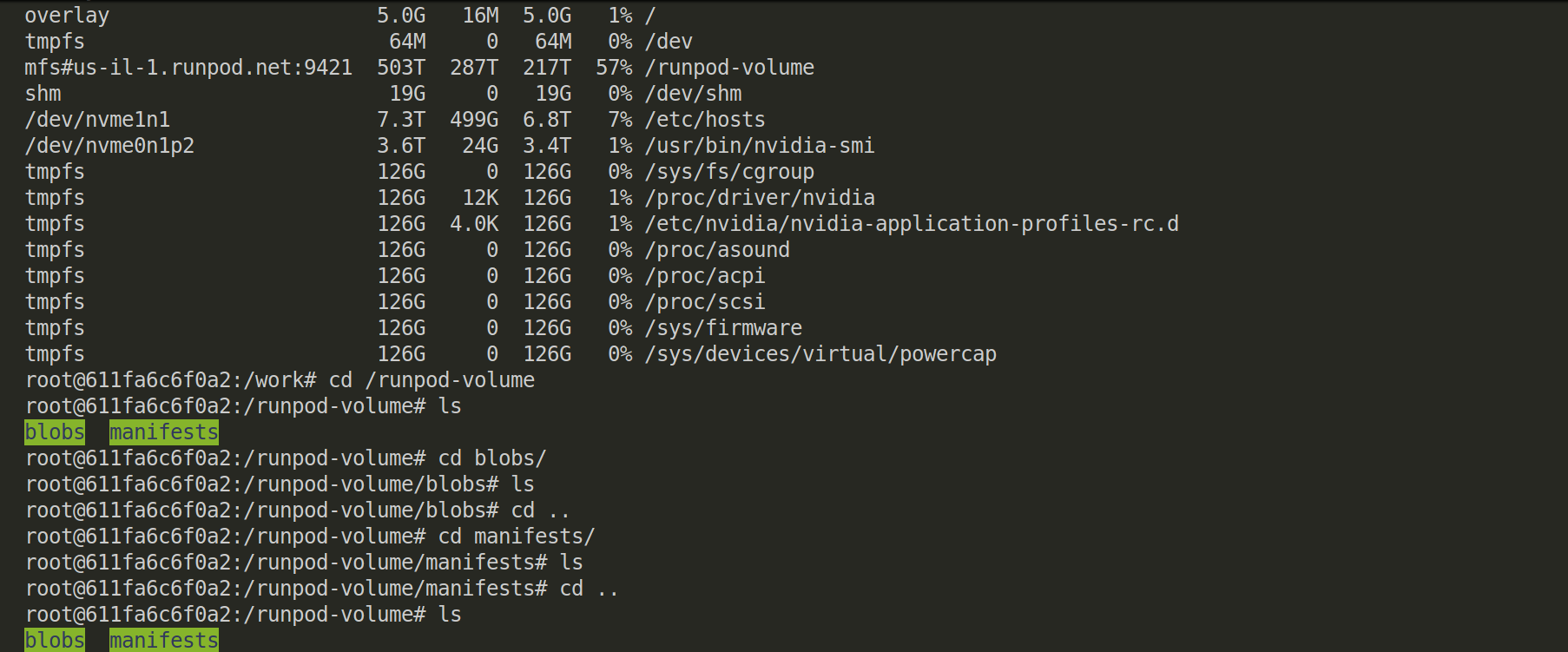

Custom checkpoint on ComfyUI Serverless

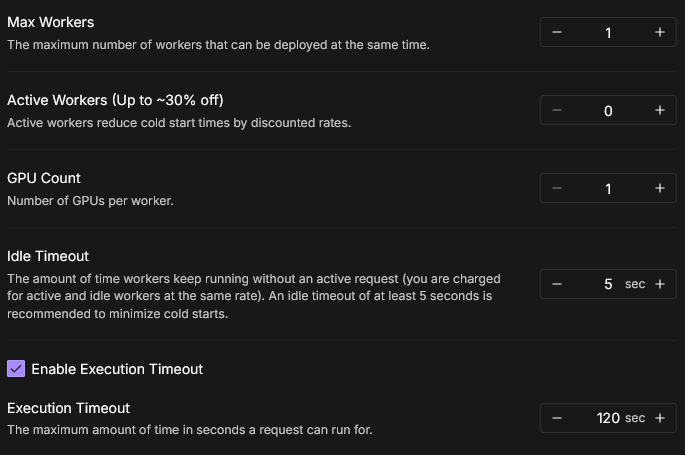

Worker stays idle despite configuration

How to do auto scaling based on request spikes?

Why is building a serverless endpoint so slow?

Issues Accessing FastAPI /docs and /health via Proxy

Is anyone else facing serious outages today?

Delays and Workers Not Starting on RunPod Serverless (SGLang Deployment, MedGemma 4B VLM Model)

error creating container: nvidia-smi: parsing output of line 0: failed to parse (pcie.link.gen.max)

Serverless pod error 500 when uploading build

Finishing 0--1 failed with 500, retrying

Endpoint ID: ljnx57qtdbr2oa

Build ID: f98bb0b5-7187-4c77-8207-606db3c556e8...

Cold start: Docker private repo vs GitHub