It's just that I don't have a user, so I don't care about the 250ms to the user

Probably coming at some point, since they built it for Vercel

and I'd think routing through Cloudflare internally (i.e. via queue) would be much more efficient than routing via the public internet

I mean, if it is just the routing you are after, Argo Smart Routing exists?

not sure what argo smart routing would do here? This is worker <-> queue <-> worker <-> external API

I suppose doing the worker <-> external API route via Argo would be possible (?) not sure though.

But really what I'd want is one of the two other connections to do this.

Attaching either the queue or the second worker to a specific region would get me all the benefits for free as all the latency would be hidden

(not talking money cost, I mean in terms of perf / latency)

For a hacky way of forcing a location, you could use a Durable Object?

You could probably do that through a Service Binding?

not sure I understand. how would I do that? (I'm already using DOs in this setup somewhat)

Basically, create a few DOs as close to your origin as possible, then save their IDs. Whenever you need to run code close to the origin, forward the request to those DOs, and you are good to go
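A rough sketch of the "save the ID, forward the request" part, in case it helps. The interfaces are minimal stand-ins for the Workers runtime types so the snippet is self-contained. `idFromString`, `get` and the stub's `fetch` follow the Durable Objects API, while `forwardToSavedObject` and the saved ID are hypothetical names. It assumes the DO already exists near the origin and does not show how to create it there.

```typescript
// Minimal stand-ins for the runtime's Durable Object types (assumption:
// in a real Worker these come from the Workers runtime, not from here).
interface ObjectId {
  toString(): string;
}
interface ObjectStub<Req, Res> {
  fetch(request: Req): Promise<Res>;
}
interface ObjectNamespace<Req, Res> {
  idFromString(hexId: string): ObjectId;
  get(id: ObjectId): ObjectStub<Req, Res>;
}

// Hypothetical helper: rebuild the ID that was saved when the DO was
// created, get a stub for it, and forward the request to that DO.
function forwardToSavedObject<Req, Res>(
  ns: ObjectNamespace<Req, Res>,
  savedId: string,
  request: Req,
): Promise<Res> {
  const id = ns.idFromString(savedId);
  return ns.get(id).fetch(request);
}
```

In a Worker this would be called from the handler with the DO binding from `env` and one of the saved ID strings. The work inside the DO's `fetch` then runs wherever that DO lives.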

how do I create the DOs there? Also wouldn't they migrate eventually? (According to https://where.durableobjects.live/)

If a datacenter goes down, they will move, but not very far. And as of yet, automatic migration is still to be implemented.

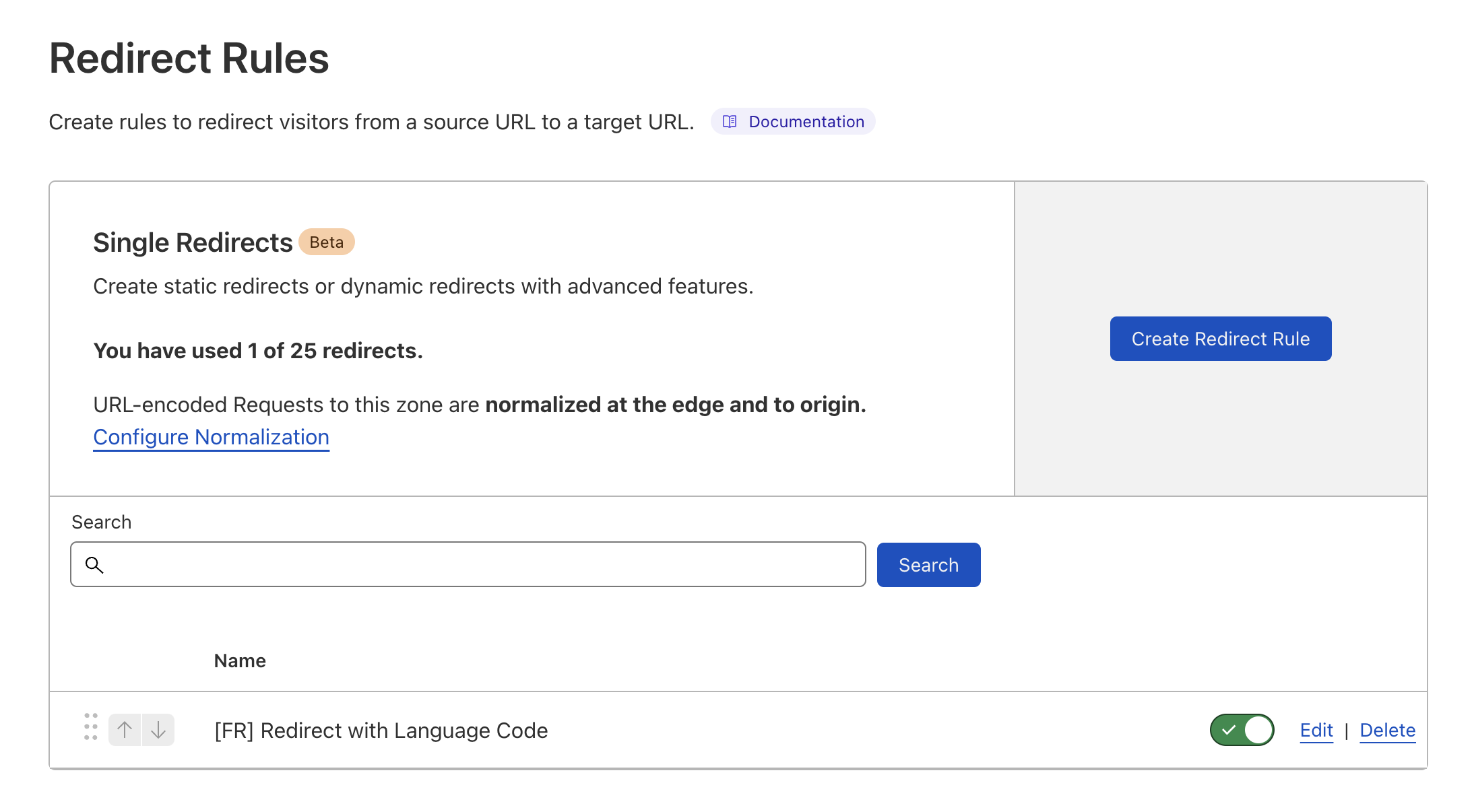

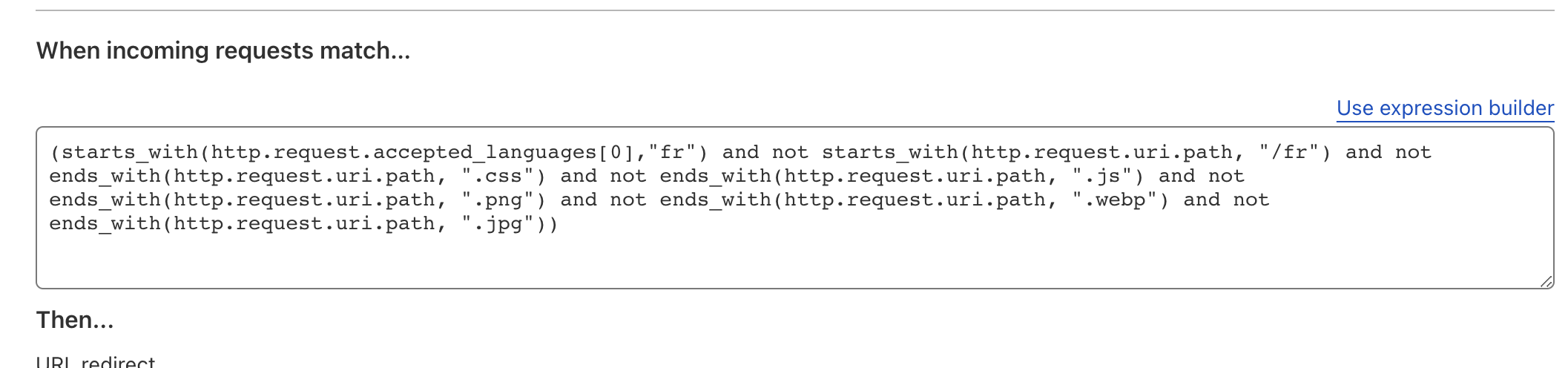

Hey, wondering if anyone is online to help with some redirect rules for language based issues

I am attempting to build a redirect at the Cloudflare Worker level to handle this /fr/ addition and avoid cache issues

fair enough, I'd learn about the datacenter going down anyways and could just "move" them back later.

How does the routing work here? It would be worker <-> DO so I'm assuming that'd be similar to Argo since the worker would still be placed by some unknown force?

The Worker is placed closest to the invoking client.

I guess that's a question for #queues-beta but not sure what the invoking client for queue / cron / alarms / pub/sub / etc. are

Oh, it's with a queue... Right...

Queue/PubSub: No Idea

Cron: Anywhere with low traffic, carbon neutral datacenter if you enable green compute

Alarms: In the Colo where your DO was created.

I'll ask in Queue. I'd guess there's something similar going on to Alarms, so maybe I can somehow get the queue to be created in my respective data center. Thanks a lot for the help already!

No problem!

Can I use Transform Rules to redirect based on visitor language or do I need to use redirect?

Seems excessive to make a separate redirect rule for every language, maybe there is a better way?

And also excessive to check for every endswith

I am not sure if it is best to:

1.) Handle this problem with this solution

2.) Use Transform or use Redirect

3.) Create a separate rule for each language
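For the Worker route, one function can cover every language instead of one rule per language. This is only a sketch: `preferredLanguage`, `localizedPath` and the supported-language list are made-up names, and it assumes the visitor's language comes from the `Accept-Language` header and that localized pages live under a `/<lang>/` prefix.

```typescript
// Pick the supported language with the highest q-value from an
// Accept-Language header value, or null if none of them is supported.
function preferredLanguage(header: string | null, supported: string[]): string | null {
  if (!header) return null;
  const ranked: { lang: string; q: number }[] = [];
  const parts = header.split(",");
  for (let i = 0; i < parts.length; i++) {
    const pieces = parts[i].trim().split(";");
    // "fr-CA" is reduced to its primary subtag "fr".
    const lang = pieces[0].trim().toLowerCase().split("-")[0];
    let q = 1; // a missing q parameter means q=1
    for (let j = 1; j < pieces.length; j++) {
      const p = pieces[j].trim();
      if (p.indexOf("q=") === 0) q = parseFloat(p.slice(2));
    }
    if (lang && !isNaN(q) && q > 0) ranked.push({ lang, q });
  }
  ranked.sort((a, b) => b.q - a.q);
  for (let i = 0; i < ranked.length; i++) {
    if (supported.indexOf(ranked[i].lang) !== -1) return ranked[i].lang;
  }
  return null;
}

// Return the path to redirect to, or null when no redirect is needed
// (the path already has a language prefix, or no language matched).
function localizedPath(pathname: string, header: string | null, supported: string[]): string | null {
  const first = (pathname.split("/")[1] || "").toLowerCase();
  if (supported.indexOf(first) !== -1) return null;
  const lang = preferredLanguage(header, supported);
  return lang ? "/" + lang + pathname : null;
}
```

In the Worker's fetch handler you would call `localizedPath(url.pathname, request.headers.get("Accept-Language"), ["fr", "de"])` and, when it returns a path, answer with `Response.redirect(...)` to that path on the same host. Otherwise pass the request through unchanged.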

Just a heads-up that the #cloudflare-for-saas channel has now been archived because it didn't have much activity. If you have any questions about SaaS, feel free to ask on the Community: https://community.cloudflare.com

is it possible to make a push notification server on workers? should I just use something like Firebase Cloud Messaging or AWS SNS?

Pub/Sub is MQTT, so it's not really the same as SNS or FCM

well, at least not SNS; not sure what FCM is

SNS and FCM both do mobile push notifications

ah, I don't use SNS that way

Could you use a worker as a websocket client? Say I make a request to wake up a worker, that worker then connects to a websocket server and waits for messages, and when new messages come in it processes them (or a durable object, doesn't really matter)

GitHub - cloudflare/workers-rs: Write Cloudflare Workers in 100% Rust via WebAssembly

I think they have to be strings?

Does ORIGINS work?