I wonder what the max concurrency you can set will be

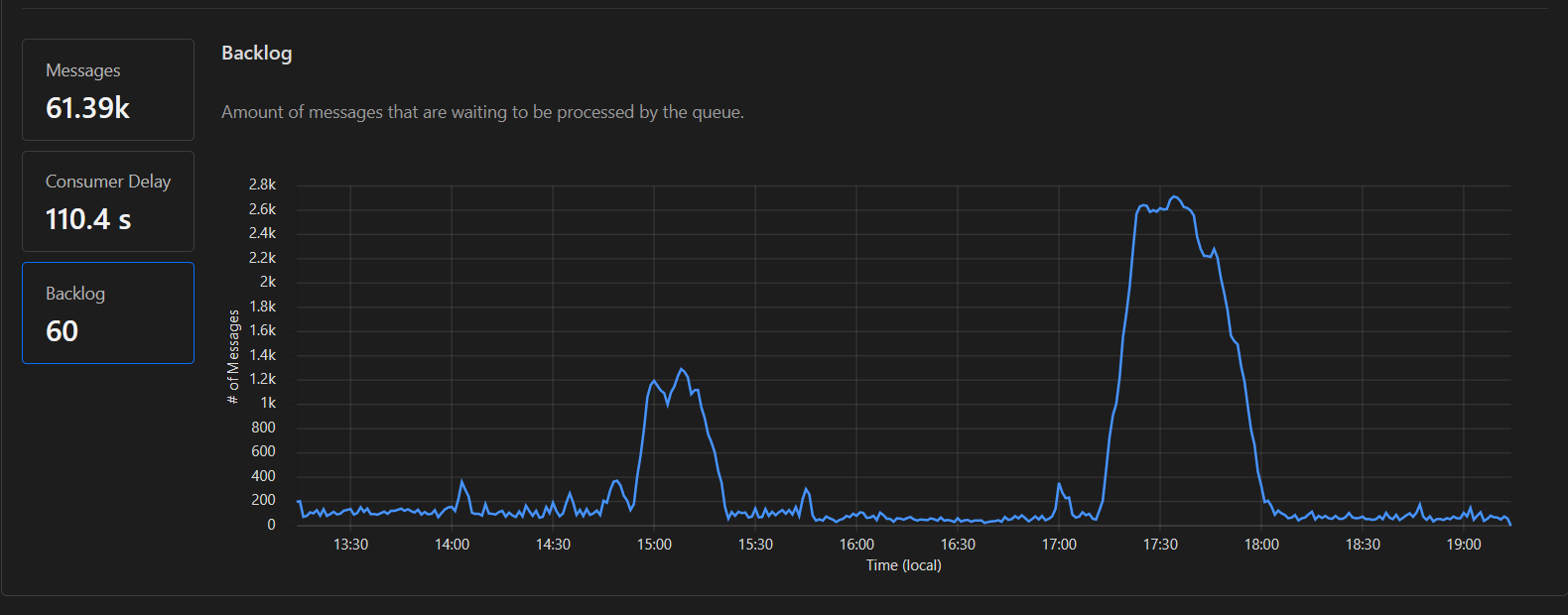

Was deploying while it was working through a 2.7k backlog and wanted to make sure I wasn't breaking it
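
On the concurrency question: whatever cap the platform ends up allowing, its effect on a backlog like this 2.7k one is that at most that many messages are being handled at any moment. Below is a minimal client-side sketch of that behaviour. `drainWithLimit` and its `limit` parameter are hypothetical names for illustration only. This is not the platform's own consumer setting or API.

```typescript
// Hypothetical sketch: drain a backlog with at most `limit` handlers in flight.
// Illustrates what a concurrency cap means; it is not the platform's API.
async function drainWithLimit<T, R>(
  items: readonly T[],
  limit: number,
  handle: (item: T) => Promise<R>,
): Promise<R[]> {
  if (!Number.isInteger(limit) || limit < 1) {
    throw new RangeError("limit must be a positive integer");
  }
  const results: R[] = new Array(items.length);
  let next = 0; // index of the next unclaimed item

  // Each lane keeps claiming the next item until the backlog is empty.
  // JS runs this single-threaded, so `next++` cannot be claimed twice.
  async function lane(): Promise<void> {
    while (next < items.length) {
      const i = next++;
      results[i] = await handle(items[i]);
    }
  }

  // Start at most `limit` lanes; results keep the original item order.
  const lanes = Array.from({ length: Math.min(limit, items.length) }, lane);
  await Promise.all(lanes);
  return results;
}
```

Raising `limit` shortens the time to clear the backlog only as far as downstream services tolerate the extra parallel load, which is presumably why the maximum is capped in the first place.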

I could use https://repeat.dev on a cron to ping the `list()` worker and keep it all in the cloud too

`await scheduler.wait()`: this is probably the bit I'm missing? What is that? Does it sleep without being billed or causing timeouts?
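
As far as I know, `scheduler.wait(ms)` is the Workers runtime's awaitable alternative to `setTimeout`; whether the waiting time is billed or counts toward timeouts is exactly the open question and is not settled here. A minimal sketch of a sleep helper that uses it when the global exists and falls back to a `setTimeout` Promise elsewhere (e.g. local tests). The `sleep` name and the `MaybeScheduler` type are hypothetical.

```typescript
// Hypothetical helper: an awaitable sleep. Inside the Workers runtime it uses
// the global `scheduler.wait(ms)`; elsewhere (Node, local tests) it falls
// back to wrapping setTimeout in a Promise.
type MaybeScheduler = { scheduler?: { wait?: (ms: number) => Promise<void> } };

function sleep(ms: number): Promise<void> {
  const s = (globalThis as unknown as MaybeScheduler).scheduler;
  if (s && typeof s.wait === "function") {
    return s.wait(ms); // called as a method so `this` stays bound to scheduler
  }
  return new Promise<void>((resolve) => setTimeout(resolve, ms));
}
```

Usage would be something like `await sleep(1000);` between polls. The helper only replaces the sleep itself; it says nothing about billing or request time limits.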