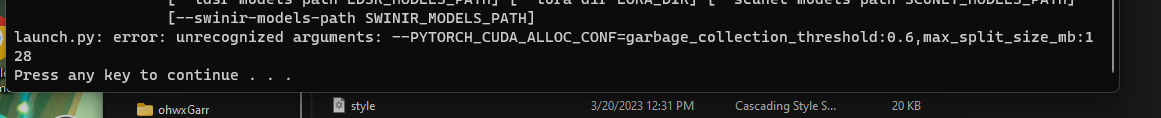

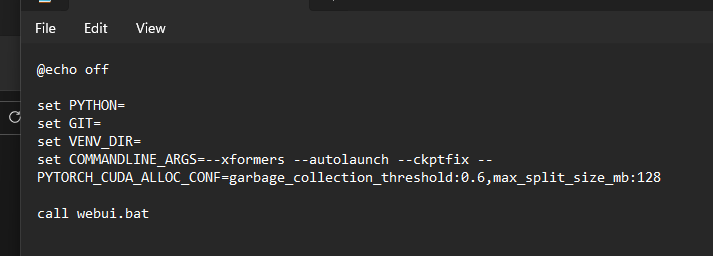

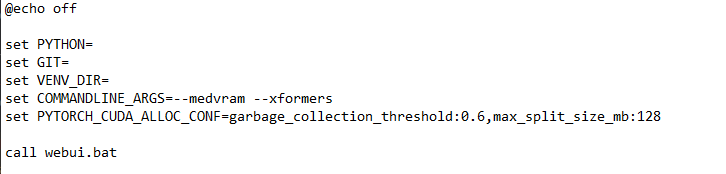

@Furkan Gözükara SECourses The command you gave does not seem to work.

`memory_efficient_attention_forward`: `cutlassF`, `flshattF`, `tritonflashattF`, `smallkF`