They are not `.sql` dumps, but I can use this and recreate it.

I just save to R2

(I know I can avoid a lot of the date handling with a `.toISOString()`, but it was multiple changes that led to this and I'm not bothered enough to redo it just yet ahah)

still cool, nice

I ended up updating it now
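For anyone following along, the `.toISOString()` simplification mentioned above could look roughly like this. It is only a sketch: the `backupKey` helper, the `backups/<db>/<timestamp>.json` key layout, and the `.json` extension are assumptions for illustration, not the code from this thread.

```typescript
// Hypothetical helper: builds an object key for a backup saved to R2.
// The "backups/<db>/<timestamp>.json" layout is an assumption for illustration.
function backupKey(dbName: string, takenAt: Date): string {
  // toISOString() always returns UTC in the form YYYY-MM-DDTHH:mm:ss.sssZ,
  // so the keys sort chronologically as plain strings.
  const stamp = takenAt.toISOString();
  return `backups/${dbName}/${stamp}.json`;
}

const key = backupKey("my-db", new Date(Date.UTC(2023, 5, 27, 18, 2, 0)));
console.log(key); // backups/my-db/2023-06-27T18:02:00.000Z.json
```

Because the timestamp is fixed-width and in UTC, listing keys by prefix returns them in time order without any manual date formatting.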

I'd love to be able to get them all with `.raw()` and not `.all()` as batch does. It'd be more space efficient than a JSON object. But doing the processing on tens and tens of thousands of rows doesn't seem useful.

We're looking at instead adding a `.values()` API that just returns an array of values, without column names.

should i store json as a blob or text?

TEXT
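A rough sketch of what storing JSON as TEXT means on the application side: serialize before binding the value, parse after reading it back. SQLite's built-in JSON functions such as `json_extract` work on JSON stored as text. The `Settings` shape and both helper names are made up for illustration.

```typescript
// Hypothetical shape of a value kept in a TEXT column.
interface Settings {
  theme: string;
  tags: string[];
}

// Serialize before binding the value to a TEXT column.
function toJsonText(value: Settings): string {
  return JSON.stringify(value);
}

// Parse after reading the TEXT column back; returns null for malformed text.
function fromJsonText(text: string): Settings | null {
  try {
    return JSON.parse(text) as Settings;
  } catch (_err) {
    return null;
  }
}

const stored = toJsonText({ theme: "dark", tags: ["a", "b"] });
const loaded = fromJsonText(stored);
```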

New docs & updates:

- Metrics & analytics (now in the dash & via our GraphQL API): https://developers.cloudflare.com/d1/platform/metrics-analytics/

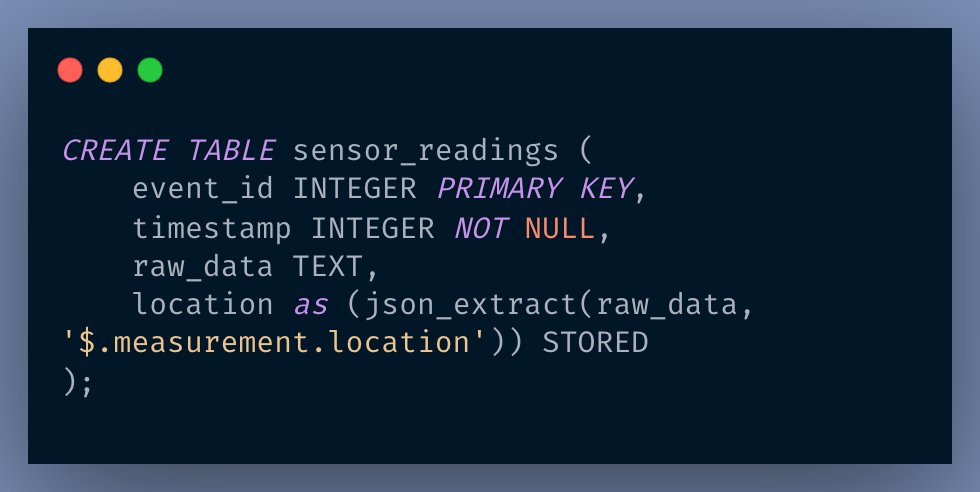

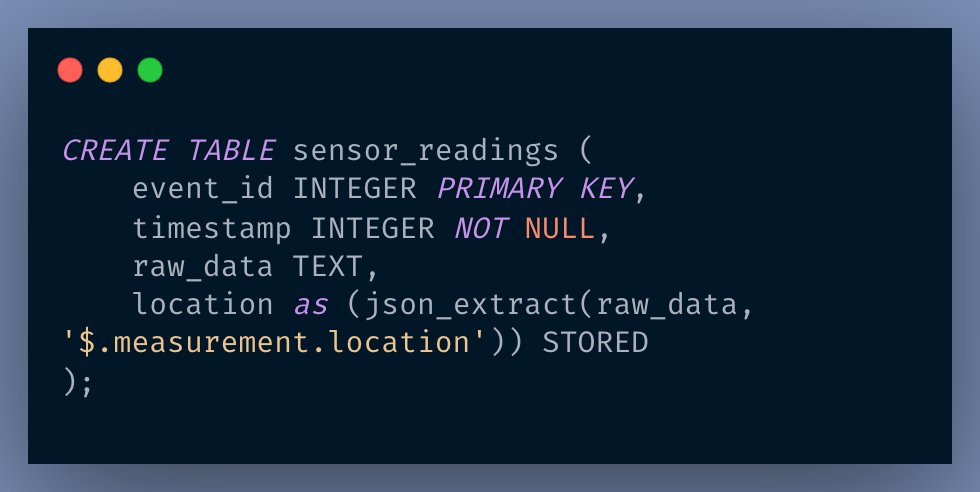

- Generated columns (very powerful way to extract + index JSON, amongst other things): https://developers.cloudflare.com/d1/learning/generated-columns/

- Deprecating `Error.cause`: https://developers.cloudflare.com/d1/changelog/#deprecating-errorcause

No ETA at the moment. We are focused on metrics, Time Travel (point in time recovery), and stability.

Curious: what happened?

Matt Silverlock 🐀 (@elithrar)

If you've used @Cloudflare D1, you may not realize that you can create generated (aka: virtual) columns based on properties within raw JSON objects + indexes over those properties to (dramatically) speed up access to often-queried properties.

6/27/23, 6:02 PM
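To make the tweet concrete, here is a minimal sketch of a generated column over a JSON property plus an index on it, written as SQL strings that could be passed to a D1 prepared statement. The `events` table, the `payload` and `user_id` columns, and the `$.user.id` path are all hypothetical; see the generated-columns docs linked above for the supported syntax.

```typescript
// Hypothetical table and column names, for illustration only.
const createTable = `
CREATE TABLE events (
  id INTEGER PRIMARY KEY,
  payload TEXT NOT NULL, -- raw JSON stored as text
  user_id TEXT GENERATED ALWAYS AS (json_extract(payload, '$.user.id')) VIRTUAL
);`;

// Index the generated column so lookups avoid parsing JSON for every row.
const createIndex = `CREATE INDEX idx_events_user_id ON events (user_id);`;

// Queries filter on the generated column like any other column.
const lookup = `SELECT id, payload FROM events WHERE user_id = ?;`;

const statements: string[] = [createTable, createIndex, lookup];
```

A VIRTUAL column is computed when read and takes no extra storage in the table itself; the index is what stores the extracted values.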

I think triggers are the right approach here and/or a formal audit logging feature.

At some point we want to expose Workers-based functions. We won’t be exposing raw SQLite APIs.

That’s the rough plan. Won’t be soon — lots of more foundational things to solve first

That would be really cool. Understand the next message, but very cool.

I might be really stupid here, but when using a D1 database locally it works fine; when I push the same code to run on Workers, it produces this error:

If more info is needed please lmk, I'm likely missing something really simple but that's why I'm here

Do you have an example of the query you're running into issues with?

i'll get it one moment

welp nevermind, it was a PRAGMA for `user_version` and I just read the docs on PRAGMA so I'll come up with a workaround - ty

Curious: what are you trying to do with storing data in `user_version`?
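One possible workaround, sketched under the assumption that `user_version` was being used to track a schema version (the thread does not say): keep the number in an ordinary table instead. The `app_meta` table, the `schema_version` key, and the `currentVersion` helper are all hypothetical.

```typescript
// Hypothetical key/value metadata table standing in for PRAGMA user_version.
const createMeta = `CREATE TABLE IF NOT EXISTS app_meta (
  key TEXT PRIMARY KEY,
  value INTEGER NOT NULL
);`;

const readVersion = `SELECT value FROM app_meta WHERE key = 'schema_version';`;

// Upsert so the first write inserts the row and later writes update it.
const writeVersion = `INSERT INTO app_meta (key, value) VALUES ('schema_version', ?)
  ON CONFLICT (key) DO UPDATE SET value = excluded.value;`;

// Treat a missing row as version 0, matching user_version's default of 0.
function currentVersion(row: { value: number } | null): number {
  return row === null ? 0 : row.value;
}
```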

It's probably a known issue, but in the metrics tab these don't show anything, in other graphs they do.

not implemented yet

Ok, "known" issue then, no problem ahah

Out of curiosity, what are your thoughts on the stability of the experimental backend vs the old backend for D1?

I have a few projects that are using the old D1 backend, is it worth switching now or should we wait for the beta?


I mean both are inherently unstable given it's alpha

The new backend should be faster but doesn't have backups at the current moment afaik

Yup, asking with the assumption of the alpha tag haha. I more asked because when the new backend came out, I noticed that there were enough obvious errors that switching didn't make sense. Wondering if the situation has improved now to the point that a general happy path (simple query + insert statements with low load) isn't expected to encounter errors.

The execution times are noticeably better and there haven't really been many major issues I've seen in this channel. Do keep in mind that the new backend doesn't have backups yet

Got it, that's good to know. I suppose once backups are available it makes sense to switch then

Keep in mind it isn't going to be a SQL dump zip but Time Travel.

https://blog.cloudflare.com/d1-turning-it-up-to-11/ search for Time Travel header


i have a decent oltp db in mysql, about 150gb, that i would love to get into d1 so the performance is going to be a real issue

i have workers serving 500 req/sec out of kv at the moment but im really interested in having the backend db in d1, pushing aggregated data into kv

fwiw #analytics-engine-beta is ridiculously good for high rps analytics data, we're using it to power the stats in the dashboard

Hello, I plan to use D1 in a future product. Will there be any breaking changes between the alpha release and the production-ready D1?

It is possible