Basically, the cacheTTL you use for the fronting cache is the same as the cacheTTL for KV. Because both copies are populated by the same read and expire together, every read that falls through to KV is a cold read, whereas KV takes great pains to make sure that you never see cold reads for frequently accessed keys.

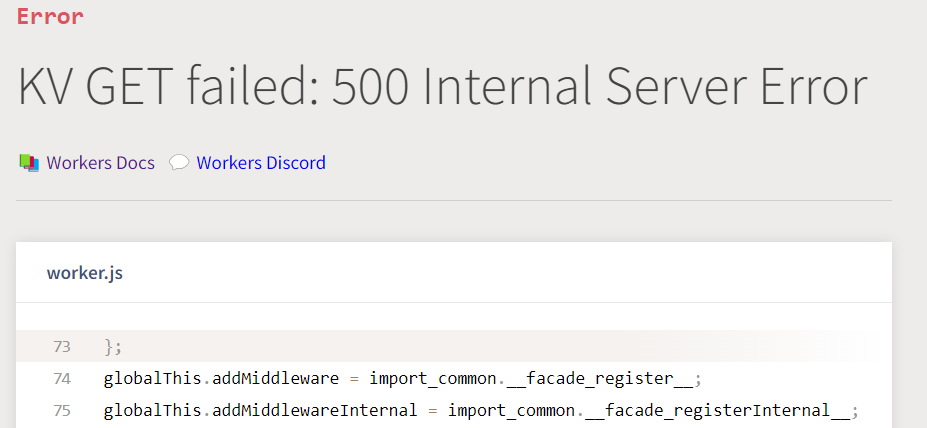

The latest version of the PR isn't building, but I'm working on docs providing guidance:

https://vlovich-kv-advanced-guide.cloudflare-docs-7ou.pages.dev/workers/learning/advanced-kv-guide/#avoid-hand-rolling-cache-in-front-of-workers-kv

I think a good change to BetterKV that follows the recommendation while cutting costs is:

- Set the cache TTL in the fronting cache to ~40-50 seconds (regardless of the cacheTTL the user is requesting from KV).

- Have 1% of cache hits do a `KV.get` anyway inside `waitUntil` (and update your cache with the fresh value, extending the 40-50 second deadline further).
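A minimal sketch of the two bullets above, under stated assumptions: `KVLike` only mirrors the shape of the Workers KV `get(key, { cacheTtl })` call, while `FrontCache`, `cachedGet`, `refreshFromKV`, `MemoryFrontCache`, and `CountingKV` are hypothetical names for illustration, not BetterKV's or Cloudflare's API. `waitUntil` is passed in as a plain function so it could be wired to `ctx.waitUntil` in a Worker, and the 45 s TTL is just one value picked from the suggested 40-50 s range.

```typescript
// Hypothetical stand-ins: the real KV binding and Cache API have richer shapes.
interface KVLike {
  get(key: string, options?: { cacheTtl?: number }): Promise<string | null>;
}

interface FrontCache {
  get(key: string): Promise<string | null>;
  put(key: string, value: string, ttlSeconds: number): Promise<void>;
}

type WaitUntil = (p: Promise<unknown>) => void;

const FRONT_TTL_SECONDS = 45; // ~40-50 s, regardless of the cacheTtl requested from KV
const REFRESH_PROBABILITY = 0.01; // ~1% of fronting-cache hits also read KV

// Read from KV and (re)populate the fronting cache, pushing its deadline out.
// A key deleted in KV is not evicted here; the short TTL bounds the staleness.
async function refreshFromKV(
  key: string,
  kv: KVLike,
  front: FrontCache,
  kvCacheTtl: number,
): Promise<string | null> {
  const value = await kv.get(key, { cacheTtl: kvCacheTtl });
  if (value !== null) {
    await front.put(key, value, FRONT_TTL_SECONDS);
  }
  return value;
}

async function cachedGet(
  key: string,
  kv: KVLike,
  front: FrontCache,
  waitUntil: WaitUntil,
  kvCacheTtl: number,
  random: () => number = Math.random,
): Promise<string | null> {
  const hit = await front.get(key);
  if (hit !== null) {
    if (random() < REFRESH_PROBABILITY) {
      // Background read: KV keeps seeing traffic for this hot key.
      waitUntil(refreshFromKV(key, kv, front, kvCacheTtl));
    }
    return hit;
  }
  // Fronting-cache miss: read through to KV, cache only for the short TTL.
  return refreshFromKV(key, kv, front, kvCacheTtl);
}

// In-memory fakes for local experimentation (not Workers APIs).
class MemoryFrontCache implements FrontCache {
  private entries = new Map<string, { value: string; expiresAt: number }>();
  private now: () => number;
  constructor(now: () => number) {
    this.now = now;
  }
  async get(key: string): Promise<string | null> {
    const entry = this.entries.get(key);
    if (entry === undefined || entry.expiresAt <= this.now()) return null;
    return entry.value;
  }
  async put(key: string, value: string, ttlSeconds: number): Promise<void> {
    this.entries.set(key, { value, expiresAt: this.now() + ttlSeconds * 1000 });
  }
}

class CountingKV implements KVLike {
  reads = 0;
  private data: Map<string, string>;
  constructor(data: Map<string, string>) {
    this.data = data;
  }
  // cacheTtl is ignored: this fake has no edge cache, it only counts reads.
  async get(key: string, _options?: { cacheTtl?: number }): Promise<string | null> {
    this.reads++;
    return this.data.get(key) ?? null;
  }
}
```

Because the fronting TTL is decoupled from the caller's `cacheTtl`, KV keeps receiving a small stream of reads (misses plus ~1% of hits) for hot keys, and each background refresh extends the fronting entry by another 45 seconds instead of letting it expire at the same moment as KV's own cached copy.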

This guide provides best practices for optimizing Workers KV latency as well as covering advanced tricks that our customers sometimes employ for their …