
You have to upload files to R2 separately and link to them from your site. It doesn't increase the per-file size limit in Pages.
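A minimal sketch of the upload half, written as an extra GitHub Actions step since the project already deploys from Actions. It assumes Wrangler v4 (pinned with `wrangler@4`), a hypothetical bucket named `my-bucket`, and an API token that is also allowed to write to R2; `public/video.mp4` is only the example path used in this thread.

```yaml
# Sketch: push a large asset to R2 instead of shipping it in the Pages build output.
# Assumptions (not from the thread): hypothetical bucket "my-bucket", Wrangler v4,
# and a CLOUDFLARE_API_TOKEN that has R2 write access.
- name: Upload large assets to R2
  # Wrangler v4 targets local storage for `r2 object` commands unless --remote is passed.
  run: npx wrangler@4 r2 object put my-bucket/public/video.mp4 --file=./public/video.mp4 --remote
  env:
    # Wrangler reads these variables for non-interactive authentication.
    CLOUDFLARE_API_TOKEN: ${{ secrets.CLOUDFLARE_API_TOKEN }}
    CLOUDFLARE_ACCOUNT_ID: ${{ secrets.CLOUDFLARE_ACCOUNT_ID }}
```

The site then links to the object through the bucket's public domain rather than a path inside the Pages build, so the file never counts against the Pages per-file limit.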

So instead of `/public/video.mp4`, I'd link to `https://<your public r2 domain>/public/video.mp4`?

Is there a way to change the `.pages.dev` domain, like on Vercel? From `repo-name-random_string.pages.dev` to `something.pages.dev`?

... `something.pages.dev` domain. You can use Bulk Redirects to make sure people don't access the main `.pages.dev` domain.

... `Exceeded CPU Limits` errors (requests range from 50 ms to 2000 ms). I thought I would just get billed extra for the additional time, but it's breaking my app.

```
RequestError [HttpError]: Resource not accessible by integration
    at /home/runner/work/_actions/cloudflare/pages-action/v1.5.0/index.js:5857:25
    at processTicksAndRejections (node:internal/process/task_queues:96:5)
    at async createGitHubDeployment (/home/runner/work/_actions/cloudflare/pages-action/v1.5.0/index.js:22110:24)
    at async /home/runner/work/_actions/cloudflare/pages-action/v1.5.0/index.js:22173:26 {
  status: 403,
  response: {
    url: 'https://api.github.com/repos/<my gh account>/<my repo>/deployments',
    status: 403,
```

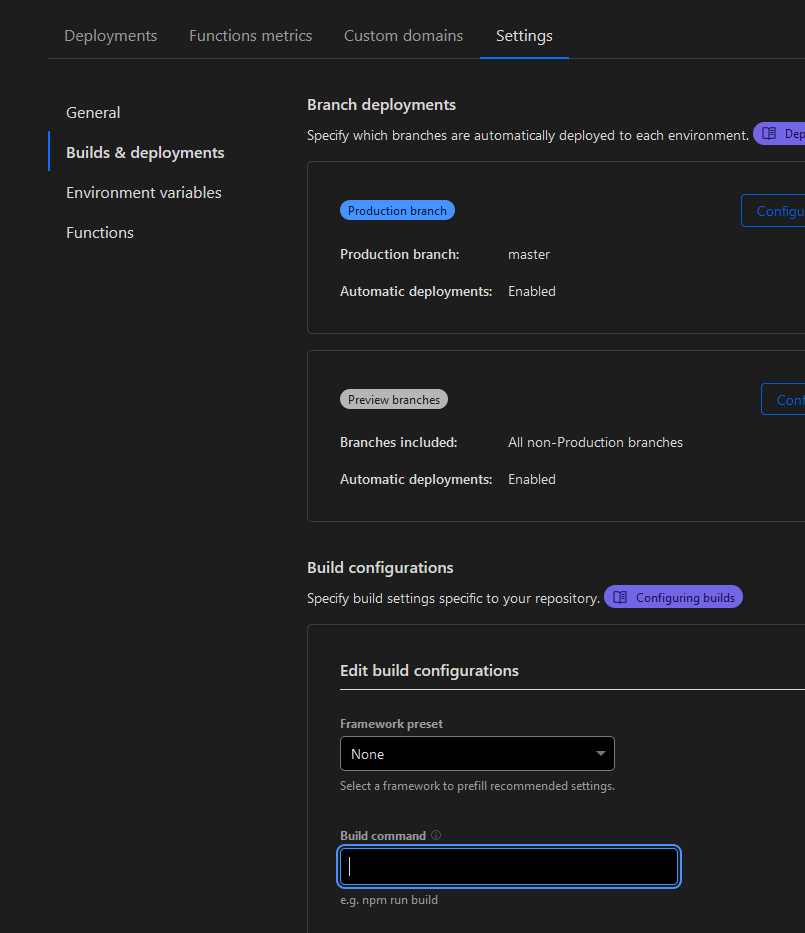

```yaml
jobs:
  push_migration_to_db_staging:
    # ........... <push migrations to supabase>

  deploy_to_cf_staging:
    needs: [push_migration_to_db_staging]
    env:
      # <a bunch of environment variables>
    steps:
      # ..... some steps
      - name: build next for CF
        run: npx @cloudflare/next-on-pages@1

      - name: Deploy to CF pages
        uses: cloudflare/pages-action@v1.5.0
        with:
          apiToken: ${{ secrets.CLOUDFLARE_API_TOKEN }}
          accountId: ${{ secrets.CLOUDFLARE_ACCOUNT_ID }}
          projectName: <proj name>
          directory: .vercel/output/static
          gitHubToken: ${{ secrets.GITHUB_TOKEN }}
          branch: dev
```
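The stack trace fails inside `createGitHubDeployment`, i.e. when the action uses `GITHUB_TOKEN` to create a GitHub deployment, and GitHub answers 403. A likely fix, assuming the repository's default workflow permissions are read-only, is to grant the token `deployments: write` on the deploy job; the same `permissions` block appears in the `cloudflare/pages-action` README example. A sketch of only the changed part of the job:

```yaml
# Sketch: let GITHUB_TOKEN create GitHub deployments for this job.
# Assumption: the 403 comes from read-only default workflow permissions.
jobs:
  deploy_to_cf_staging:
    needs: [push_migration_to_db_staging]
    permissions:
      contents: read       # still needed to check out the repository
      deployments: write   # lets pages-action create the GitHub deployment
    # env: and steps: stay exactly as in the workflow above
```

Setting `permissions` on a job sets every scope not listed to `none`, which is why `contents: read` is kept. Switching the repository's default workflow permissions to read and write under Settings → Actions → General would also work, but scoping it per job grants less.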