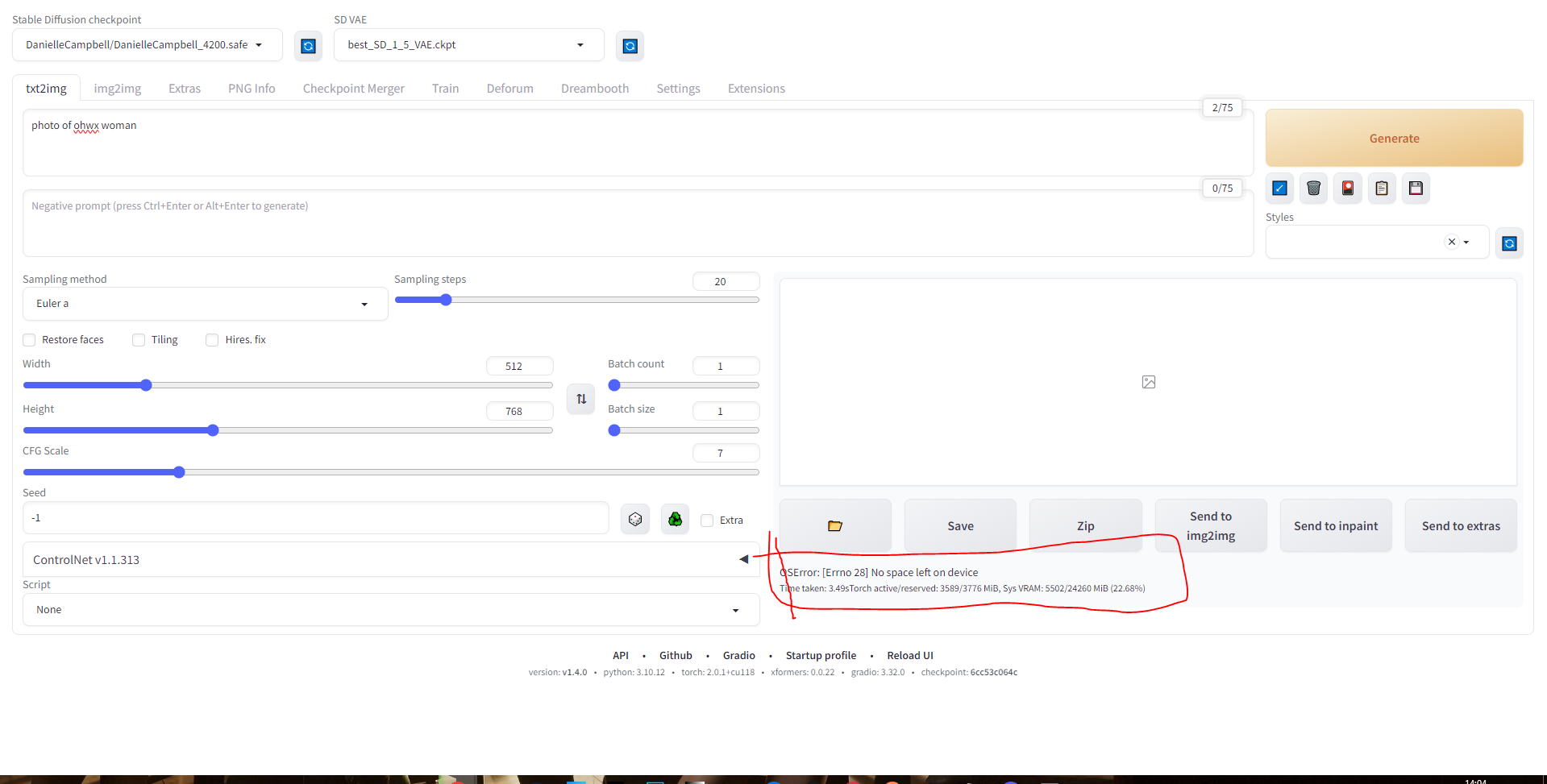

I have trained my model and am trying to test it, but I'm getting this error. Does anyone know how to solve it?

I have trained my model and try to test it but getting this error. Does anyone know how to solve it ?

I can also set up a private session and explain it to you professionally, if you are interested.

i can also make private session and explain you if you are interested in professionally