Rhetorical Consolidation of the X-Risk Debate

I am working on a research project as part of a course organised by BlueDot Impact. The submission deadline is the 19th of November, but I intend to continue working on the project after that.

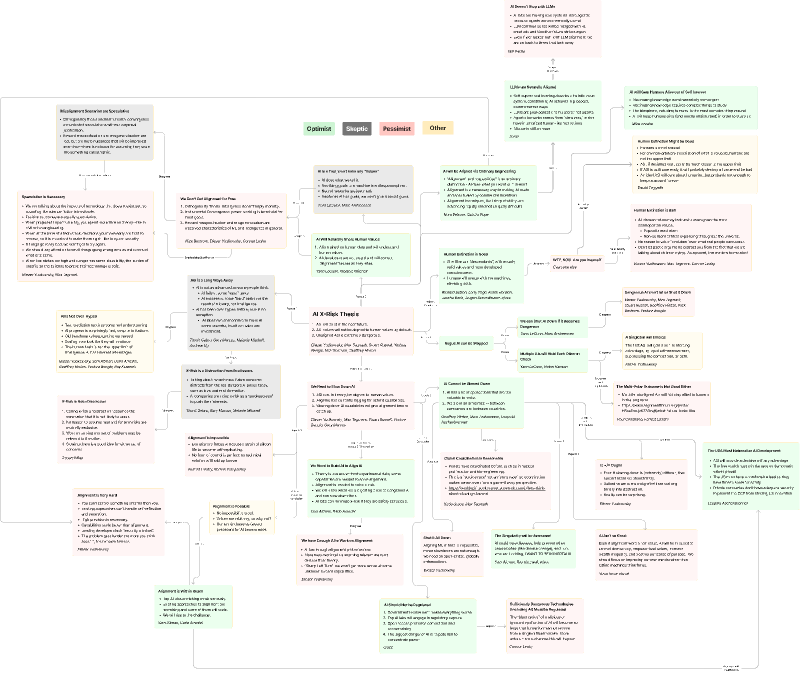

I am trying to figure out whether there is a rational or argumentative asymmetry between the proponents of AI X-Risk and its detractors (my working hypothesis is that there is one, in favor of the proponents). At the same time, I hope to build a hub for collaboration and a reference for analysing the arguments on each side.

I have been putting together:

- A “model” of X-Risk with four hypotheses. Each additional hypothesis gives the model more predictive force but makes it easier to falsify:

  - H1: ASI will be created soon (I will refine the definitions of “ASI” and “soon”)

  - H2: ASI will be able to wipe out humanity

  - H3: ASI will have incentives to wipe out humanity

  - H4: We won’t find a solution to this by the time ASI is created

- Taxonomies of arguments that falsify each hypothesis

- Taxonomies of arguments in support of each hypothesis

- Epistemic consolidations at each node of the taxonomies (an analysis of the arguments in favor, the rebuttals, and a simple conclusion: debunked, unlikely, or likely)
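To make the structure above a bit more concrete, here is a minimal sketch of how one taxonomy node could be represented. This is only an illustration, not a finished design: the names `Verdict`, `ArgumentNode`, `walk` and `unconsolidated` are hypothetical, and the example claims are placeholders rather than real arguments from the debate.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Iterator, List, Optional


class Verdict(Enum):
    """The three simple conclusions a consolidated node can carry."""
    DEBUNKED = "debunked"
    UNLIKELY = "unlikely"
    LIKELY = "likely"


@dataclass
class ArgumentNode:
    """One node in a taxonomy of arguments about a single hypothesis (H1 to H4)."""
    claim: str
    hypothesis: str                      # e.g. "H1"; assumed labelling scheme
    supports_hypothesis: bool            # True: supporting; False: aims to falsify
    analysis: str = ""                   # free-text analysis of the argument
    rebuttals: List[str] = field(default_factory=list)
    verdict: Optional[Verdict] = None    # None until the node is consolidated
    children: List["ArgumentNode"] = field(default_factory=list)

    def walk(self) -> Iterator["ArgumentNode"]:
        """Yield this node and all descendants, depth-first."""
        yield self
        for child in self.children:
            yield from child.walk()


def unconsolidated(root: ArgumentNode) -> List[str]:
    """Return the claims of all nodes that do not have a verdict yet."""
    return [node.claim for node in root.walk() if node.verdict is None]


# Placeholder taxonomy for H1; the claims are dummy text, not real arguments.
h1_against = ArgumentNode(
    claim="placeholder: family of arguments against H1",
    hypothesis="H1",
    supports_hypothesis=False,
    children=[
        ArgumentNode(
            claim="placeholder: counter-argument A",
            hypothesis="H1",
            supports_hypothesis=False,
            rebuttals=["placeholder rebuttal"],
            verdict=Verdict.UNLIKELY,
        ),
        ArgumentNode(
            claim="placeholder: counter-argument B",
            hypothesis="H1",
            supports_hypothesis=False,
        ),
    ],
)

print(unconsolidated(h1_against))
```

A helper like `unconsolidated` would make it easy to see which nodes still need an analysis and a conclusion, which seems useful if several people contribute to the same taxonomy.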

[Hitting 2000 words limit, cutting post in two]