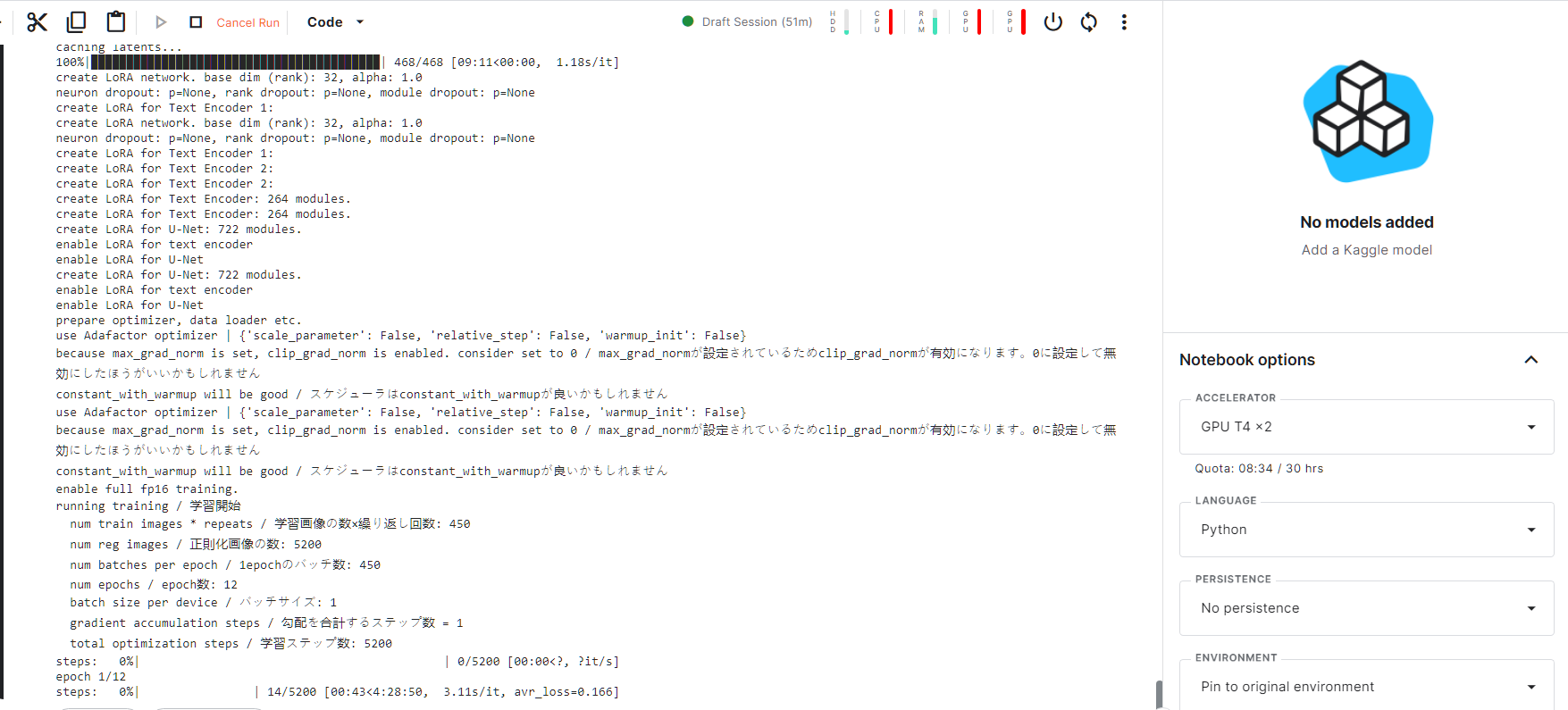

Please help with a Kaggle error. DreamBooth training ends with an error.

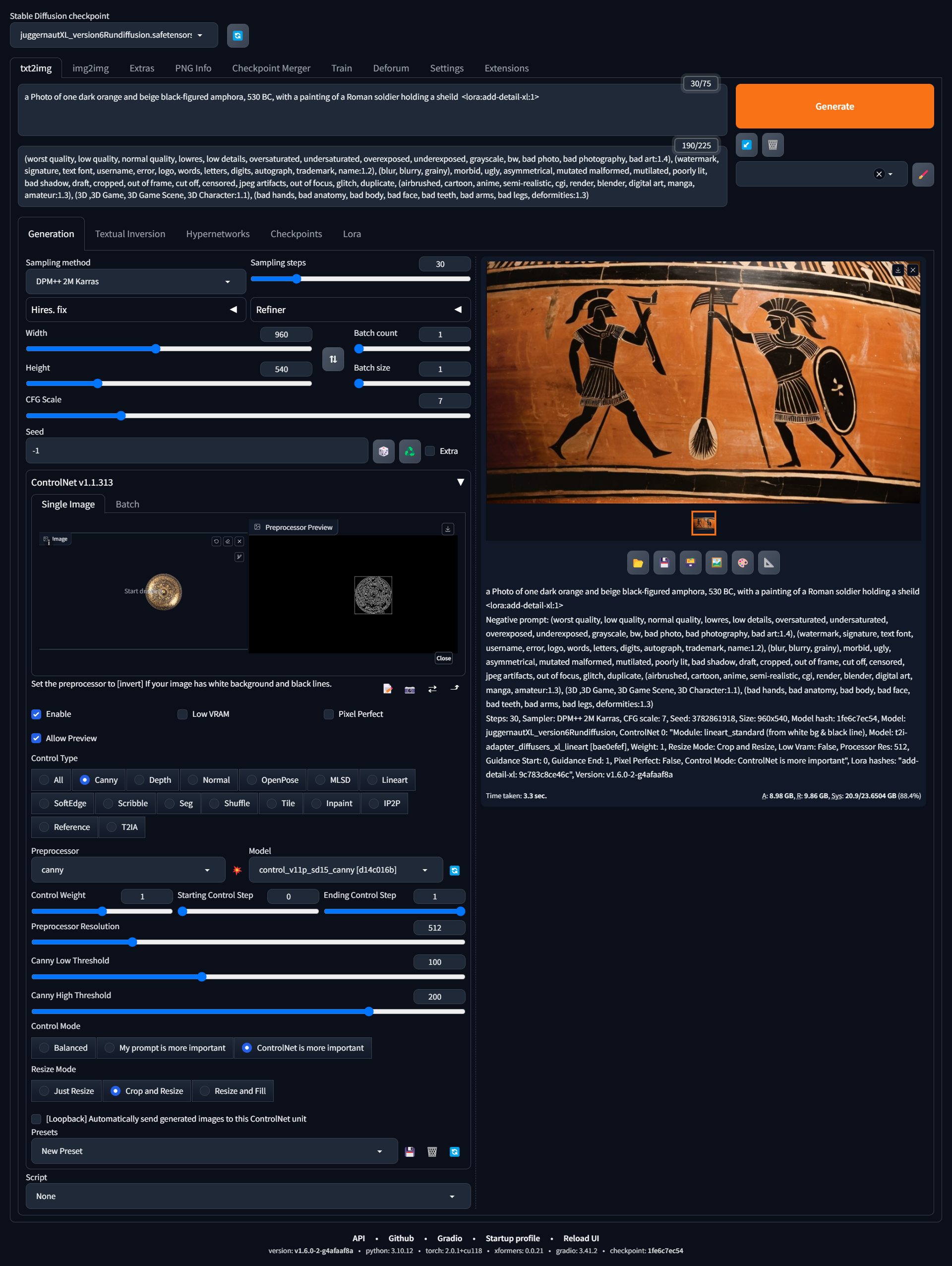

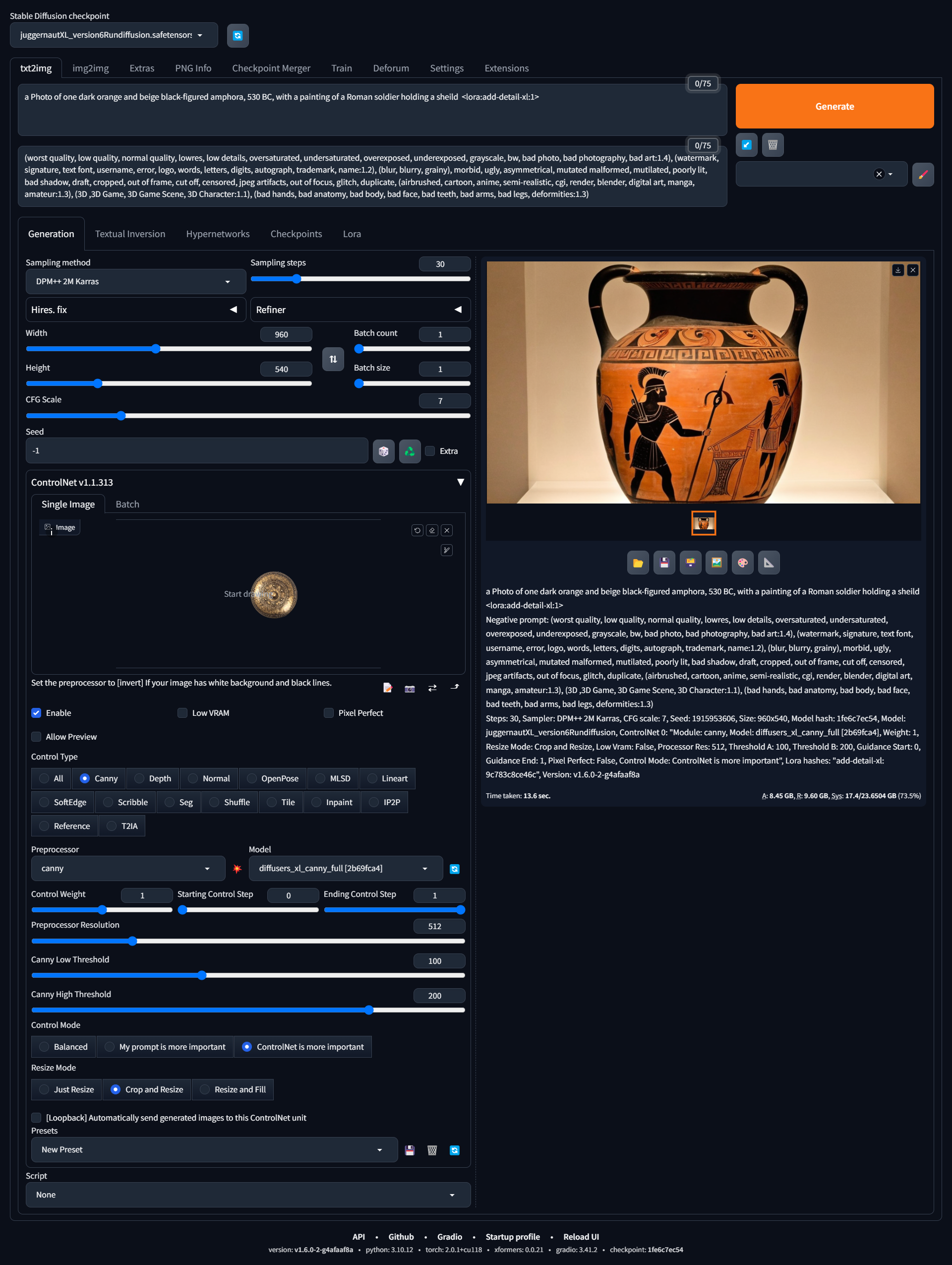

Master Stable Diffusion XL Training on Kaggle for Free!

Welcome to this comprehensive tutorial where I'll be guiding you through the exciting world of setting up and training Stable Diffusion XL (SDXL) with Kohya on a free Kaggle account. This video is your one-stop resource for learning everything from initiating a Kaggle session with dual ...

It was kinda warm in here since it's winter, but yeah. If you're not overclocking and you have a well-ventilated case, I would say don't worry about it.
