I had to write a helper that batches them into groups of 100 and retries if a 500 error happens. It worked for the most part.

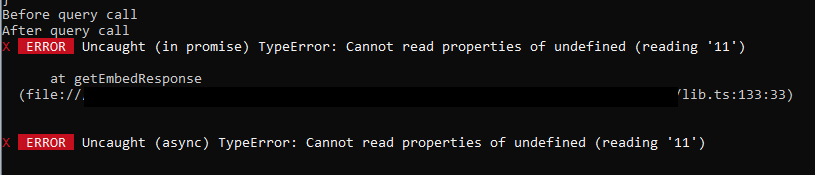
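For anyone who wants a starting point, a batch-and-retry helper like the one described might look roughly like this. It is only a sketch: `chunk`, `withRetry`, `sendInBatches`, and the `send` callback are hypothetical names, and it assumes the failing call throws an error object carrying a numeric `status` field (any 5xx is treated as retryable here, not just 500).

```typescript
// Hypothetical check: assumes errors carry a numeric `status` field.
function isServerError(err: unknown): boolean {
  if (typeof err !== "object" || err === null) return false;
  const status = (err as { status?: unknown }).status;
  return typeof status === "number" && status >= 500;
}

// Split items into consecutive groups of at most `size` elements.
function chunk<T>(items: T[], size: number): T[][] {
  if (size <= 0) throw new RangeError("size must be positive");
  const batches: T[][] = [];
  for (let i = 0; i < items.length; i += size) {
    batches.push(items.slice(i, i + size));
  }
  return batches;
}

// Run `fn`, retrying up to `maxRetries` extra times on a 5xx-style error.
async function withRetry<T>(
  fn: () => Promise<T>,
  maxRetries = 3,
  delayMs = 200,
): Promise<T> {
  for (let attempt = 0; ; attempt++) {
    try {
      return await fn();
    } catch (err) {
      if (!isServerError(err) || attempt >= maxRetries) throw err;
      // Linear backoff before the next attempt.
      await new Promise((resolve) => setTimeout(resolve, delayMs * (attempt + 1)));
    }
  }
}

// Send everything in batches of 100, retrying each batch independently.
async function sendInBatches<T>(
  items: T[],
  send: (batch: T[]) => Promise<void>,
  batchSize = 100,
): Promise<void> {
  for (const batch of chunk(items, batchSize)) {
    await withRetry(() => send(batch));
  }
}
```

In a Worker, `send` would wrap whatever call actually writes the batch. Retrying per batch means one failed group does not force the earlier groups to be re-sent.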

With `await env.<INDEX>.query(vector, { topK: 1})`, in some cases it simply fails and returns the following error message: `TypeError: Cannot read properties of undefined (reading '0')`. The number in `reading '0'` isn't constant either: sometimes it is different, and different userQueries result in different numbers.

The `data` array is sometimes undefined. Not sure why, but that should be my problem.
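One way to stop the `TypeError` from surfacing while the root cause is still unknown is to check both the embedding response and the query result before indexing into them. This is a rough sketch, not a fix for why `data` goes missing: `firstVector` and `topMatch` are hypothetical helper names, and the `Match` and `QueryResult` shapes are assumptions based on the fields that show up in this thread (`vectorId`, `score`, `count`, `matches`).

```typescript
interface EmbeddingResponse {
  shape: number[];
  data: number[][];
}

// Assumed shapes, based on the fields visible in the logged query result.
interface Match {
  vectorId: string;
  score: number;
}

interface QueryResult {
  count: number;
  matches: Match[];
}

// Return the first embedding vector, or null if the response has no usable data.
function firstVector(res: Partial<EmbeddingResponse> | undefined): number[] | null {
  const vec = res?.data?.[0];
  return Array.isArray(vec) && vec.length > 0 ? vec : null;
}

// Return the best match, or null if the query returned nothing.
function topMatch(result: Partial<QueryResult> | undefined): Match | null {
  return result?.matches?.[0] ?? null;
}
```

The idea would be to call `firstVector` on the embedding response before querying the index, and `topMatch` on the query result before reading `score`, so that a `null` can be logged or handled instead of throwing.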

`includeMetadata` param

    export async function getEmbedResponse(env: Environment, userQuery: string, data: string[]): Promise<{ score: number, result: string }> {
      const ai = new Ai(env.AI)
      const queryVector: EmbeddingResponse = await ai.run('@cf/baai/bge-base-en-v1.5', {
        text: [userQuery],
      })
      let matches = await env.<INDEX>.query(queryVector.data[0], { topK: 1 })
      const embedAiResponse = { score: matches.matches[0].score, result: data[parseInt(matches.matches[0].vectorId)] }
      return embedAiResponse
    }

    interface EmbeddingResponse {
      shape: number[];
      data: number[][];
    }

Exception Thrown @ 12/1/2023, 12:06:34 PM

    (log) {
      count: 2,
      matches: [
        { vectorId: 'hzz2v', score: 0.804275747, vector: [Object] },
        { vectorId: 'aml6o', score: 0.726804477, vector: [Object] }
      ]
    }

    let contextResult = await env.DEMO_VECTORDB.query(queryVector, { topK: 5, returnMetadata: true })

    import { CloudflareVectorizeStore } from "langchain/vectorstores/cloudflare_vectorize";