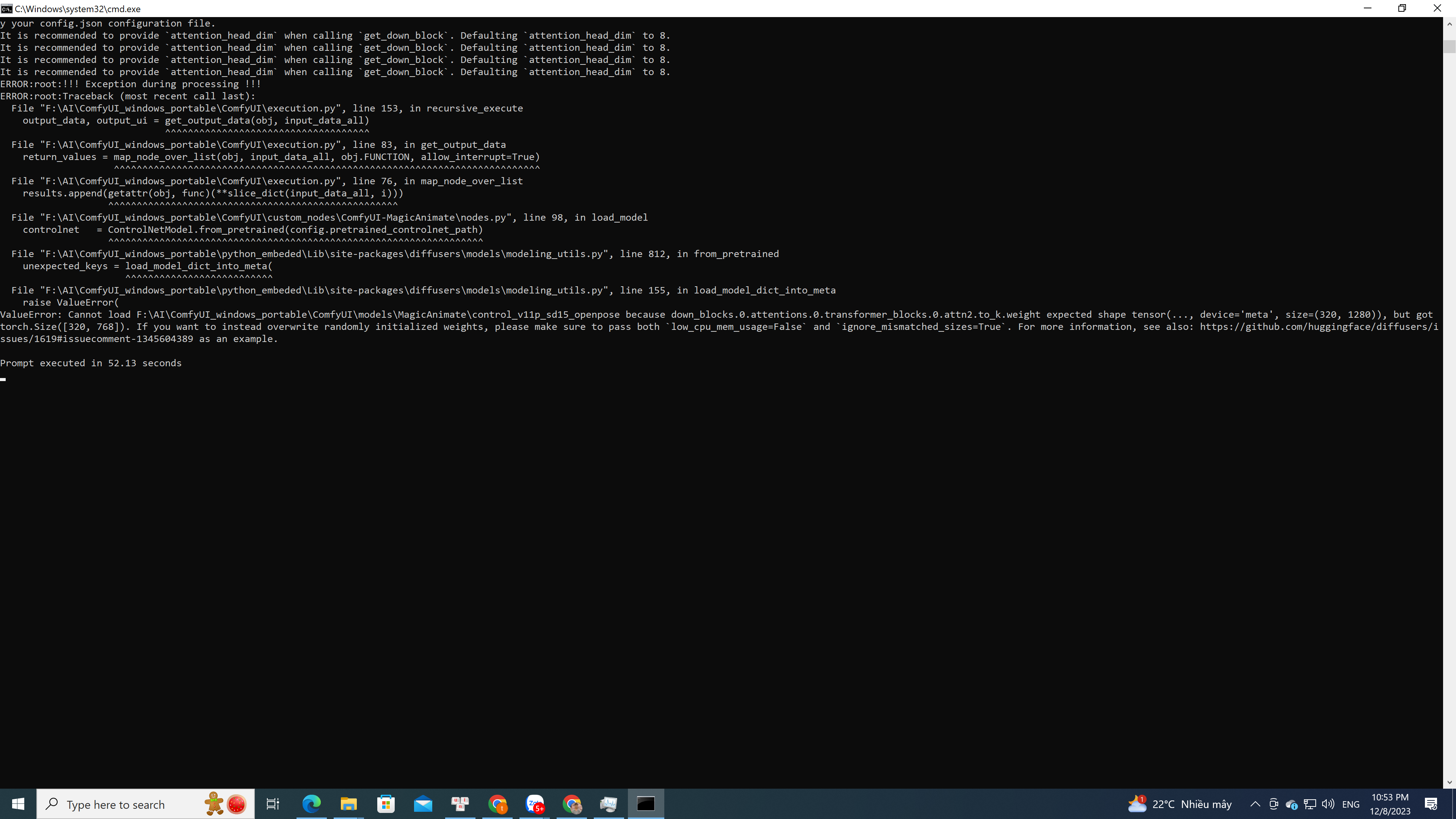

Any idea what this error is?

`low_cpu_mem_usage=False, ignore_mismatched_sizes=True`

The same.