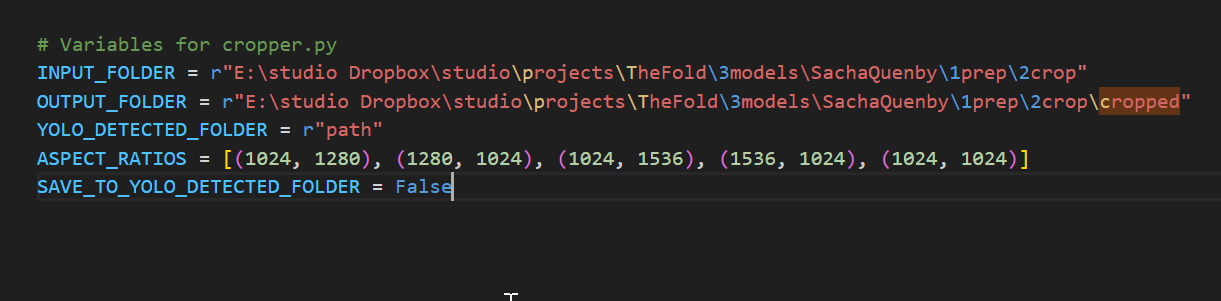

@Dr. Furkan Gözükara yolov8 is out, should we be using this model in auto-cropper instead of the yolov7 in your download?

Are you ready to embark on a journey of installing AI libraries and applications with ease? In this video, we'll guide you through the process of installing Python, Git, Visual Studio C++ Compile tools, and FFmpeg on your Windows 10 machine.

We'll also show you ho...