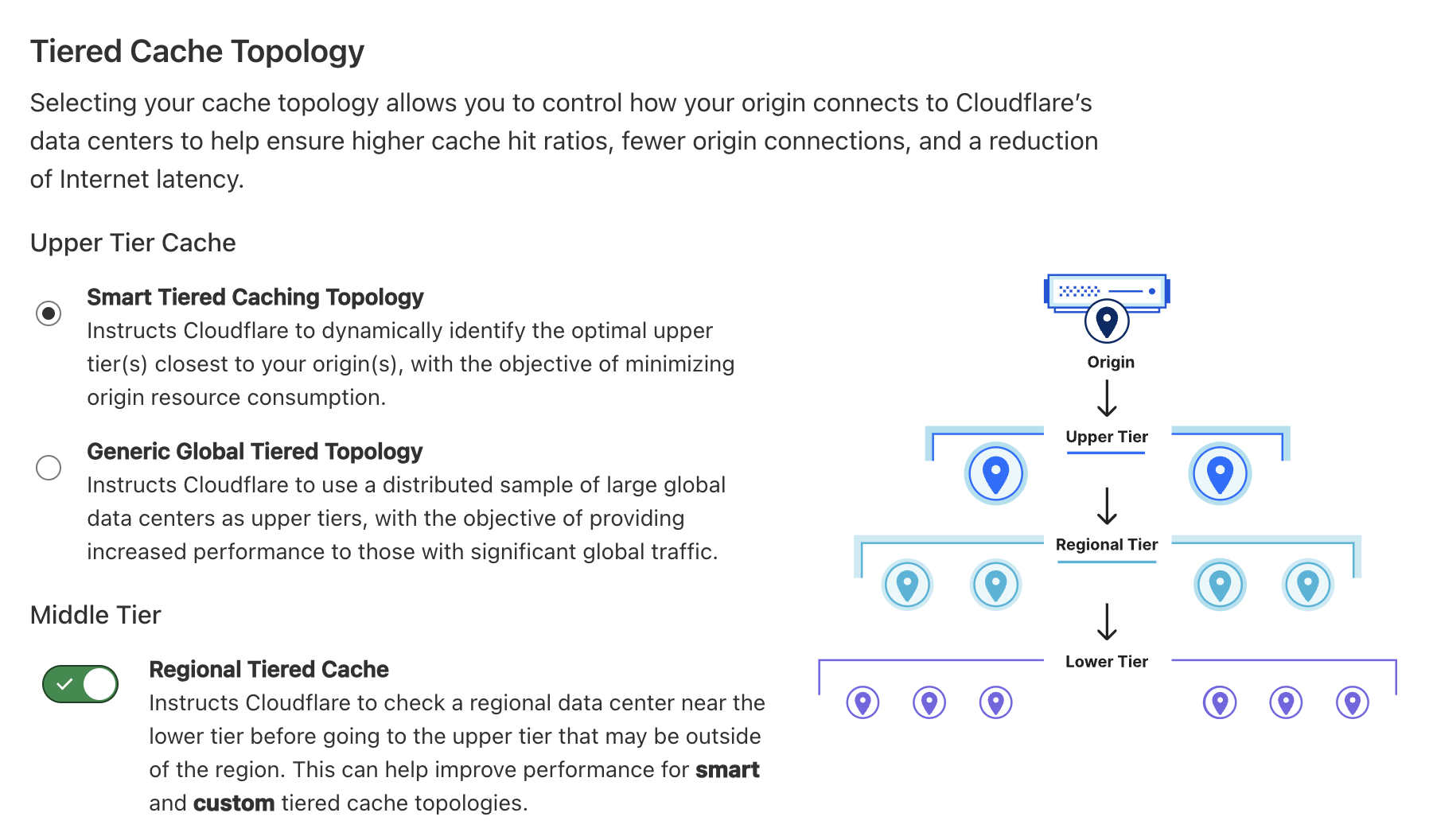

Ah yes, that brings up another question! For my use case, caching S3 subrequests to a single Backblaze, do these tiered cache settings make sense?

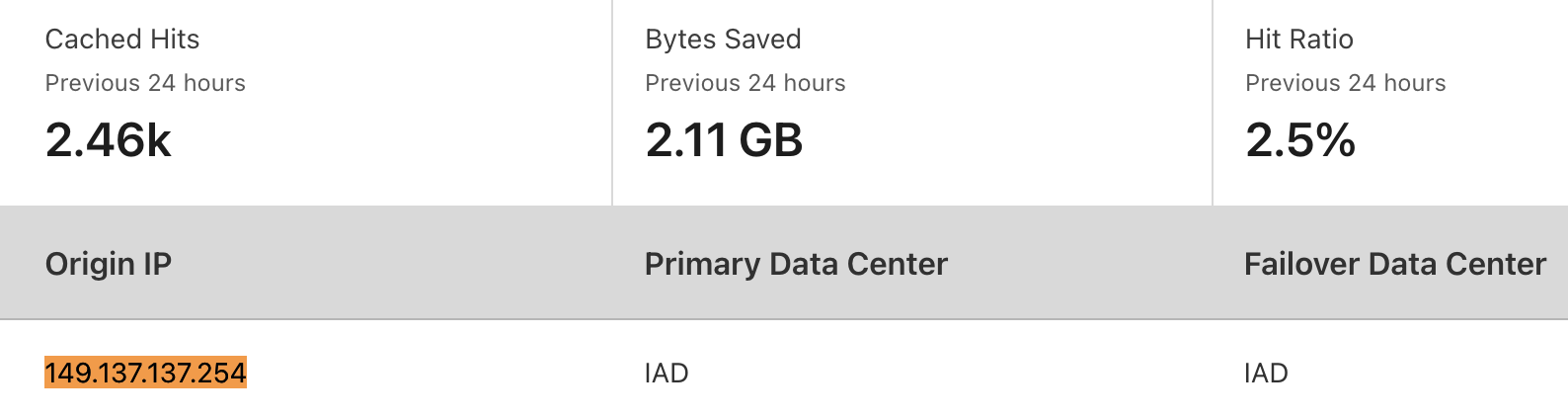

`CacheTieredFill` in logs will tell you whether it was used for a given request. You may need to inspect logs over an extended period before you find one, because if you keep hitting the same colo it's just going to serve from the local colo cache.

`UpperTierColoID` will tell you which other colo the cache was fetched from, if not the local one.
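A rough sketch of that log inspection, assuming the HTTP request logs are exported as newline-delimited JSON and carry the `CacheTieredFill` and `UpperTierColoID` fields. The helper name `tallyUpperTiers` and the trimmed-down `HttpLogLine` shape are made up for illustration:

```typescript
// Only the two fields discussed above; a real log line carries many more.
interface HttpLogLine {
  CacheTieredFill: boolean;
  UpperTierColoID: number;
}

// Count how many requests were filled through tiered cache, grouped by
// the upper-tier colo that served them. Assumes one JSON object per line.
function tallyUpperTiers(ndjson: string): Map<number, number> {
  const counts = new Map<number, number>();
  for (const line of ndjson.split("\n")) {
    if (line.trim() === "") continue; // skip blank lines
    const entry = JSON.parse(line) as Partial<HttpLogLine>;
    if (entry.CacheTieredFill !== true) continue; // served without a tiered fill
    const colo = entry.UpperTierColoID ?? 0;
    counts.set(colo, (counts.get(colo) ?? 0) + 1);
  }
  return counts;
}
```

An empty map after a short sampling window would not necessarily mean tiered cache is off, since repeated hits on one colo are served from its local cache.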

I'd expect `cf.worker.upstream_zone` to be supported, but if it isn't then no.

If I set `"Cache-Control": "no-cache, no-store, no-transform"` on my fetch request in a Worker, will that bypass the Cache Rules?
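For reference, the request being asked about would look roughly like this. It is only a sketch of how the header gets attached to a subrequest; `buildNoCacheRequest` is a hypothetical helper, and nothing here shows whether Cache Rules honor or ignore the header:

```typescript
// Build a subrequest that carries the Cache-Control header from the question.
// The URL is whatever origin object the Worker wants to fetch.
function buildNoCacheRequest(url: string): Request {
  return new Request(url, {
    headers: {
      "Cache-Control": "no-cache, no-store, no-transform",
    },
  });
}

// Inside a Worker's fetch handler this would be used as:
//   const response = await fetch(buildNoCacheRequest(originUrl));
```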

✘ [ERROR] Error: Promise will never complete.

✘ [ERROR] *** Received signal #11: Segmentation fault: 11

stack:

✘ [ERROR] Error in ProxyController: Error inside ProxyWorker

{
  name: 'Error',
  message: 'Network connection lost.',
  stack: 'Error: Network connection lost.'
}

const operation_json = await operation_result.json() as any;
if (operation_json.result === "win") {
  const filename = cacheKey.pathname.split("/").pop() ?? "undefined.pdf";
  const filename_json = filename.replace(/\.[^/.]+$/, "") + ".json";
  const operation_json_str = JSON.stringify(operation_json, null, 2);
  const custom_res = new Response(operation_json_str);
  const compressedReadableStream = custom_res.body!.pipeThrough(
    new CompressionStream('gzip')
  );
  // ...
}
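To check the compression step in isolation, here is a minimal standalone sketch of the same `CompressionStream` pattern, plus a round trip through `DecompressionStream`. The function names (`toJsonFilename`, `gzipJson`, `gunzipToText`) are hypothetical and not part of the snippet above; the sketch assumes a runtime with the standard `Response`, `CompressionStream` and `DecompressionStream` globals:

```typescript
// Swap the final extension for ".json", using the same regex as the snippet above.
function toJsonFilename(filename: string): string {
  return filename.replace(/\.[^/.]+$/, "") + ".json";
}

// Serialize a value as pretty-printed JSON and gzip it.
async function gzipJson(value: unknown): Promise<ArrayBuffer> {
  const json = JSON.stringify(value, null, 2);
  const compressed = new Response(json).body!.pipeThrough(
    new CompressionStream("gzip")
  );
  // Wrapping the stream in a Response is a convenient way to drain it fully.
  return new Response(compressed).arrayBuffer();
}

// Reverse of gzipJson: decompress gzip bytes back into text.
async function gunzipToText(bytes: ArrayBuffer): Promise<string> {
  const decompressed = new Response(bytes).body!.pipeThrough(
    new DecompressionStream("gzip")
  );
  return new Response(decompressed).text();
}
```

Draining the stream into a buffer like this is fine for small JSON payloads; for large bodies it may be preferable to pass the compressed stream along without buffering.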