On HTTP pull: are there any updates on landing this in the beta?

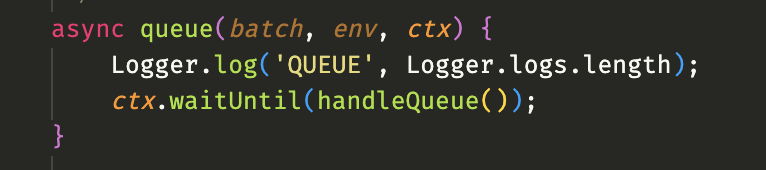

`queueLogger` is a simple ES6 module I wrote myself.

`json` `v8` default. This will improve compatibility with pull-based consumers when we release them. 2024-03-04

`{ task_id, subtask_data }`, and when I call `sendBatch`, all messages in the batch have the same `task_id`. Can I then rely on all messages the consumer receives in a batch having the same `task_id`? Or will retries or max batch sizes interfere with this?
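
Whatever the answer turns out to be, a consumer can avoid depending on it by grouping each delivered batch by `task_id` itself. Below is a minimal sketch of that idea, assuming each message exposes the `{ task_id, subtask_data }` payload on a `body` property. `groupByTaskId` and the sample batch are hypothetical names and data for illustration, not part of any queue API.

```javascript
// Hypothetical helper: groups message payloads by task_id so the consumer
// never relies on a delivered batch containing a single task_id.
// Assumption: each message carries its payload on a `body` property.
function groupByTaskId(messages) {
  const groups = new Map();
  for (const message of messages) {
    const { task_id, subtask_data } = message.body;
    if (!groups.has(task_id)) {
      groups.set(task_id, []);
    }
    groups.get(task_id).push(subtask_data);
  }
  return groups;
}

// Hypothetical batch that mixes two tasks, used only to exercise the helper.
const batch = [
  { body: { task_id: "a", subtask_data: 1 } },
  { body: { task_id: "b", subtask_data: 2 } },
  { body: { task_id: "a", subtask_data: 3 } },
];

const groups = groupByTaskId(batch);
for (const [taskId, subtasks] of groups) {
  console.log(taskId, subtasks);
}
```

With this in place, the consumer handles each `task_id` group on its own, so a mixed batch is processed the same way as a uniform one.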
