I seem to get an Internal Server Error regardless of the model used. Your (?) public deployment works, though.

[wrangler:err] InferenceUpstreamError: {"errors":[{"message":"Server Error","code":1000}],"success":false,"result":{},"messages":[]}

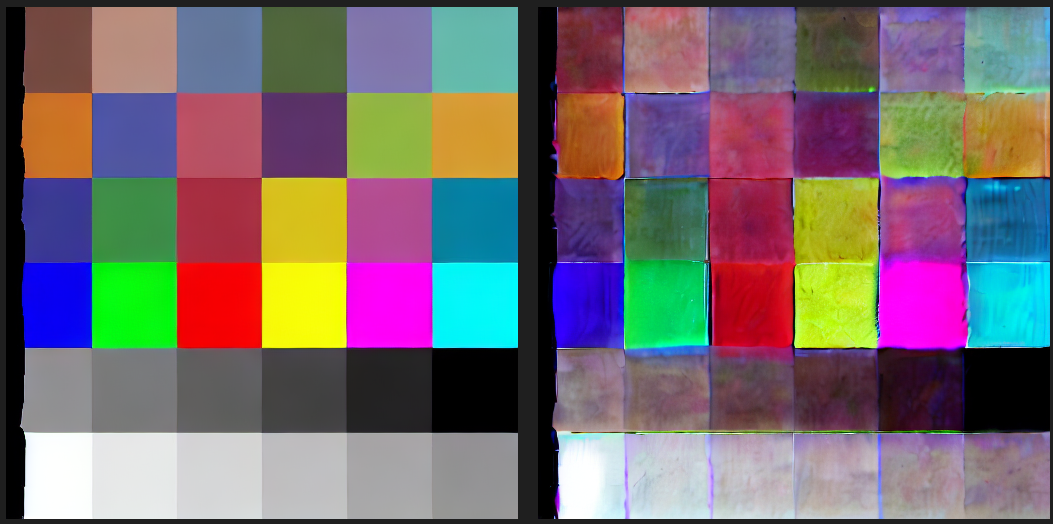

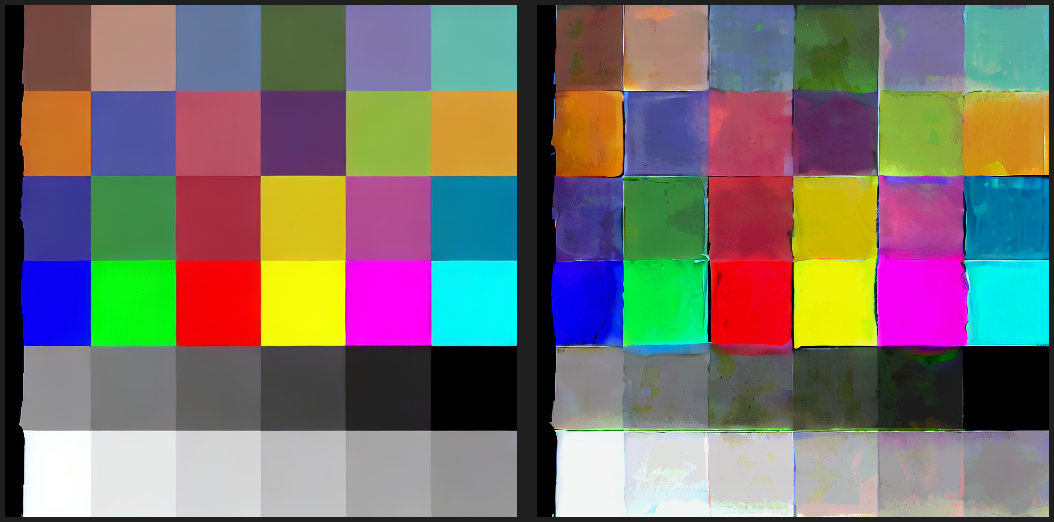

@cf/runwayml/stable-diffusion-v1-5-inpainting (and @cf/runwayml/stable-diffusion-v1-5-img2img):

I found this https://github.com/AUTOMATIC1111/stable-diffusion-webui/issues/410 which seems to match my own testing.

mistral-7b-instruct-v0.2 via the Workers REST API?

@hf/thebloke/deepseek-coder-6.7b-instruct-awq: It appears there is a character limit on the response (around 800 chars). Is this a known limit? Is there any way to get the full response?

max_tokens property in the request. But I don't see it mentioned anywhere else, so it might not work...
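For anyone who wants to try the max_tokens idea over the REST API, here is a minimal sketch that only builds the request and does not send it. The endpoint shape is how I understand the Workers AI REST API; `buildRunRequest`, `ACCOUNT_ID`, `API_TOKEN`, the prompt, and the value 1024 are placeholders made up for illustration. Whether the model actually honors `max_tokens` is still the open question from above.

```typescript
// Sketch only: builds (but does not send) a Workers AI REST request.
// buildRunRequest is a hypothetical helper, not part of any Cloudflare SDK.
interface RunRequest {
  url: string;
  init: { method: string; headers: Record<string, string>; body: string };
}

function buildRunRequest(
  accountId: string, // placeholder: your Cloudflare account ID
  apiToken: string, // placeholder: an API token allowed to call Workers AI
  model: string,
  prompt: string,
  maxTokens?: number, // assumption: forwarded as `max_tokens`, may be ignored
): RunRequest {
  const payload: Record<string, unknown> = { prompt };
  if (maxTokens !== undefined) {
    payload["max_tokens"] = maxTokens;
  }
  return {
    url: `https://api.cloudflare.com/client/v4/accounts/${accountId}/ai/run/${model}`,
    init: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${apiToken}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify(payload),
    },
  };
}

const exampleRequest = buildRunRequest(
  "ACCOUNT_ID",
  "API_TOKEN",
  "@hf/thebloke/deepseek-coder-6.7b-instruct-awq",
  "Write a quicksort in Python.",
  1024,
);
// To actually send it: await fetch(exampleRequest.url, exampleRequest.init)
console.log(exampleRequest.url);
```

Comparing the response length with and without `max_tokens` should show quickly whether the ~800 character cutoff moves.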

3/25/24, 7:31 PM

npm i @cloudflare/ai. There are 3 other projects in the npm registry using @cloudflare/ai.

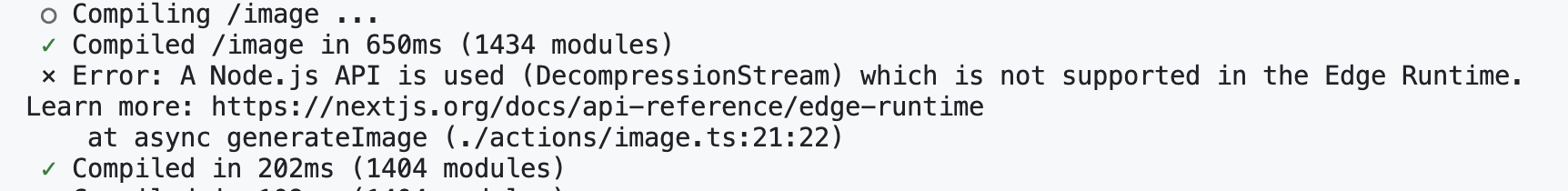

@cloudflare/next-on-pages @cloudflare/ai, so maybe it's because there is no nodejs_compat

POST https://***.pages.dev/api/chat - Ok @ 3/24/2024, 9:34:08 PM

(error) Error: Ai binding is undefined. Please provide a valid binding.
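One way to narrow down the "Ai binding is undefined" error is to check the binding before constructing the client and log which bindings the runtime actually exposes. A rough sketch, assuming the binding is named `AI` (use whatever name your config declares); `requireBinding` and `fakeEnv` are hypothetical names for illustration, and in a real handler `env` comes from the runtime.

```typescript
// Sketch only: fail early with a descriptive message when a binding is missing.
// requireBinding is a hypothetical helper, not part of @cloudflare/ai.
function requireBinding<T>(env: Record<string, unknown>, name: string): T {
  const binding = env[name];
  if (binding === undefined || binding === null) {
    const available = Object.keys(env).join(", ") || "(none)";
    throw new Error(
      `Binding "${name}" is undefined. Available bindings: ${available}`,
    );
  }
  return binding as T;
}

// Stand-in env object with no AI binding, to show the failure message.
const fakeEnv: Record<string, unknown> = { KV: {} };
try {
  requireBinding(fakeEnv, "AI");
} catch (err) {
  console.log((err as Error).message);
  // prints: Binding "AI" is undefined. Available bindings: KV
}
```

If the list of available bindings comes back empty on Pages, that points at the project's binding configuration rather than at the model call.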
