Drizzle ORM is a lightweight and performant TypeScript ORM with developer experience in mind.

ty worked

Are you able to use the limit request form to get increased per database now? I’m happy with no replicas as a tradeoff, just want to be future proof with more storage.

All existing databases (if you have a paid Workers plan) can grow to 10GB each - https://developers.cloudflare.com/d1/platform/changelog/#d1-is-generally-available

Cloudflare Docs

D1 is now generally available and production ready. Read the blog post for more details on new features in GA and to learn more about the upcoming D1 …

Are you able to request a limit beyond that or is it still a hard-coded value like in the beta?

We don’t currently increase that limit - it’s not that it’s hard coded, it’s that we have a very (very) high bar for performance & cold starts.

We’re continuing to work on raising it over time - and as we do it’ll just work. But also unlikely a single D1 DB will be 100GB (which is a large transactional DB by any standard)

We’re continuing to work on raising it over time - and as we do it’ll just work. But also unlikely a single D1 DB will be 100GB (which is a large transactional DB by any standard)

Keep in mind that a 10GB database storing user records @ 1KB per row = 10M users.

That's fair yeah, we're considering a serverless migration at work and our current DB is 108GB (we can't split by user with our use case) so size is the issue

does SQLite View improve query speed? I'm not planning to join view with anything else

Same here. I tried specify a region and it works.

@geelen

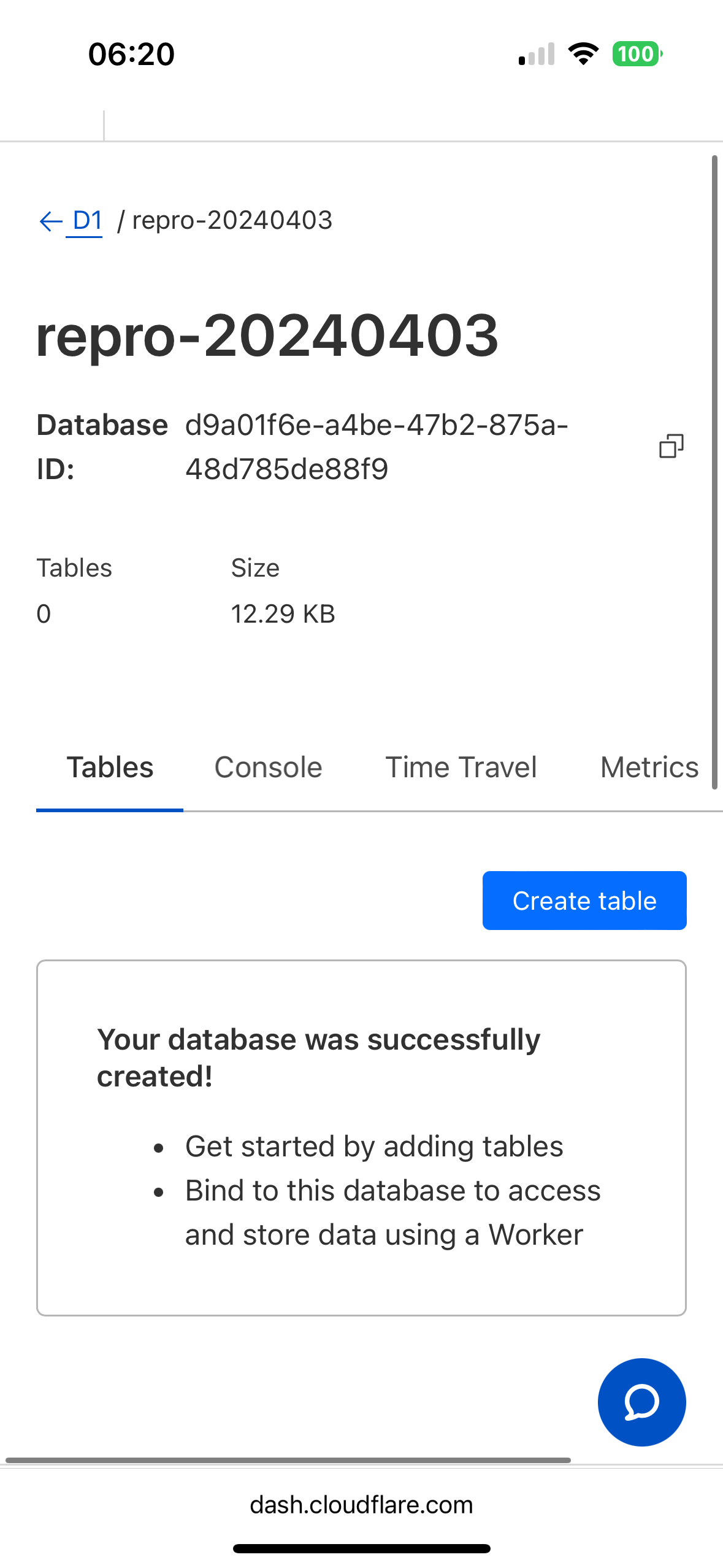

Are you still seeing this? I was able to create a new DB just now.

OK, looking into it. My new DB was automatic (based on your previous comment).

Hi guys, is there anyway to turn off foreign key check while migrating the database? Some table altering tasks needs table to be dropped, which will cascade referenced tables.

A sample scenario was mentioned in this issue https://github.com/cloudflare/workers-sdk/issues/5438

GitHub

Some table altering tasks in SQLite cannot be achieved without replacing it with a new table (by dropping - recreating the table) (like adding foreign keys, changing primary keys, updating column t...

checkout https://developers.cloudflare.com/d1/build-with-d1/foreign-keys/#defer-foreign-key-constraints

Cloudflare Docs

D1 supports defining and enforcing foreign key constraints across tables in a database.

This works with table creating or importing but not for table dropping. Matt also suggested me to bracketing the migration by that pragma but sadly it didn't work

Got another question, there's still no way to dynamically bind to databases correct? I.e. bind to all 50k DBs from a Worker instead of the ~5k that'll fit in wrangler.toml? I believe you can use the API, but that kind of defeats the purpose.

Correct

Yeah I think it's the only way to add foreign key or change column type, but whenever I drop the table, all records in tables which reference to the table dropped before are gone too because of cascading on delete.

My current approach is store the child table data to a temp table then insert it again after migrating the parent table. But when my child table also has some more children, the task turns out to be complex and wasteful

Thank you!

The REST API is significantly slower than a Worker, would recommend a simple wrapper over a Worker instead: https://github.com/elithrar/http-api-d1-example

Can you try one more time when you're back online?

Is it possible to share a d1 database between worker and pages locally?

I'm symlinking the wrangler folder, it seems to work but was curious if there is a better way

we are working on making it faster tho

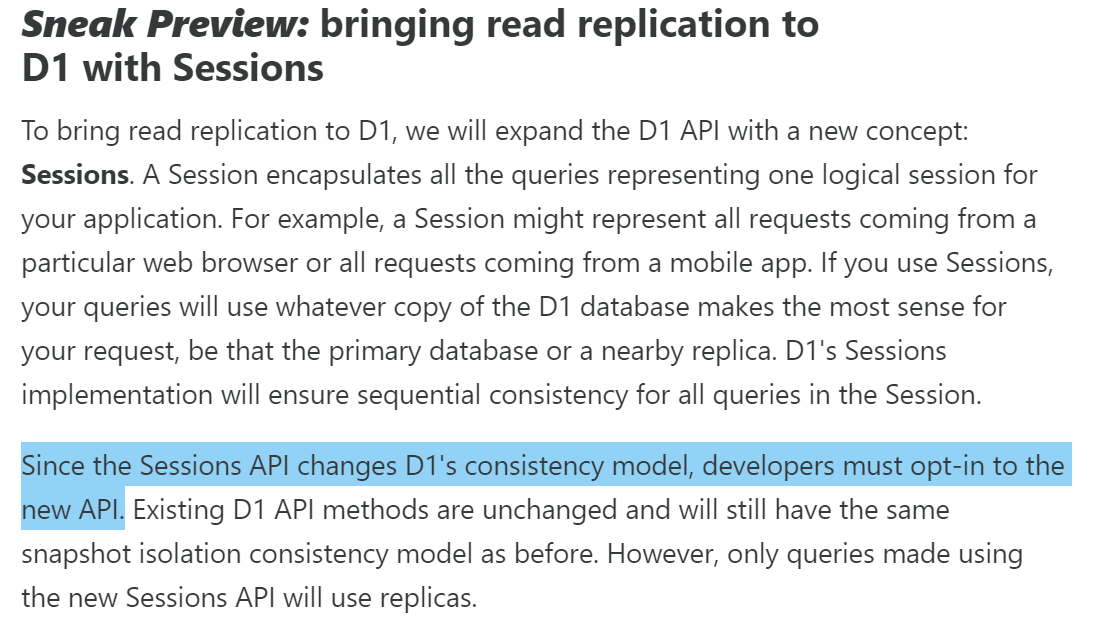

I am trying to understand how session-based consistency would work in an application such as SvelteKit and the data invalidation methods. I understand the example of consistency when executing multiple queries in the same function, but how can I guarantee that the next reload of the page will be up to date? Does anyone have any examples of this?

Yes, but how can I know that the read replicas are caught up at that point

Cloudflare Docs

Create, develop, and deploy your Cloudflare Workers with Wrangler commands.

Looks like Oceania/Australia DOs are online! https://where.durableobjects.live/colo/SYD/

Now the question is, when will we get the D1 Location Hint for Oceania? ;p

Now the question is, when will we get the D1 Location Hint for Oceania? ;p

Where Durable Objects Live

Tracking where Durable Objects are created, wherever you are in the world.

(I tried using the api and not the dash with

oc and it still rejects it lol)There is now, it was just released?

Does anyone have a comparison of D1 vs Turso?

it's an upcoming feature which breaks compatibility with previous API

It doesn't break compatibility with the old API, you can still use the old API just fine. It's just that there is no change to behaviour of the "old" API when the new API is introduced

So you can use both at the same time? Just one will make use of the deployed replicas while the other won't?

I can't confirm whether you'll be able to use them simultaneously, but D1 is a GA product, so I would not expect any of the current APIs to change in a backwards-incompatible way.

If theres any changes it will be guarded by a compat date/flag