How to fix error: No such model @cf/meta/llama-3-8b-instruct or task? I want to launch a text chat bot as an online assistant. It seems to me that this model is better suited, but it cannot be used due to an error.

Sounds like you're using the @cloudflare/ai npm package. It's deprecated, so use the native binding to be able to access newly added models. See https://developers.cloudflare.com/workers-ai/changelog/.

how to make this work with cf embeddings instead of openai? any blog or guide?

i got this code from https://blog.cloudflare.com/langchain-and-cloudflare

has CLIP already been requested

How to avoid this error for llama-3-8b-instruct?

must have required property 'prompt', must NOT have more than 6144 characters, must match exactly one schema in oneOf

Can anyone provide an example of the correct typescript code to check the prompt size and trim it? I use the messages array, which I pass to the llm model call; in this array there is an arbitrary number of nested arrays with the keys “user/system/assistant” and “content”.

https://developers.cloudflare.com/workers-ai/fine-tunes/

Any information or predictions on when LoRA Fine Tuning for llama-3-8b-instruct will be available?


afaik it's not documented, but I run into that error if any single message in the messages array has more than ~6144 characters. There's also a total token limit where the model (at least with llama-3-8b-instruct) will stop generating. Seems to be somewhere around 2700-2800 tokens.
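A minimal sketch of the check-and-trim that was asked for, assuming the two limits observed above (about 6144 characters per message and roughly 2700 tokens in total). Neither number is documented, so both are plain constants you can change. The `trimMessages` and `estimateTokens` names are hypothetical, and the "4 characters per token" estimate is a rough assumption, not the model's real tokenizer.

```typescript
type Role = "system" | "user" | "assistant";

interface ChatMessage {
  role: Role;
  content: string;
}

// Observed (undocumented) limits from the message above; adjust as needed.
const MAX_CHARS_PER_MESSAGE = 6144;
const MAX_TOTAL_TOKENS = 2700;

// Rough heuristic (assumption): about 4 characters per token.
function estimateTokens(text: string): number {
  return Math.ceil(text.length / 4);
}

function trimMessages(
  messages: ChatMessage[],
  maxChars: number = MAX_CHARS_PER_MESSAGE,
  maxTokens: number = MAX_TOTAL_TOKENS,
): ChatMessage[] {
  // 1. Cut every single message down to the per-message character limit.
  const capped = messages.map((m) => ({
    ...m,
    content: m.content.slice(0, maxChars),
  }));

  // 2. System messages are always kept and moved to the front.
  const system = capped.filter((m) => m.role === "system");
  let budget =
    maxTokens - system.reduce((sum, m) => sum + estimateTokens(m.content), 0);

  // 3. Walk the rest from newest to oldest and keep what fits in the budget.
  const kept: ChatMessage[] = [];
  for (const m of [...capped].reverse()) {
    if (m.role === "system") continue;
    const cost = estimateTokens(m.content);
    if (cost > budget) break;
    budget -= cost;
    kept.unshift(m);
  }
  return [...system, ...kept];
}
```

You would then pass `trimMessages(messages)` as the `messages` field of the model call. Dropping the oldest turns first is only one possible policy; summarizing old turns would be another.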

You can swap out OpenAIEmbeddings with CloudflareWorkersAIEmbeddings. Definitely not a guide, but.. well, here's an example: https://gist.github.com/Raylight-PWL/50d7a20193874c6c784759001bb26e5c.
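In case it helps to see what such a swap does under the hood, here is a rough sketch that calls a Workers AI embedding model through the native binding and exposes the same `embedDocuments` / `embedQuery` pair that LangChain embedding classes offer. The `WorkersAiEmbedder` class, the `AiBinding` interface, the `toBatches` helper and the batch size of 50 are all hypothetical names and values for illustration; in a real LangChain app you would use `CloudflareWorkersAIEmbeddings` as mentioned above.

```typescript
// Minimal shape of the AI binding as used here (assumption for this sketch;
// the real binding type ships with @cloudflare/workers-types).
interface AiBinding {
  run(
    model: string,
    inputs: { text: string[] },
  ): Promise<{ shape: number[]; data: number[][] }>;
}

// Split a list into chunks of at most `size` items.
function toBatches<T>(items: T[], size: number): T[][] {
  const batches: T[][] = [];
  for (let i = 0; i < items.length; i += size) {
    batches.push(items.slice(i, i + size));
  }
  return batches;
}

// Hypothetical helper, not part of any library.
class WorkersAiEmbedder {
  private readonly ai: AiBinding;
  private readonly model: string;
  private readonly batchSize: number;

  constructor(ai: AiBinding, model = "@cf/baai/bge-base-en-v1.5", batchSize = 50) {
    this.ai = ai;
    this.model = model;
    this.batchSize = batchSize;
  }

  // Embed many texts, one request per batch, preserving input order.
  async embedDocuments(texts: string[]): Promise<number[][]> {
    const vectors: number[][] = [];
    for (const batch of toBatches(texts, this.batchSize)) {
      const res = await this.ai.run(this.model, { text: batch });
      vectors.push(...res.data);
    }
    return vectors;
  }

  // Embed a single query string.
  async embedQuery(text: string): Promise<number[]> {
    const vectors = await this.embedDocuments([text]);
    return vectors[0];
  }
}
```

Inside a Worker you would construct it with `new WorkersAiEmbedder(env.AI)`, assuming the binding is named `AI` in your wrangler config.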

Hello I am thinking of publishing an app with workers-ai API. but I am concerned about rate limits.

can my app make thousands of requests per minute?


please help

Thanks it worked :MeowHeartCloudflare:

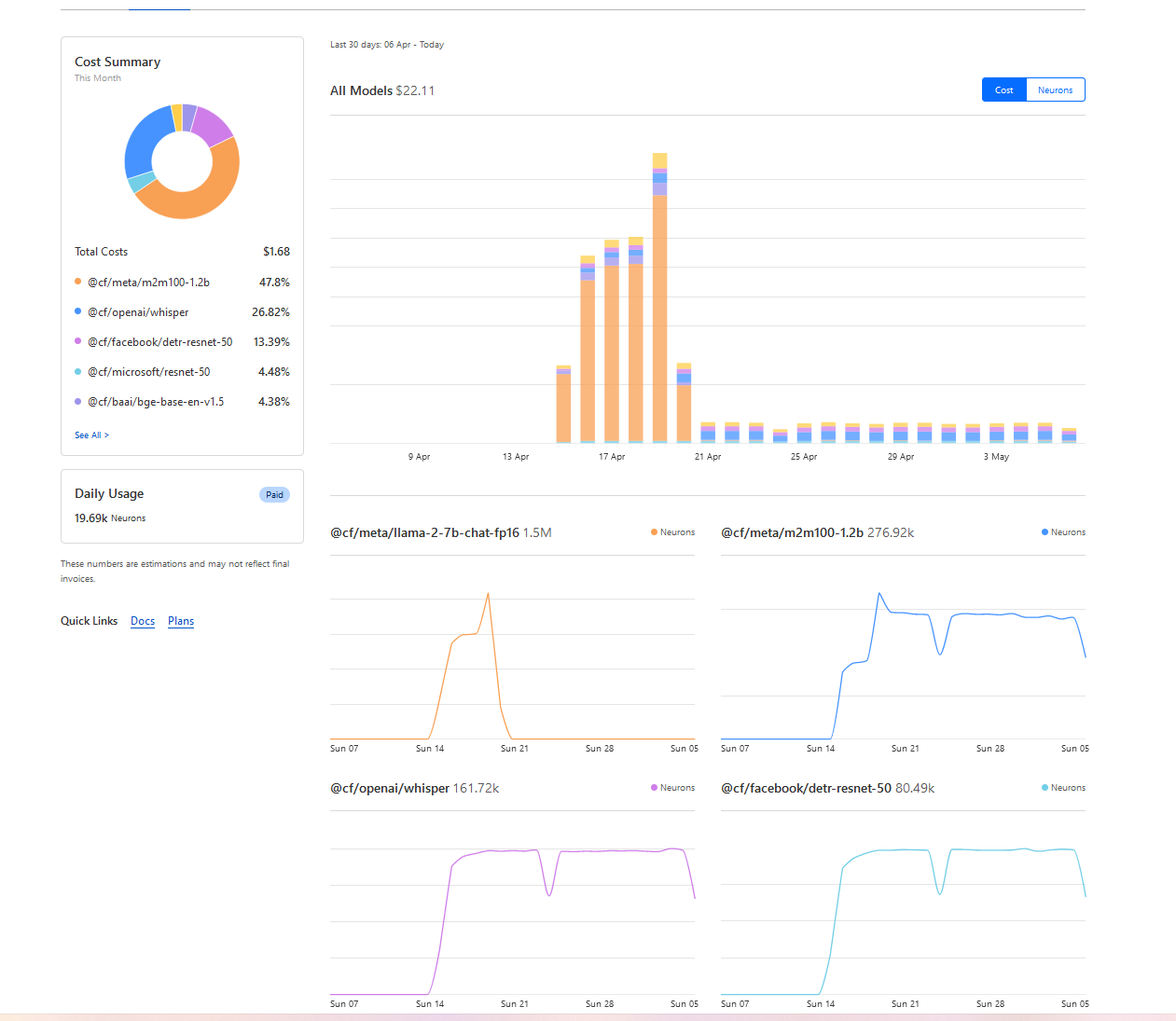

Great! Beware that the example with the Brooklyn article consumes a lot of neurons.

yeah it took 550+ neurons but i am in free tier

were those from llm or while generating embeddings?

but how do I handle more requests than the limits specified?

hi, I try to implement the Langchain pdf loader into my application. But if I call loader.load() I always get the error message: RangeError: Maximum call stack size exceeded. Does someone know what could be the cause? I have already tried it with small pdf files, but that didn't help either.

The part of this code is from the langchain repository https://github.com/jacoblee93/langchain-nuxt-cloudflare-template

Thanks


with this loader it works:

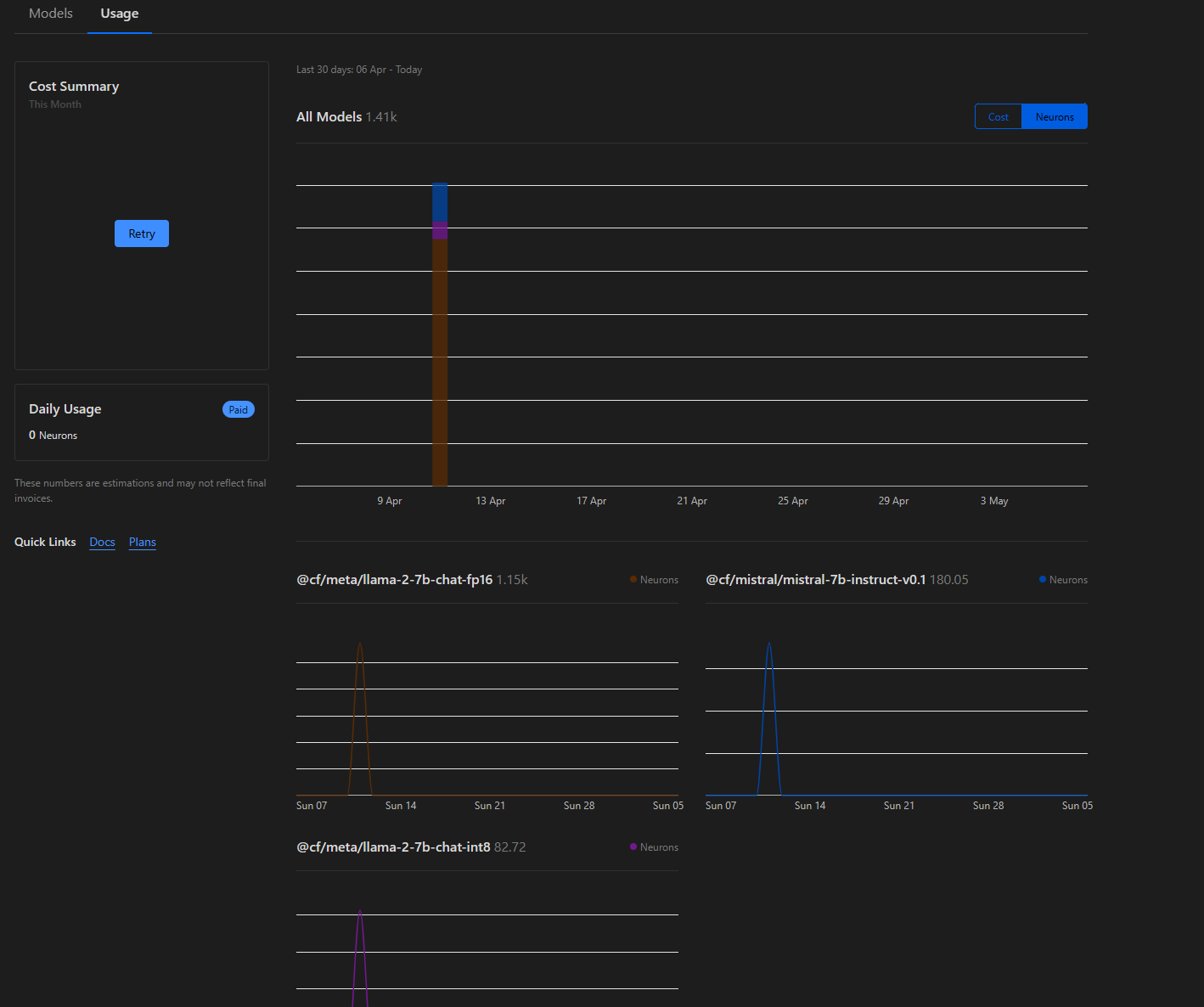

What's up with the Workers AI usage tab? Cost summary fails to load, daily usage always says 0, the "all models" section says I have only used it on April 11th, and the model-specific ones say I have only used them on April 10th. (I'm a time traveler I guess, must've gone ahead a few days, used it and broke something, my bad)

oh wait I just realized april was last month I was thinking that wouldve been this month :lul:

this month is May lol

only GA models bill you, which is some of the confusion maybe

mine looks sane

hmm

Yeah I guess I only do use the beta models atm kinda stupid to not have usage data on them

Regarding this https://developers.cloudflare.com/workers-ai/changelog/.

What is the type for TypeScript? I cannot find anything on this


They should be in @cloudflare/workers-types

Can we serve custom models on Workers AI or do we have to wait for them to get added?

And if it is not currently possible to serve custom models via Cloudflare, and it's not prohibited to discuss, what are some good options? I have a list and I'm checking it twice, but curious what people think and/or use

?workers-ai-models

Workers AI currently only supports popular open-source models provided by the Cloudflare team, as well as your own LoRAs that can be applied on top of the Cloudflare-provided models. You cannot currently upload your own models or use a model from HuggingFace. See the documentation for the list of Cloudflare-provided models: https://developers.cloudflare.com/workers-ai/models/

Hi everyone, I'm trying to use my own LoRA adapters together with @cf/mistral/mistral-7b-instruct-v0.2-lora and worker-ai.

I have already uploaded my LoRA adapters successfully, but when I try to run inference with my LoRAs, I'm getting an unknown and undocumented error:

InferenceUpstreamError: ERROR 3028: Unknown internal error

I'm using the code snippet described in the tutorial at the following link: https://developers.cloudflare.com/workers-ai/fine-tunes/loras/

    export interface Env {
      AI: any;
    }

    export default {
      async fetch(request, env): Promise<Response> {
        const response = await env.AI.run(
          "@cf/mistral/mistral-7b-instruct-v0.2-lora", //the model supporting LoRAs
          {
            messages: [{"role": "user", "content": "Hello world"}],
            raw: true,
            lora: "4e900000-0000-0000-0000-000000000",
          }
        );
        return new Response(JSON.stringify(response));
      },
    } satisfies ExportedHandler<Env>;

The code is working correctly without my LoRA adapters.

The adapter has been trained with rank <= 8 as per documentation, using Mistral-7B-Instruct-v0.2 as base model (https://huggingface.co/mistralai/Mistral-7B-Instruct-v0.2).

I know the feature is in beta, but any suggestion is appreciated.

PS:

a) The JSON in the tutorial code is not valid: there is a missing } after Hello world"

b) The suggested model in the same tutorial is not valid: the model is under the repo mistral and not mistralai. Tutorial at the following link: https://developers.cloudflare.com/workers-ai/fine-tunes/loras/#running-inference-with-loras

Also it looks like you're specifying raw: true but then providing the messages

If you do raw: true then you need to format it to ChatML yourself and provide it as an input string if I remember correctly

yes model_type is defined into the uploaded adapter_config.json
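To illustrate the raw: true point above, here is a rough sketch of turning a messages array into a single prompt string by hand. One caveat: the reply mentions ChatML, but this sketch swaps in the `[INST] ... [/INST]` layout shown on the Mistral-7B-Instruct-v0.2 model card, since that is the base model in question. The `toMistralPrompt` name is hypothetical, and folding system text into the next user turn is an assumption, not something the model card prescribes.

```typescript
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Build one prompt string in the Mistral instruct layout:
// <s>[INST] user text [/INST] assistant text</s>[INST] next user text [/INST]
function toMistralPrompt(messages: ChatMessage[]): string {
  let prompt = "<s>";
  let pendingSystem = "";
  for (const m of messages) {
    if (m.role === "system") {
      // Assumption: prepend system text to the next user instruction.
      pendingSystem += m.content + "\n\n";
    } else if (m.role === "user") {
      prompt += `[INST] ${pendingSystem}${m.content} [/INST]`;
      pendingSystem = "";
    } else {
      // Assistant turns close with the end-of-sequence marker.
      prompt += ` ${m.content}</s>`;
    }
  }
  return prompt;
}

// Possible use with the binding (not run here):
//   await env.AI.run("@cf/mistral/mistral-7b-instruct-v0.2-lora", {
//     prompt: toMistralPrompt(messages),
//     raw: true,
//     lora: "<your finetune id or name>",
//   });
```

The idea is simply that with raw: true you send `prompt` (a string) rather than `messages`, so the template has to be applied on your side.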

sorry, forgot to update in the sent message, but yes, I have tried to remove the raw property, also tried true/false using the correct message/prompt format. The result is still the same:

InferenceUpstreamError: ERROR 3028: Unknown internal error

tried also to pass the fine_tune name instead of the id, same result

maybe something wrong with my adapters , for the record they were generated via mlx_lm , no problem Inferencing with them on my local machine via mlx_lm or Ollama.

Here my adapters_config.json

    {
      "adapter_file": null,
      "adapter_path": "adapters",
      "batch_size": 5,
      "config": "lora.yml",
      "data": "./data/",
      "grad_checkpoint": false,
      "iters": 1800,
      "learning_rate": 1e-05,
      "lora_layers": 19,
      "lora_parameters": {
        "keys": [
          "self_attn.q_proj",
          "self_attn.v_proj"
        ],
        "rank": 8,
        "alpha": 16.0,
        "scale": 10.0,
        "dropout": 0.05
      },
      "lr_schedule": {
        "name": "cosine_decay",
        "warmup": 100,
        "warmup_init": 1e-07,
        "arguments": [
          1e-05,
          1000,
          1e-07
        ]
      },
      "max_seq_length": 32768,
      "model": "mistralai/Mistral-7B-Instruct-v0.2",
      "model_type": "mistral",
      "resume_adapter_file": null,
      "save_every": 100,
      "seed": 0,
      "steps_per_eval": 20,
      "steps_per_report": 10,
      "test": false,
      "test_batches": 100,
      "train": true,
      "val_batches": -1
    }

@michelle @Isaac McFadyen | YYZ01 thanks to both of you for the suggestions, appreciated

Thanks, searching "own model" I realize this is a common question. Is bring-your-own-model on the roadmap?

Also I am looking into cloud compute providers to host custom inference endpoints—does Cloudflare offer any solutions along that axis?


I'll let Michelle answer the first. As for the second, they don't currently, it's just Workers AI. I'm not sure whether it's planned but I'd doubt it since currently the focus is on improving the core Workers AI product.

Sounds good, for context there are some TTS models that run in the browser here https://hexgrad.com/ (note: may not work on iOS due to low webapp memory budget).

It's free to use but/because it runs on your own CPU. So if you want to churn through a many-hour audiobook, unless you have a solid desktop with good cooling you're probably looking at major heat issues.

Trying to find options to outsource the compute somewhere else. And also potentially combine the TTS with ASR/LLMs to produce conversational/phone agents. Not a novel idea by any stretch, but TTS tends to be a fairly expensive puzzle piece so I think both the standalone TTS and combo agents can be done at relatively competitive prices.


That website is currently hosted on Cloudflare's free tier via next-on-pages. Satisfied with the experience so far (although it is a static webpage and I saw laurent's caveats this morning)

Can I check that this issue is still current? That there's no ability to customise any LLM parameters such as temperature or output format?

https://github.com/cloudflare/cloudflare-docs/issues/11138

It was closed but to me "closed" means "we've done this" not "we're going to ignore it".


any plans to increase limits of non beta model? i mean having no limits