Can we serve custom models on Workers AI or do we have to wait for them to get added?


Calling `@cf/mistral/mistral-7b-instruct-v0.2-lora` with a LoRA adapter returns `InferenceUpstreamError: ERROR 3028: Unknown internal error`:

```ts
export interface Env {
  AI: any;
}

export default {
  async fetch(request, env): Promise<Response> {
    const response = await env.AI.run(
      "@cf/mistral/mistral-7b-instruct-v0.2-lora", // the model supporting LoRAs
      {
        messages: [{ role: "user", content: "Hello world" }],
        raw: true,
        lora: "4e900000-0000-0000-0000-000000000",
      }
    );

    return new Response(JSON.stringify(response));
  },
} satisfies ExportedHandler<Env>;
```
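Because `raw: true` skips the model's default chat template, the input then has to follow the model's own prompt format. One thing worth trying is to send a single pre-formatted `prompt` instead of `messages` whenever `raw` is set. This is a minimal sketch, assuming Mistral-7B-Instruct's `[INST] ... [/INST]` format and assuming the LoRA model accepts `prompt` together with `raw: true`; `buildLoraInput`, `formatMistralPrompt` and the `LoraInput` type are hypothetical names, not part of the Workers AI API.

```ts
// Hypothetical helpers for building the input of a LoRA request.
type ChatMessage = { role: "user" | "assistant"; content: string };

interface LoraInput {
  prompt?: string;
  messages?: ChatMessage[];
  raw?: boolean;
  lora: string; // finetune id or name
}

// Assumption: Mistral-7B-Instruct wraps user turns in [INST] ... [/INST] and
// ends assistant turns with </s>. The leading <s> BOS token is omitted on the
// assumption that the tokenizer adds it.
function formatMistralPrompt(messages: ChatMessage[]): string {
  return messages
    .map((m) => (m.role === "user" ? `[INST] ${m.content} [/INST]` : ` ${m.content}</s>`))
    .join("");
}

function buildLoraInput(messages: ChatMessage[], lora: string, raw: boolean): LoraInput {
  if (raw) {
    // No chat template is applied in raw mode, so send one pre-formatted prompt.
    return { prompt: formatMistralPrompt(messages), raw: true, lora };
  }
  // Otherwise let Workers AI apply the model's default chat template to messages.
  return { messages, lora };
}
```

In the Worker, the returned object would be passed as the second argument of `env.AI.run("@cf/mistral/mistral-7b-instruct-v0.2-lora", ...)`. Dropping `raw` instead leaves the templating to Workers AI.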

`Mistral-7B-Instruct-v0.2`, `}Hello world"`, `mistral`, `mistralai`

`raw: true`, `messages`, `raw: true`, `raw`, `InferenceUpstreamError: ERROR 3028: Unknown internal error`

```json
{
  "adapter_file": null,
  "adapter_path": "adapters",
  "batch_size": 5,
  "config": "lora.yml",
  "data": "./data/",
  "grad_checkpoint": false,
  "iters": 1800,
  "learning_rate": 1e-05,
  "lora_layers": 19,
  "lora_parameters": {
    "keys": [
      "self_attn.q_proj",
      "self_attn.v_proj"
    ],
    "rank": 8,
    "alpha": 16.0,
    "scale": 10.0,
    "dropout": 0.05
  },
  "lr_schedule": {
    "name": "cosine_decay",
    "warmup": 100,
    "warmup_init": 1e-07,
    "arguments": [
      1e-05,
      1000,
      1e-07
    ]
  },
  "max_seq_length": 32768,
  "model": "mistralai/Mistral-7B-Instruct-v0.2",
  "model_type": "mistral",
  "resume_adapter_file": null,
  "save_every": 100,
  "seed": 0,
  "steps_per_eval": 20,
  "steps_per_report": 10,
  "test": false,
  "test_batches": 100,
  "train": true,
  "val_batches": -1
}
```

`adapter_config.json`

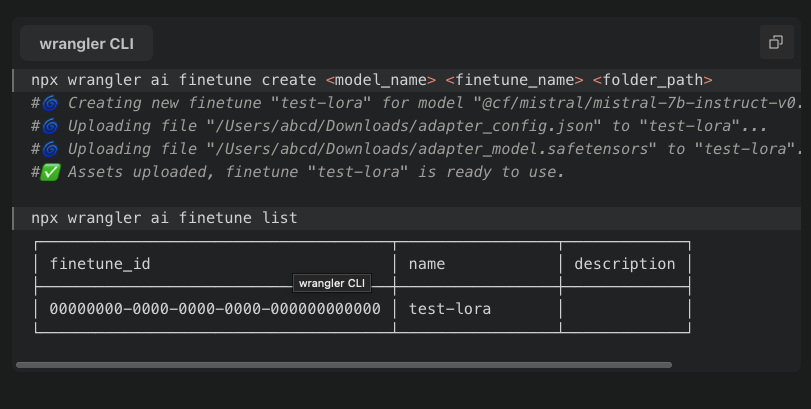

Uploading `adapter_model.safetensors` fails:

```
✘ [ERROR] 🚨 Couldn't upload file: A request to the Cloudflare API (/accounts/1111122223334444/ai/finetunes/6a4a4a4a4a4a4a4a4-a5aa5a5a-aaaaaa/finetune-assets) failed. FILE_PARSE_ERROR: 'file' should be of valid safetensors type [code: 1000], quiting...
```

`InferenceUpstreamError: ERROR 3028: Unknown internal error`
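The API rejects `adapter_model.safetensors` as not being a valid safetensors file. A quick local check of the container layout can rule out files that are not safetensors at all, such as a Git LFS pointer or an archive saved under the wrong extension. This is a minimal sketch based on the published safetensors layout (an 8-byte little-endian header length, a JSON header, then raw tensor bytes); `checkSafetensors` is a hypothetical helper, and it does not reproduce whatever additional checks the Cloudflare API performs.

```ts
// Hypothetical pre-upload check of the safetensors container layout.
// Layout: 8-byte little-endian u64 header length N, then N bytes of UTF-8
// JSON header, then the raw tensor data. It does NOT replicate Cloudflare's
// own validation; it only flags files that are structurally not safetensors.
function checkSafetensors(bytes: Uint8Array): string | null {
  if (bytes.length < 8) return "file is shorter than the 8-byte length prefix";

  // Read the u64 length as two u32 halves to avoid BigInt.
  const view = new DataView(bytes.buffer, bytes.byteOffset, bytes.byteLength);
  const headerLen = view.getUint32(4, true) * 0x100000000 + view.getUint32(0, true);
  if (8 + headerLen > bytes.length) {
    return `header length ${headerLen} exceeds the file size`;
  }

  let header: unknown;
  try {
    header = JSON.parse(new TextDecoder().decode(bytes.subarray(8, 8 + headerLen)));
  } catch {
    return "header is not valid JSON";
  }
  if (typeof header !== "object" || header === null || Array.isArray(header)) {
    return "header is not a JSON object";
  }

  // Every tensor entry needs dtype, shape and [begin, end] offsets into the data section.
  const dataLen = bytes.length - 8 - headerLen;
  for (const [name, entry] of Object.entries(header as Record<string, any>)) {
    if (name === "__metadata__") continue; // optional string-to-string metadata
    const offsets = entry?.data_offsets;
    if (
      typeof entry?.dtype !== "string" ||
      !Array.isArray(entry?.shape) ||
      !Array.isArray(offsets) ||
      offsets.length !== 2
    ) {
      return `tensor "${name}" lacks dtype, shape or data_offsets`;
    }
    const [begin, end] = offsets;
    if (typeof begin !== "number" || typeof end !== "number" || begin > end || end > dataLen) {
      return `tensor "${name}" has out-of-range data_offsets`;
    }
  }
  return null; // structurally plausible safetensors file
}
```

In Node, `readFileSync` from `node:fs` returns a `Buffer`, which is a `Uint8Array`, so the file contents can be passed in directly. A `null` result only means the container is well formed, not that Workers AI will accept the adapter.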

rank `r <= 8` or `quantization = None`. AutoTrain Advanced (huggingface/autotrain-advanced on GitHub).


`--target_modules q_proj,v_proj`
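The rank limit can be checked before uploading. This is a minimal sketch: `checkAdapterConfig` is a hypothetical helper, and the limits it encodes are assumptions taken from the thread (`r <= 8`) and from the Workers AI LoRA documentation as best understood (a `model_type` of `mistral`, `gemma` or `llama`). It reads the rank from either a PEFT-style config (`r`) or an mlx-style config like the one posted above (`lora_parameters.rank`).

```ts
// Hypothetical pre-upload check for a LoRA adapter config.
// Assumed limits (verify against the current Workers AI docs):
const MAX_RANK = 8;
const SUPPORTED_MODEL_TYPES = new Set(["mistral", "gemma", "llama"]);

interface AdapterConfig {
  r?: number; // PEFT-style rank field
  model_type?: string;
  lora_parameters?: { rank?: number }; // mlx-style rank field
}

// Returns a list of problems; an empty list means no issue was detected.
function checkAdapterConfig(cfg: AdapterConfig): string[] {
  const problems: string[] = [];

  const rank = cfg.r ?? cfg.lora_parameters?.rank;
  if (rank === undefined) {
    problems.push("no LoRA rank found (expected `r` or `lora_parameters.rank`)");
  } else if (rank > MAX_RANK) {
    problems.push(`rank ${rank} exceeds ${MAX_RANK}`);
  }

  if (cfg.model_type === undefined) {
    problems.push("missing model_type");
  } else if (!SUPPORTED_MODEL_TYPES.has(cfg.model_type)) {
    problems.push(`unsupported model_type "${cfg.model_type}"`);
  }

  return problems;
}
```

With the values from the config posted above (`model_type: "mistral"`, `lora_parameters.rank: 8`) it returns an empty list, so rank is unlikely to be the cause of that particular upload error.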

