is there no way to download the database anymore?

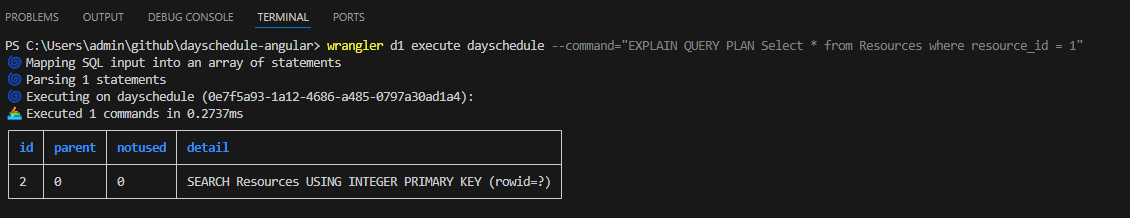

EXPLAIN QUERY PLAN

I'm getting Error: Too many API requests by single worker invocation. I know Workers has a limit of 1000 requests per invocation, but I was wondering if there is a way to insert a large number of rows with a single request, like Python's executemany command. I'm currently inserting a single row per request, as in the for loop further down. I also tried execute() on a giant SQL insert statement string.

const prepared = db.prepare("INSERT OR IGNORE INTO r2_objects_metadata (object_key, eTag) VALUES (?1, ?2)");
const queriesToRun = newBucketObjects.map(bucketObject => prepared.bind(bucketObject.Key, bucketObject.ETag));
await db.batch(queriesToRun);

[[d1_databases]]

binding = "DB" # available in your Worker on env.DB

database_name = "prod-d1-tutorial"

database_id = "<unique-ID-for-your-database>"

[[d1_databases]]

binding = "DB2" # available in your Worker on env.DB2

database_name = "prod-d1-tutorial-v2"

database_id = "<unique-ID-for-your-database>"

To execute a transaction, please use the state.storage.transaction() API instead of the SQL BEGIN TRANSACTION or SAVEPOINT statements. The JavaScript API is safer because it will automatically roll back on exceptions, and because it interacts correctly with Durable Objects' automatic atomic write coalescing.

for (let i = 0; i < newBucketObjects.length; i++) {
  const Key = newBucketObjects[i].Key;
  const ETag = newBucketObjects[i].ETag;
  await db.prepare(
    `INSERT OR IGNORE INTO r2_objects_metadata
    (object_key, eTag) VALUES (?, ?)`
  ).bind(Key, ETag).run();
}

let masiveInsert = "";

for (let i = 0; i < newBucketObjects.length; i++) {
  const Key = newBucketObjects[i].Key;
  const ETag = newBucketObjects[i].ETag;
  let insert = `INSERT OR IGNORE INTO r2_objects_metadata (object_key, eTag) VALUES ('${Key}', '${ETag}');`;
  masiveInsert += '\n';
  masiveInsert += insert;
}

await db.exec(masiveInsert);
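
For very large inputs, the prepare/bind/batch approach from earlier in the thread could be split into several smaller batches. The following is only a rough sketch, not a confirmed recommendation: chunk and insertObjectsInChunks are hypothetical helper names, the default chunk size of 100 is an arbitrary placeholder, and it assumes db is a D1 binding such as env.DB and that each batch() call counts as a single request toward the invocation limit.

```javascript
// Split an array into consecutive slices of at most `size` items.
function chunk(items, size) {
  if (!Number.isInteger(size) || size < 1) {
    throw new RangeError("size must be a positive integer");
  }
  const out = [];
  for (let i = 0; i < items.length; i += size) {
    out.push(items.slice(i, i + size));
  }
  return out;
}

// Insert rows with one db.batch() call per chunk instead of one call per row.
// Assumptions: `db` is a D1 binding (e.g. env.DB); `chunkSize` is a
// hypothetical tuning knob, not a documented limit.
async function insertObjectsInChunks(db, newBucketObjects, chunkSize = 100) {
  const prepared = db.prepare(
    "INSERT OR IGNORE INTO r2_objects_metadata (object_key, eTag) VALUES (?1, ?2)"
  );
  let batches = 0;
  for (const group of chunk(newBucketObjects, chunkSize)) {
    // Bound parameters keep keys and ETags out of the SQL text itself.
    await db.batch(group.map((o) => prepared.bind(o.Key, o.ETag)));
    batches += 1;
  }
  return batches; // number of batch() calls that were made
}
```

Inside a Worker this might be called as await insertObjectsInChunks(env.DB, newBucketObjects). Unlike the string-concatenation attempt, the values are bound rather than interpolated, so quotes inside a key cannot break the statement.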