I mean, we'd be running the additional SQL on our side if we added it


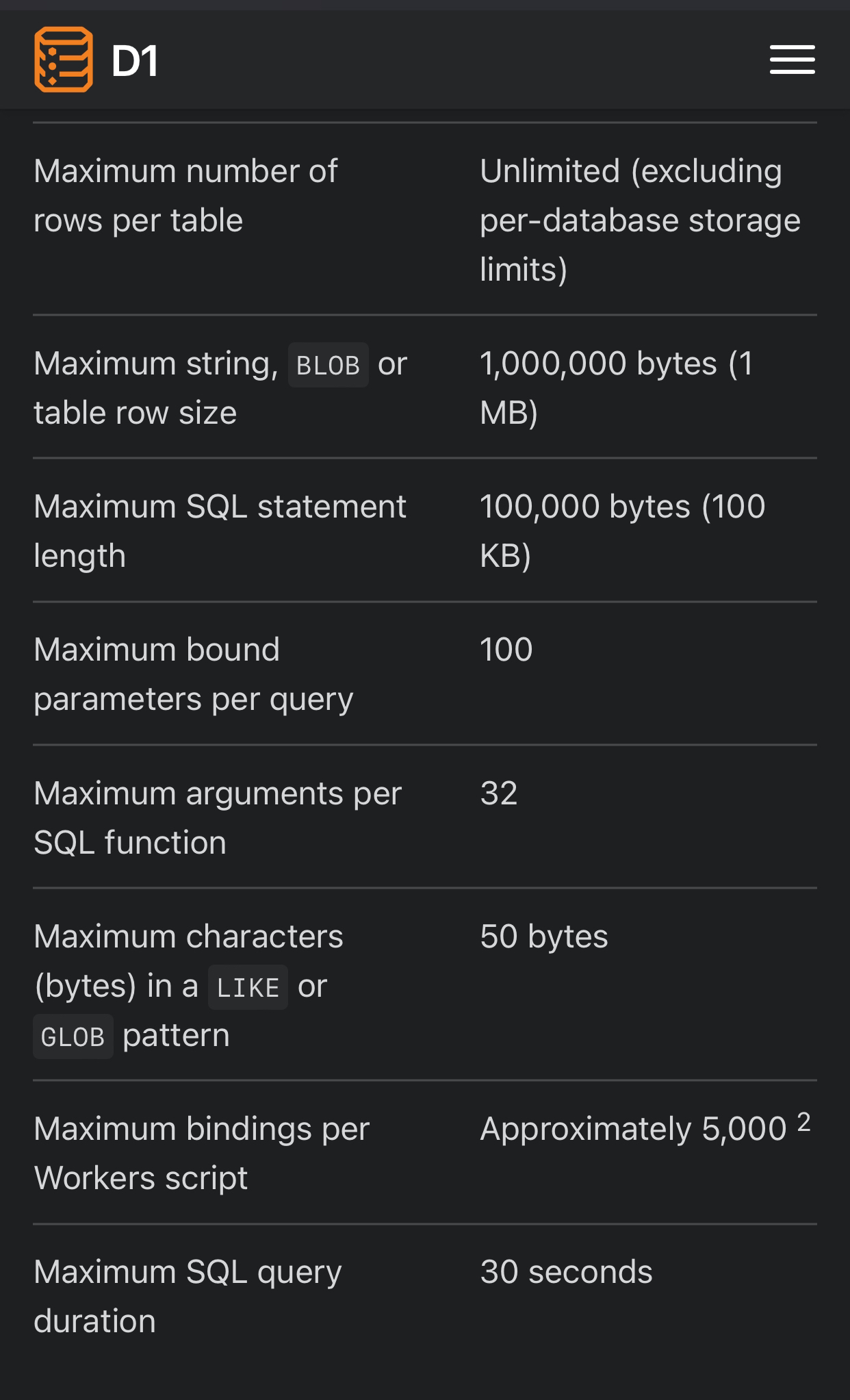

`on delete` and `on update` features because of this? Or should I dump my current db, create a new one, and upload the data?

`Error: D1_ERROR: The destination execution context for this RPC was canceled while the call was still running`: the docs just define it as `Generic error.` I'm getting it while using the `db.batch()` command, and my code had been working fine until today. Is there a limit to how many prepared statements `db.batch` can run?

`INSERT INTO services (id,name,type,created_at) VALUES ("1", "2", "3", "4") ON CONFLICT (id) UPDATE SET name = excluded.name, type = excluded.type WHERE name != excluded.name OR type != excluded.type` does not work.

`INSERT INTO services (id,name,type,created_at) VALUES ("1", "2", "3", "4") ON CONFLICT DO NOTHING`

`SELECT field1, field2, field3, field4, field5 FROM table WHERE field9 = "k" OR field9 IS NULL`

`Network connection lost.`
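The upsert that "does not work" has no `DO` after `ON CONFLICT (id)`. SQLite's upsert grammar is `ON CONFLICT (target) DO UPDATE SET ...` (or `DO NOTHING`), which is likely why it is rejected. The sketch below shows the corrected statement with bound parameters. In SQLite, double quotes denote identifiers and single quotes denote string literals, so binding values avoids that question entirely. The interfaces `DatabaseLike`/`PreparedStatement` and the helper `upsertService` are hypothetical stand-ins that mirror the `prepare().bind().run()` calls used in this thread. A D1 binding is assumed, not verified, to satisfy them structurally.

```typescript
// Hypothetical minimal shapes mirroring the prepare/bind/run chain used in this thread,
// so the sketch compiles without @cloudflare/workers-types.
interface BoundStatement {
  run(): Promise<unknown>;
}
interface PreparedStatement {
  bind(...values: unknown[]): BoundStatement;
}
interface DatabaseLike {
  prepare(sql: string): PreparedStatement;
}

interface Service {
  id: string;
  name: string;
  type: string;
  created_at: string;
}

// SQLite upsert: the conflict target must be followed by DO UPDATE (or DO NOTHING).
// In the WHERE clause, unqualified columns are the existing row and excluded.* is the
// row that failed to insert. IS NOT (unlike !=) also counts a change to/from NULL.
const UPSERT_SERVICE_SQL =
  "INSERT INTO services (id, name, type, created_at) VALUES (?, ?, ?, ?) " +
  "ON CONFLICT (id) DO UPDATE SET name = excluded.name, type = excluded.type " +
  "WHERE name IS NOT excluded.name OR type IS NOT excluded.type";

// Insert a service, or update name/type only when they actually changed.
async function upsertService(db: DatabaseLike, s: Service): Promise<unknown> {
  return db.prepare(UPSERT_SERVICE_SQL).bind(s.id, s.name, s.type, s.created_at).run();
}
```

Usage would look like `await upsertService(env.DATABASE, { id: "1", name: "2", type: "3", created_at: "4" })`, where `env.DATABASE` is an assumed name for the D1 binding.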

But great to know that you're monitoring this.

`INTEGER PRIMARY KEY`, `ROWID`, `WITHOUT ROWID`

Also, I like to prefix my IDs with a short version of the table name, e.g. an employee ID might be `emp01FHZXHK8PTP9FVK99Z66GXQTX`.
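The suffix in that example, `01FHZXHK8PTP9FVK99Z66GXQTX`, is 26 characters drawn from the Crockford base32 alphabet. That matches the ULID format: a 48-bit millisecond timestamp followed by 80 random bits. Whether the poster actually uses ULIDs is an assumption. The sketch below generates IDs of that shape. Because the timestamp comes first and is fixed-width, IDs created later sort after earlier ones as plain strings. `prefixedId`, `encodeTime` and `encodeRandom` are hypothetical helper names. Randomness comes from Web Crypto's `crypto.getRandomValues`, which is available in Workers and recent Node versions.

```typescript
// Crockford base32 alphabet used by ULIDs (no I, L, O, U). It is in ascending ASCII
// order, so fixed-width encodings compare as strings the same way as their values.
const CROCKFORD32 = "0123456789ABCDEFGHJKMNPQRSTVWXYZ";

// Encode a millisecond timestamp (< 2^48) as 10 base32 characters, most significant first.
function encodeTime(ms: number): string {
  let out = "";
  for (let i = 0; i < 10; i++) {
    out = CROCKFORD32[ms % 32] + out;
    ms = Math.floor(ms / 32);
  }
  return out;
}

// 16 random base32 characters (80 bits). 256 is a multiple of 32, so the low
// 5 bits of each uniformly random byte are themselves uniform.
function encodeRandom(): string {
  const bytes = new Uint8Array(16);
  crypto.getRandomValues(bytes);
  let out = "";
  for (let i = 0; i < bytes.length; i++) out += CROCKFORD32[bytes[i] & 31];
  return out;
}

// Hypothetical helper: short table prefix + 26-character ULID-style suffix.
function prefixedId(prefix: string, now: number = Date.now()): string {
  return prefix + encodeTime(now) + encodeRandom();
}

console.log(prefixedId("emp"));
```

Such IDs are plain `TEXT` primary keys. Within one millisecond, the order is random rather than monotonic, because this sketch does not implement the ULID monotonic-increment rule.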

```
Error: D1_ERROR: The destination execution context for this RPC was canceled while the call was still running.
    at D1Database._sendOrThrow (cloudflare-internal:d1-api:66:19)
    at async D1Database.batch (cloudflare-internal:d1-api:36:23)
    at async updateTranscripts (index.js:15762:9)
    at async Object.fetch (index.js:16415:16)
```

```ts
// SQLite's upsert clause is ON CONFLICT (...) DO UPDATE SET ...;
// without DO the statement does not parse.
const query = "INSERT INTO services (id, name, type, created_at)"
  + " VALUES (?, ?, ?, ?)"
  + " ON CONFLICT (id) DO UPDATE"
  + " SET name = excluded.name, type = excluded.type"
  + " WHERE name != excluded.name OR type != excluded.type";

const r: D1Response = await DATABASE.prepare(query).bind(id, name, type, created_at).run();
console.log("Response success: " + r.success);
console.log("Response error: " + r.error);
```

```ts
const transcriptPrepared = db.prepare(`
  INSERT INTO transcripts (
    feed_id, archive_date, archive_date_time,
    object_key, segment_start, segment_end,
    segment
  )
  VALUES (?1, ?2, ?3, ?4, ?5, ?6, ?7)
`);

// One bound statement per segment; all of them go out in a single db.batch() call.
const transcriptQueriesToRun = allSegments.map(segmentObj => transcriptPrepared.bind(
  segmentObj.feed_id,
  segmentObj.archive_date,
  segmentObj.archive_date_time,
  segmentObj.object_key,
  segmentObj.segment_start,
  segmentObj.segment_end,
  segmentObj.segment
));

console.log("transcriptQueriesToRun.length: ", transcriptQueriesToRun.length);
console.log("transcriptQueriesToRun[0] ", JSON.stringify(transcriptQueriesToRun[0]));

if (transcriptQueriesToRun.length > 0) {
  try {
    await db.batch(transcriptQueriesToRun);
  } catch (e: unknown) {
    const message = e instanceof Error ? e.message : String(e);
    console.error({ message });
    // Return a 500 for any thrown value, not only Error instances,
    // so a failed batch never falls through as if it had succeeded.
    return new Response(JSON.stringify({
      "error": message,
      "traceback": e instanceof Error ? e.stack : undefined
    }), {
      status: 500,
      headers: getCorsHeaders()
    });
  }
}
```
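The thread does not say what the `db.batch()` limit is, and it does not establish that batch size causes the "execution context was canceled" error. One common mitigation to try is sending the statements in smaller consecutive batches instead of one large call. The sketch below does that. The chunk size of 100 is an arbitrary assumption, and `BatchRunner`, `chunk` and `batchInChunks` are hypothetical names. A D1 binding is assumed to satisfy `BatchRunner` structurally. Note the trade-off: D1 documents each `batch()` call as a transaction, so with chunking a failure part-way through leaves earlier chunks committed.

```typescript
// Hypothetical minimal shape of the batch call used above.
interface BatchRunner<S> {
  batch(statements: S[]): Promise<unknown[]>;
}

// Split an array into consecutive slices of at most `size` elements.
function chunk<T>(items: T[], size: number): T[][] {
  if (!Number.isInteger(size) || size < 1) {
    throw new RangeError("size must be a positive integer");
  }
  const out: T[][] = [];
  for (let i = 0; i < items.length; i += size) out.push(items.slice(i, i + size));
  return out;
}

// Run the statements as several smaller batches, one after another, and
// concatenate the per-statement results in the original order.
async function batchInChunks<S>(
  db: BatchRunner<S>,
  statements: S[],
  size = 100, // assumed chunk size, not a documented limit
): Promise<unknown[]> {
  const results: unknown[] = [];
  for (const part of chunk(statements, size)) {
    results.push(...(await db.batch(part)));
  }
  return results;
}
```

In the handler above, this would replace `await db.batch(transcriptQueriesToRun)` with `await batchInChunks(db, transcriptQueriesToRun)`.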