Can I use a beta LLM in prod? I need the latest model (llama 3-8b), but it's not out of beta yet.

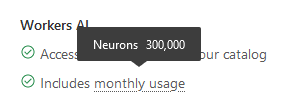

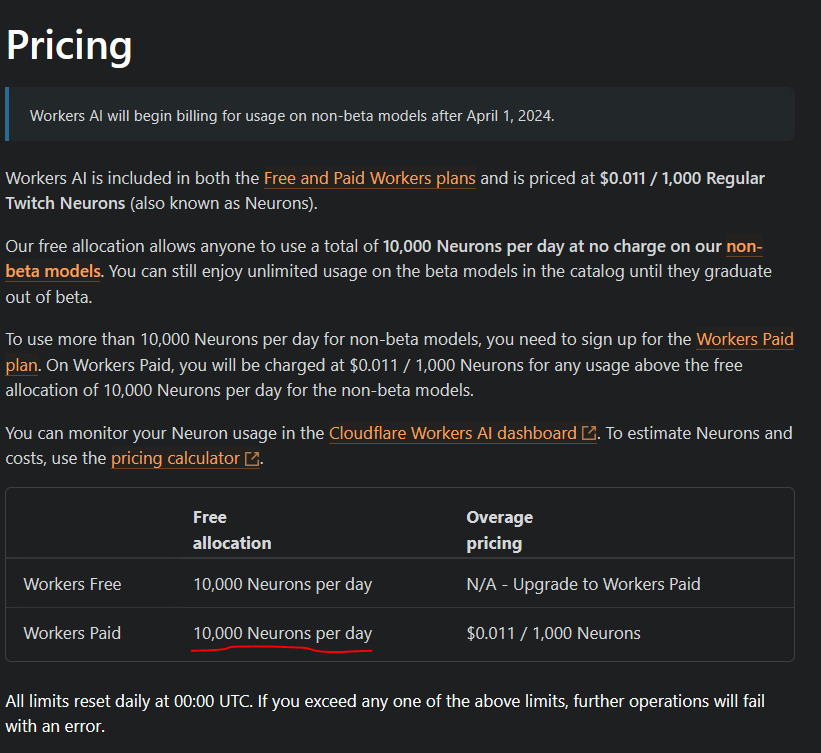

Do you know the rate limit on free beta models?

Beta models may have lower rate limits while we work on performance and scale: https://developers.cloudflare.com/workers-ai/platform/limits/
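Because beta models may be rate-limited more tightly, a client calling them in production may want to retry when it hits the limit. A minimal sketch, assuming the API signals a rate limit with HTTP status 429 (check the limits page above for the actual behavior); `withBackoff` and its options are hypothetical names, not part of any Cloudflare SDK:

```javascript
// Hypothetical helper: re-runs doRequest while it returns HTTP 429
// (assumed here to mean "rate limited"), doubling the wait each time.
async function withBackoff(
  doRequest,
  {
    retries = 3,
    baseDelayMs = 500,
    sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms)),
  } = {},
) {
  for (let attempt = 0; ; attempt++) {
    const res = await doRequest();
    // Hand back any non-429 response, or the last 429 once retries are used up.
    if (res.status !== 429 || attempt >= retries) return res;
    // Wait baseDelayMs, then 2 * baseDelayMs, then 4 * baseDelayMs, ...
    await sleep(baseDelayMs * 2 ** attempt);
  }
}
```

For example, `withBackoff(() => fetch(url, init))` would wrap a single Workers AI request, where `url` and `init` stand in for the endpoint and headers you already use. The `sleep` option only exists so the delay can be swapped out, e.g. in tests.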

Uploaded `adapter_model.safetensors` to a created finetune and got an error; see thread.

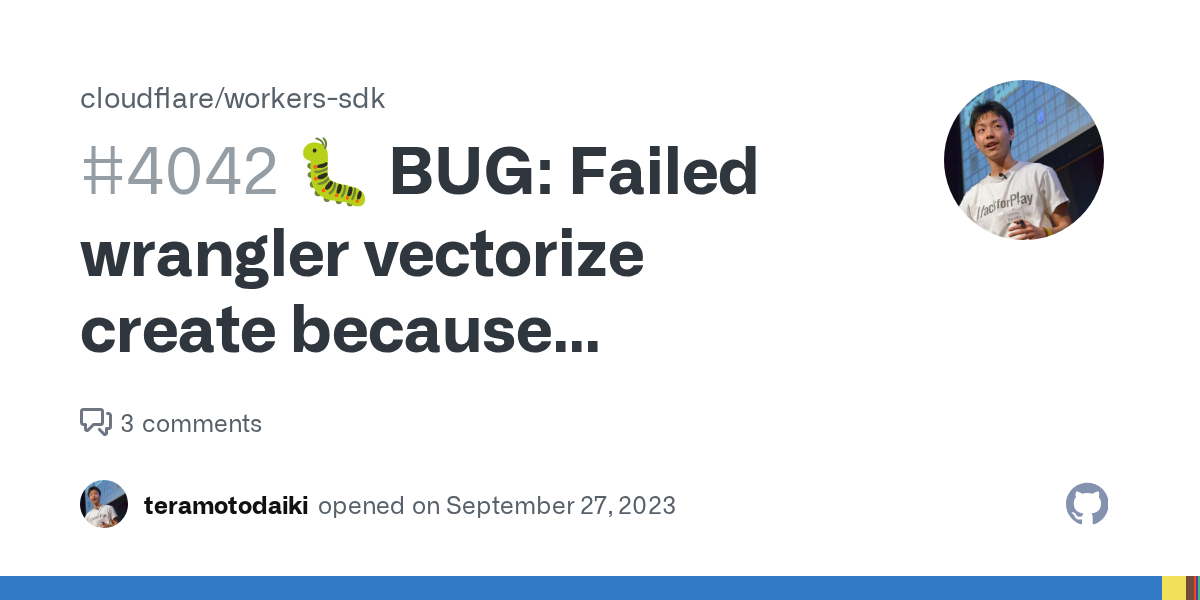

I'm using `llama-3-8b-instruct` with the REST API. It works somewhat, but sometimes it feels like it's trying to chat, as if it remembers context from previous HTTP calls. Has anyone run into similar issues? I've been refining the prompts using the system, user and assistant roles.

```js
import got from 'got';

// Env, model, companyName, question and context are defined elsewhere in the app.
const systemContent = `You are a knowledgeable employee familiar with the company ${companyName}, responding to customer inquiries. Follow these guidelines:
- Answer in the same language as the question.
- Do not reveal your identity.
- If you don't know the answer, admit it without making anything up.
- Maintain a neutral tone.
- Do not provide opinions or personal views.
- Avoid asking for feedback.
- Keep the conversation strictly to the point; do not engage in small talk or recommendations.
- Do not apologize.
- Do not initiate or continue small talk.
- Do not use phrases like "I'm sorry" or "I apologize."`;

// Call the Workers AI REST endpoint for the chosen model.
await got.post(`https://api.cloudflare.com/client/v4/accounts/${Env.CLOUDFLARE_ACCOUNT_ID}/ai/run/${model}`, {
  headers: { Authorization: `Bearer ${Env.CLOUDFLARE_WORKERS_AI_KEY}` },
  json: {
    max_tokens: 350,
    messages: [
      { role: 'system', content: systemContent },
      { role: 'user', content: `Question:${question}` },
      // Retrieved context, sent as an assistant turn after the question.
      { role: 'assistant', content: context }
    ],
    temperature: 0.5
  }
});
```

```
$ bunx wrangler vectorize create --dimensions=1536 ers-v1 --metric=cosine
🚧 Creating index: 'ers-v1'

✘ [ERROR] A request to the Cloudflare API (/accounts/53e0c2270158721ff328e572f56950ea/vectorize/indexes) failed.

vectorize.not_entitled [code: 1005]

If you think this is a bug, please open an issue at:
https://github.com/cloudflare/workers-sdk/issues/new/choose
```
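On the `llama-3-8b-instruct` question: as far as the REST API goes, each request carries only its own `messages` array, so the chatty behavior more likely comes from how that array is laid out than from memory of earlier calls. In the `got.post` request, `context` is sent as an `assistant` message after the user's question, and chat models treat assistant turns as their own earlier replies. A minimal sketch of an alternative layout, assuming the context can go into the system message instead; `buildMessages` is a hypothetical helper, and whether it reduces the chattiness is untested here:

```javascript
// Hypothetical helper: builds a fresh messages array for every request.
// Retrieved context is appended to the system prompt instead of being
// sent as a trailing assistant turn, so the model should not read it
// as something it already said.
function buildMessages({ systemPrompt, context, question }) {
  return [
    { role: "system", content: `${systemPrompt}\n\nContext:\n${context}` },
    { role: "user", content: `Question: ${question}` },
  ];
}
```

It would replace the `messages` literal in the request body, e.g. `json: { max_tokens: 350, messages: buildMessages({ systemPrompt: systemContent, context, question }), temperature: 0.5 }`.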