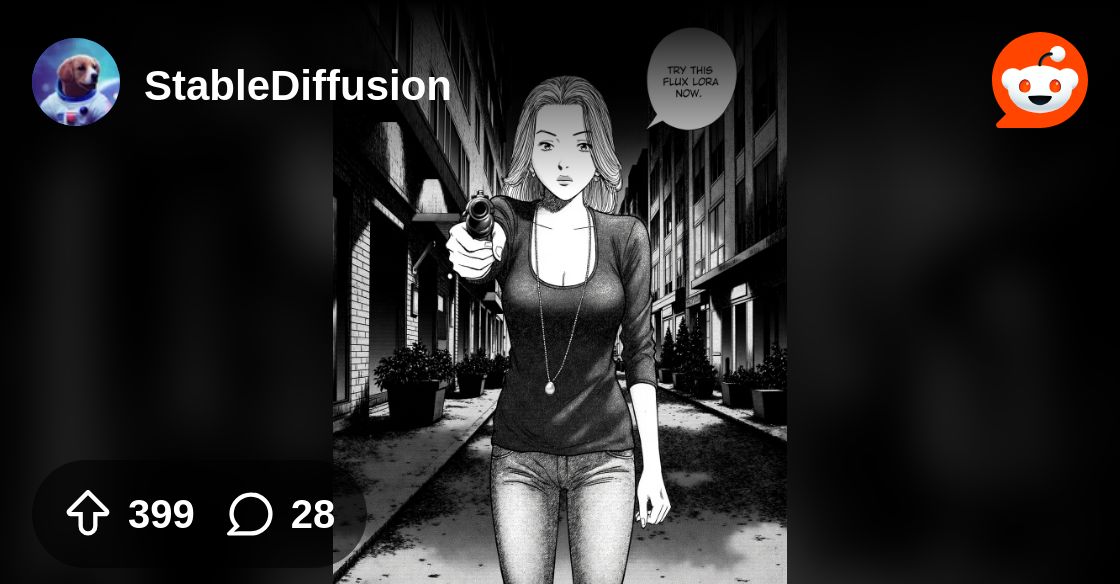

This style LoRA is really good: extract - I'm gonna answer here in case other people want more details as well.

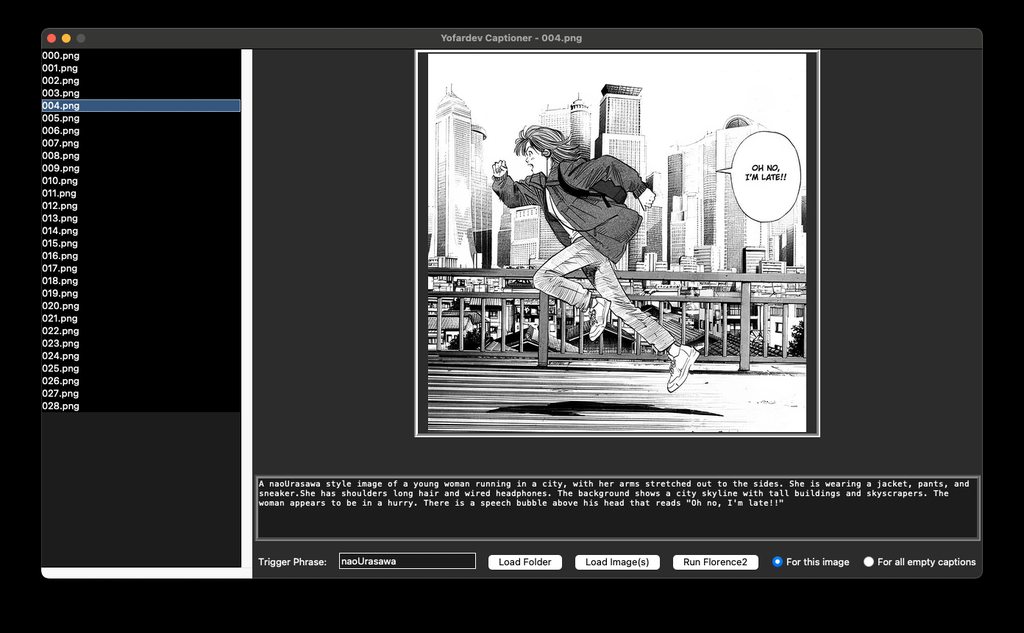

For the captioning, here are some examples: https://imgur.com/a/hxAm3fo (I used Florence2 for the base captions, then rewrote them).

I used 29 images (all with different aspect ratios; I didn't crop anything). I trained with the Civitai LoRA training tool and barely changed anything from the default settings:

"engine": "kohya",

"unetLR": 0.0005,

"clipSkip": 1,

"loraType": "lora",

"keepTokens": 0,

"networkDim": 2,

"numRepeats": 15,

"resolution": 512,

"lrScheduler": "cosine_with_restarts",

"minSnrGamma": 5,

"noiseOffset": 0.1,

"targetSteps": 1088,

"enableBucket": true,

"networkAlpha": 16,

"optimizerType": "AdamW8Bit",

"textEncoderLR": 0,

"maxTrainEpochs": 10,

"shuffleCaption": false,

"trainBatchSize": 4,

"flipAugmentation": false,

"lrSchedulerNumCycles": 3
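
If it helps anyone adapt these numbers to a different dataset size, here is a rough sketch of how the step count and the LoRA scale fall out of the settings above. The step formula is my assumption about how the tool arrives at `targetSteps` (it happens to reproduce 1088 for this run); with bucketing enabled the real count per epoch can differ slightly. The function names are just for illustration, and the `alpha / dim` scale is the usual kohya-style LoRA convention.

```python
import math

# Values copied from the post: 29 images plus the listed settings.
num_images = 29
settings = {
    "numRepeats": 15,
    "maxTrainEpochs": 10,
    "trainBatchSize": 4,
    "networkDim": 2,
    "networkAlpha": 16,
    "targetSteps": 1088,
}


def estimate_total_steps(images, repeats, epochs, batch_size):
    """Assumed formula: all samples seen over training, divided by batch size, rounded up."""
    return math.ceil(images * repeats * epochs / batch_size)


def lora_scale(alpha, dim):
    """Multiplier applied to the LoRA update (alpha divided by rank)."""
    return alpha / dim


steps = estimate_total_steps(
    num_images,
    settings["numRepeats"],
    settings["maxTrainEpochs"],
    settings["trainBatchSize"],
)
scale = lora_scale(settings["networkAlpha"], settings["networkDim"])

print(f"estimated steps: {steps} (reported targetSteps: {settings['targetSteps']})")
print(f"LoRA scale (alpha / dim): {scale}")
```

So with a very low rank (2) and a comparatively high alpha (16), the update is scaled up by 8, which is worth keeping in mind if you change `networkDim` without touching `networkAlpha`.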