I don't think

@cf/meta/llama-3.1-8b-instruct-fp8 has 32k token limit

I tried it as well, and it stopped at around 3000 characters

Try feeding an assistant response starting with "{" in the message history and it'll try. I have a few tricks that yield 99% success or so if you want to DM me
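For anyone who wants to try the "{" trick, here is a rough sketch of what it could look like. The helper names `withJsonPrefill` and `parsePrefilled` are made up for illustration, and whether a given model treats a trailing assistant message as a prefix to continue is an assumption you would need to test yourself.

```typescript
// Minimal chat message shape; role names follow the common chat format.
type Role = "system" | "user" | "assistant";
interface ChatMessage {
  role: Role;
  content: string;
}

// Hypothetical helper: ends the history with an assistant turn that already
// starts with "{", nudging the model to continue a JSON object.
function withJsonPrefill(history: ChatMessage[], prefill = "{"): ChatMessage[] {
  return [...history, { role: "assistant", content: prefill }];
}

// Hypothetical helper: if the model returns only the continuation, glue the
// prefill back on before parsing. Returns null when the text is not valid JSON.
function parsePrefilled(completion: string, prefill = "{"): unknown {
  const trimmed = completion.trimStart();
  const text = trimmed.startsWith(prefill) ? trimmed : prefill + trimmed;
  try {
    return JSON.parse(text);
  } catch {
    return null;
  }
}
```

The messages array from `withJsonPrefill` would be passed as the `messages` input to the model call, and the raw text that comes back goes through `parsePrefilled`.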

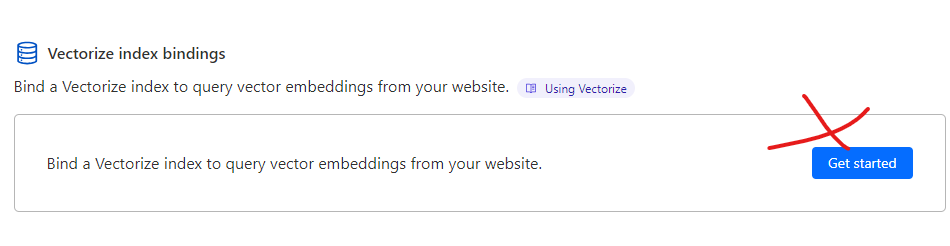

How do I bind a Vectorize index to a Pages project? The button shows "Get started" and redirects to the /vectorize page even though I already have an index.

I used the API directly to update bindings, the dashboard is broken I think

I am getting a dimensions error when indexing. Any suggestions?

bge-large is a 1024-dimension model, so the vectorize index needs to be 1024 dimension as well. Looks like it's 768. bge-base is a 768-dimension model
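A small pre-flight check can catch this kind of mismatch before inserting anything. This is only a sketch: the full model IDs in the table are my assumption about which bge variants are meant, and `checkDimensions` is a made-up helper, not part of any Cloudflare API.

```typescript
// Output sizes as stated above: bge-base is 768, bge-large is 1024.
// The full model IDs are assumed; adjust them to whatever you actually call.
const EMBEDDING_DIMENSIONS: Record<string, number> = {
  "@cf/baai/bge-base-en-v1.5": 768,
  "@cf/baai/bge-large-en-v1.5": 1024,
};

// Hypothetical helper: returns an error message when the model's output size
// does not match the index, or null when they agree.
function checkDimensions(model: string, indexDimensions: number): string | null {
  const produced = EMBEDDING_DIMENSIONS[model];
  if (produced === undefined) {
    return `unknown model: ${model}`;
  }
  if (produced !== indexDimensions) {
    return `${model} produces ${produced}-dimension vectors but the index expects ${indexDimensions}`;
  }
  return null;
}
```

For the case in this thread, `checkDimensions("@cf/baai/bge-large-en-v1.5", 768)` returns a mismatch message, while the base model against the same index returns null.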

Ok thanks, I will use the base model

Also, is there any way to alter the index to change it to 1024?

I'm getting really horrible results with all of the text to image AI models (I've tried all of the ones CF has). I'm using the prompts below and getting garbage responses like those in this image.

Does anyone have suggestions on how to actually get what I want?

SD is bad at text. Should be better when CF supports FLUX, I think

Text Embeddings are also useless to me, only en, no multi-language model

Other models are also outdated, LoRA is stuck at llama-2. CF AI seems to be useless to me atm.

This ^ plus SD uses CLIP for prompting, which doesn't like natural language very much... comma-separated tags work better, for example:

Bad:

create me an image that is 512x512, with text that says "my text here" and a red background, with yellow text

Good:

red background, text saying "my text here", arial font, yellow color

yeah, that too.
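If you are building prompts in code, one way to stay in the tag style is to keep the pieces in an array and join them. Just a sketch; `tagsToPrompt` is a made-up helper name.

```typescript
// Hypothetical helper: joins short descriptive tags into one comma-separated
// prompt, dropping empty entries and stray whitespace.
function tagsToPrompt(tags: string[]): string {
  return tags
    .map((tag) => tag.trim())
    .filter((tag) => tag.length > 0)
    .join(", ");
}

const prompt = tagsToPrompt([
  "red background",
  'text saying "my text here"',
  "arial font",
  "yellow color",
]);
```

Here `prompt` ends up as the same "Good" string shown above.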

Cloudflare Docs

Diffusion-based text-to-image generative model by Stability AI. Generates and modify images based on text prompts.

it says the response is a binary string but it's an object

or is it a blob?

response, not input

it claims it's a binary string but the type is an object with zero keys

sending a request to the api directly returns an image correctly

but using env.AI does not

ah

Then how come doing new Blob([res]) returns a blob with raw content of [object Object]?

logging JSON.stringify() on the response returns {}

this logs [object Object]

well, when I log JSON.stringify(res) it prints "{}"

so...

well i'd figure it would throw or return nil, or at the bare minimum return "[]"

? wdym

if i do it logs {"0":0}, but when i log the response from ai.run it's just {}

also, doing console.log(res instanceof Uint8Array) logs "false", so :UShrug:

im fairly certain it's an object
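A quick way to see what the value really is: JSON.stringify only serializes own enumerable properties, so any class instance that keeps its state internally prints as {}. Looking at the constructor name is more telling. A sketch, with `describeValue` as a made-up helper:

```typescript
// Hypothetical debugging helper: reports the constructor name of a value
// instead of relying on JSON.stringify, which hides non-enumerable state.
function describeValue(value: unknown): string {
  if (value === null) return "null";
  if (typeof value !== "object") return typeof value;
  const proto = Object.getPrototypeOf(value);
  const name = proto?.constructor?.name;
  return typeof name === "string" && name.length > 0 ? name : "Object";
}

// A typed array has indexed, enumerable entries, so it serializes with keys.
const asJson = JSON.stringify(new Uint8Array(1)); // '{"0":0}'
const asName = describeValue(new Uint8Array(1)); // "Uint8Array"
```

Logging `describeValue(res)` on the ai.run result should show whether it is a plain object, a stream, or something else.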

with the run method const resp = await this.ai.run(model, input); it is returning a ReadableStream<Uint8Array> rather than a Uint8Array like the types are saying. I'm having to coerce it into the correct type before being able to manipulate it

here is some working code:

fromUint8Array(buffer) is from js-base64

so you can use that to convert your response to an actual Uint8Array. hopefully they fix the types, or the implementation to return a Uint8Array soon. it's gonna break my code when they do though...

editing it to make it resilient against the inevitable bug fix
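A sketch of what a version that survives the fix could look like: accept either shape and always hand back a Uint8Array. The interfaces and the `toBytes` name are made up for illustration (structural stand-ins so it does not depend on the Workers typings), and this is not the code from the earlier message.

```typescript
// Structural stand-ins for a byte stream, so the sketch needs no DOM typings.
interface ByteReader {
  read(): Promise<{ done: boolean; value?: Uint8Array }>;
}
interface ByteStreamLike {
  getReader(): ByteReader;
}

function isStreamLike(value: unknown): value is ByteStreamLike {
  return (
    typeof value === "object" &&
    value !== null &&
    typeof (value as { getReader?: unknown }).getReader === "function"
  );
}

// Accepts a Uint8Array (what the types promise) or a stream of Uint8Array
// chunks (what the thread reports actually coming back).
async function toBytes(result: Uint8Array | ByteStreamLike): Promise<Uint8Array> {
  if (result instanceof Uint8Array) return result;
  if (!isStreamLike(result)) {
    throw new TypeError("expected a Uint8Array or a readable stream");
  }
  const reader = result.getReader();
  const chunks: Uint8Array[] = [];
  let total = 0;
  for (;;) {
    const { done, value } = await reader.read();
    if (done) break;
    if (value) {
      chunks.push(value);
      total += value.length;
    }
  }
  // Concatenate all chunks into one contiguous buffer.
  const out = new Uint8Array(total);
  let offset = 0;
  for (const chunk of chunks) {
    out.set(chunk, offset);
    offset += chunk.length;
  }
  return out;
}
```

The result of ai.run would go through `toBytes` first, and the returned bytes can then be passed to fromUint8Array from js-base64 as described above.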

thank you much

should this be a bug report?

Slow af

Cool! An alternative way to read the stream to completion is const buffer = await new Response(resp).arrayBuffer();.

Thanks for the replies @katopz and Isaac. I was so hopeful about "Good: red background, text saying "my text here", arial font, yellow color".

Yeaaah Stable Diffusion isn't great at text sadly :(

Does Cloudflare Workers AI support custom models? Or just using the Hugging Face API?

Custom models are not currently supported. Only models that are added by the Cloudflare team (so not any HuggingFace model, just the ones that are on the list)

Are all listed models included in the paid plan? I mean, if I pay, will all listed models be deducted from my usage?