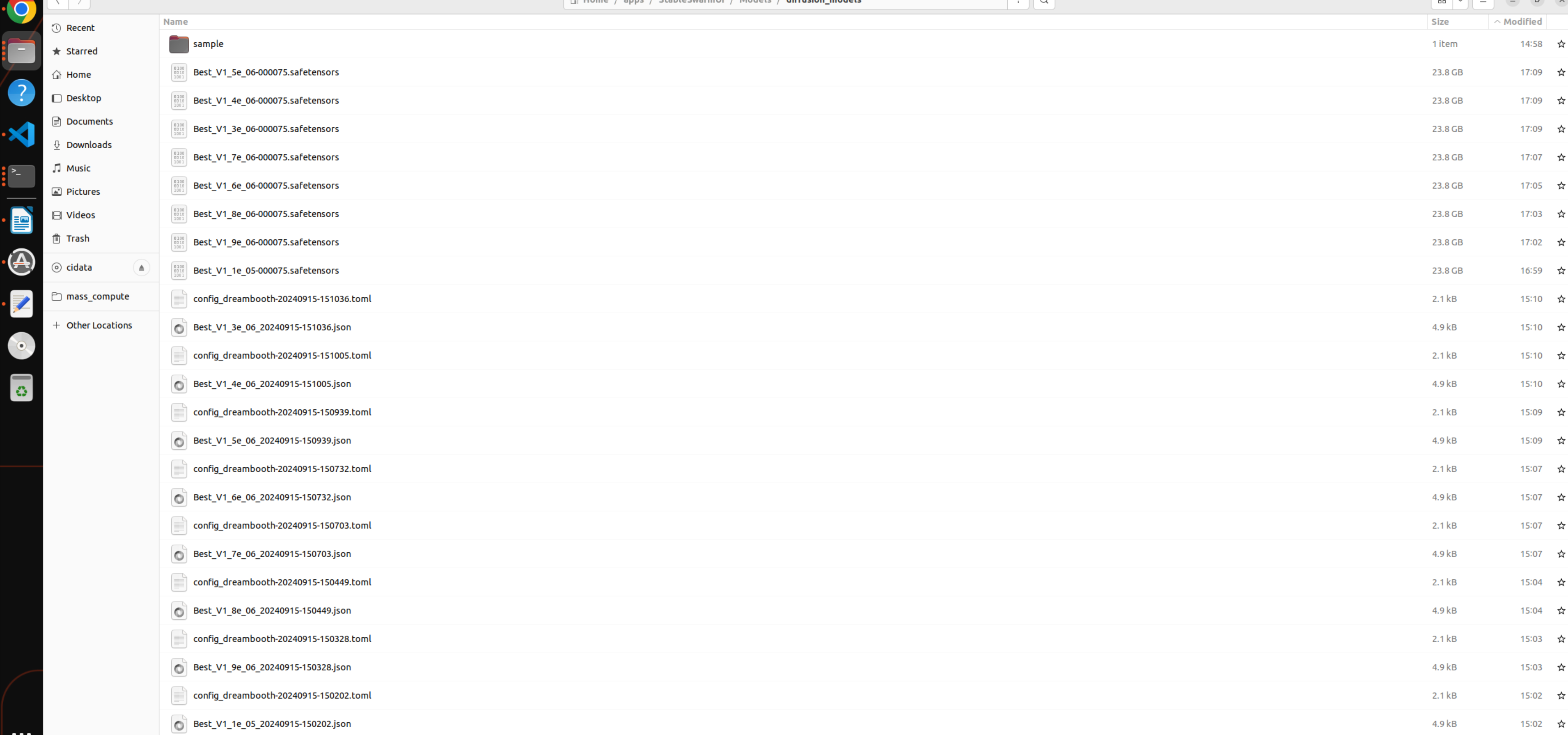


What kohya said:

If you specify `fp8_base` for LoRA training, the FLUX model will be cast from bf16 to fp8, so VRAM usage will be the same even with the full (bf16) base model.
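The following back-of-the-envelope sketch shows why the checkpoint dtype stops mattering once the weights are cast. It assumes a FLUX.1 transformer of about 12 billion parameters, 2 bytes per bf16 weight and 1 byte per fp8 weight. It counts only the base model weights that stay resident after the cast. Activations, LoRA parameters, optimizer state, text encoders, the VAE, and any temporary peak during the cast itself are ignored. `resident_weight_gib` is a hypothetical helper for illustration, not part of sd-scripts.

```python
# Standard storage size per parameter for the dtypes involved.
BYTES_PER_PARAM = {"bf16": 2, "fp8": 1}

# Assumption: the FLUX.1 transformer has roughly 12 billion parameters.
FLUX_PARAMS = 12_000_000_000


def resident_weight_gib(checkpoint_dtype: str, fp8_base: bool,
                        n_params: int = FLUX_PARAMS) -> float:
    """Approximate memory (GiB) held by the base model weights after loading.

    Hypothetical helper. With fp8_base the weights are cast to fp8 whatever
    the checkpoint dtype was, so only the fp8 size stays resident. Without
    it, this sketch simply assumes the weights keep the checkpoint dtype.
    """
    dtype = "fp8" if fp8_base else checkpoint_dtype
    return n_params * BYTES_PER_PARAM[dtype] / 1024**3


if __name__ == "__main__":
    for ckpt in ("bf16", "fp8"):
        print(ckpt, "checkpoint, fp8_base:", round(resident_weight_gib(ckpt, True), 1), "GiB")
    print("bf16 checkpoint, no fp8_base:", round(resident_weight_gib("bf16", False), 1), "GiB")
```

Under these assumptions, both the bf16 and the fp8 checkpoint come out to about 11.2 GiB of weights with `fp8_base`, while a bf16 checkpoint loaded without it would hold about 22.4 GiB.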
