Welcome to the official Cloudflare Developers server. Here you can ask for help and stay updated with the latest news.

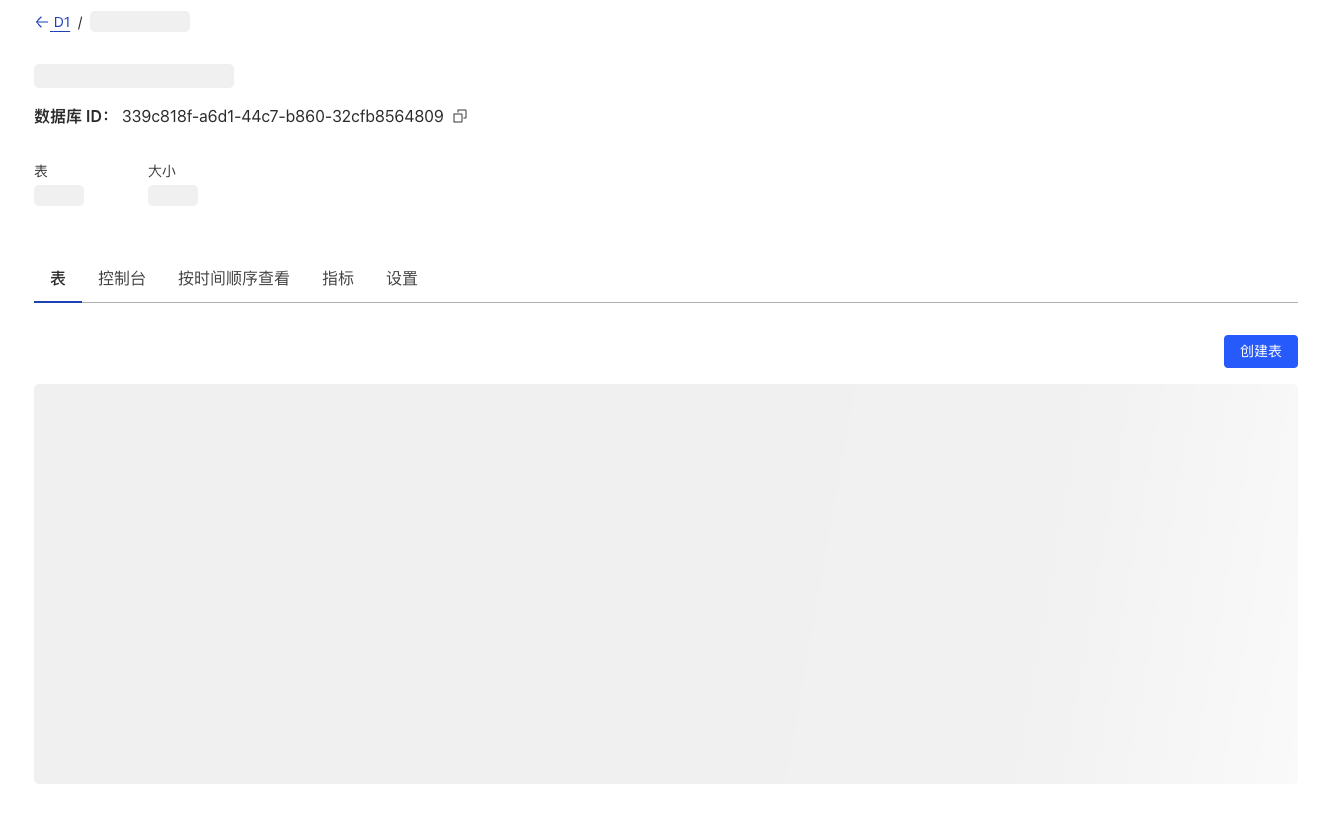

Quick question: Is there an easy way to find the most "expensive" queries in my code (the ones with the most rows read/written), e.g. a list in the Cloudflare dashboard, without manually checking the metadata of every single query?

`wrangler.toml`, `locals.runtime.env.DB`
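If you'd rather collect this from your own code than check each query by hand, one option is to wrap your D1 calls and add up the `rows_read` / `rows_written` values that D1 returns in each result's `meta`. The sketch below is a minimal version of that idea. `QueryCostTracker` and `tracked` are hypothetical helpers, not part of any Cloudflare API. `QueryMeta` models only the two metadata fields used here (the real result metadata has more). Totals are keyed by the exact SQL string.

```typescript
// Minimal shape of the metadata D1 attaches to a query result.
// Assumption: only rows_read / rows_written are modeled here; the real
// result meta object carries additional fields.
interface QueryMeta {
  rows_read: number;
  rows_written: number;
}

// Running totals for one SQL statement (keyed by its exact text).
interface QueryStats {
  sql: string;
  calls: number;
  rowsRead: number;
  rowsWritten: number;
}

// Hypothetical helper: accumulates per-statement totals in memory.
class QueryCostTracker {
  private stats = new Map<string, QueryStats>();

  record(sql: string, meta: QueryMeta): void {
    const s = this.stats.get(sql) ?? { sql, calls: 0, rowsRead: 0, rowsWritten: 0 };
    s.calls += 1;
    s.rowsRead += meta.rows_read;
    s.rowsWritten += meta.rows_written;
    this.stats.set(sql, s);
  }

  // Returns the n most expensive statements, sorted descending by total reads or writes.
  top(n: number, by: "rowsRead" | "rowsWritten" = "rowsRead"): QueryStats[] {
    return Array.from(this.stats.values())
      .sort((a, b) => b[by] - a[by])
      .slice(0, n);
  }
}

// Hypothetical wrapper: runs any call that resolves to an object with `meta`
// (e.g. a D1 `.all()` / `.run()` call), records its cost, and passes the result through.
async function tracked<T extends { meta: QueryMeta }>(
  tracker: QueryCostTracker,
  sql: string,
  run: () => Promise<T>,
): Promise<T> {
  const result = await run();
  tracker.record(sql, result.meta);
  return result;
}
```

In an Astro route using the `locals.runtime.env.DB` binding, a call might look like `await tracked(tracker, sql, () => locals.runtime.env.DB.prepare(sql).bind(id).all())`, followed by logging `tracker.top(10)` at some point. Note that a Worker's in-memory state is per isolate and is not kept indefinitely. In practice you would probably log the per-query numbers and aggregate them elsewhere rather than rely on the `Map` surviving between requests.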