that is what i am testing now :d

done 16 trainings so far, training 8 more

You can try 256 if you don't see good results

yes, it can be improved, nice idea to test

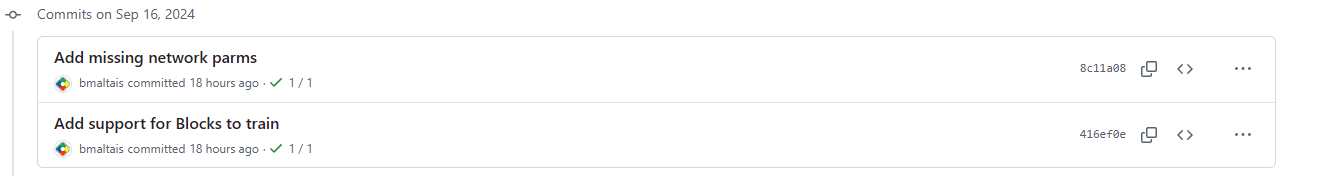

looks like individual block training might have been implemented in the sd3 flux1 kohya ss branch last night

So fine tuning is better than LoRA overall, you think @Dr. Furkan Gözükara?

i am testing already

You have a video on how to fine tune training?

Can someone confirm Flux LoRA Extraction is completely broken on Kohya?

I did a finetune of flux dev, results are amazing. I used the Extract Flux LoRA tab to extract the LoRA from the finetuned model. When I use the extracted LoRA, it makes no difference compared with not using a LoRA at all. @Dr. Furkan Gözükara Did you try the Extract Flux LoRA feature?

I’m confused on what fine tuning is, do we basically do the same training as in this video https://youtu.be/nySGu12Y05k?feature=shared but with the configuration from the new update on your patreon?

YouTube: SECourses

Ultimate Kohya GUI FLUX LoRA training tutorial. This tutorial is product of non-stop 9 days research and training. I have trained over 73 FLUX LoRA models and analyzed all to prepare this tutorial video. The research still going on and hopefully the results will be significantly improved and latest configs and findings will be shared. Please wat...

I'd also like to know. Though I was waiting on Kohya to refine it a bit more before delving into fine tunes.

Same here. Do you mean extracting a lora from a finetuned checkpoint?

Yeah

For me the extracted lora looks nothing like my subject, nothing like the checkpoint

I guess it is broken

@smoke yeah, thx for the confirmation

Should probably raise an issue in sd-scripts Github since it is broken

GitHub

2 Issues sometimes it works, but it looks nothing like the fine tuned model. Doesnt work and i get the error below During handling of the above exception, another exception occurred: Traceback (mos...

Ah yeah it definitely is broken

But i'm not sure it's the same issue

Because they say it works at 1.5 strength

But for me at 2.0 there was no difference

Well for me it is, the extracted lora does not look like my finetuned checkpoint. At 1.5 strength it starts to look more like it but it created a lot of other issues and graininess, so definitely not the best approach

At least for me

Comfy ?

I tried in Comfy yeah but I believe the same issue happened in forge

has anyone gotten a speed increase from training only specific layers yet? i'm getting the same speed training only 2 layers as i do when i train all

what are layers?

yes true

exactly same

just use new config

checkpoints will be 23.8 gb

load config into dreambooth tab

i haven't, could be broken

if broken should be reported

i will try extract now

ok i think i found the best single layer

time to deepen research

it sucks that fused backward pass doesn't work with multi gpu

48 gb is not sufficient to do multi gpu training with flux fine tuning

I'm not ashamed that I listen to this...

@master_kawaii can you private message me

Oh am I going to jail? @Dr. Furkan Gözükara haha

no something else

oh ok xD

There ! Master Furkan