Hi there!

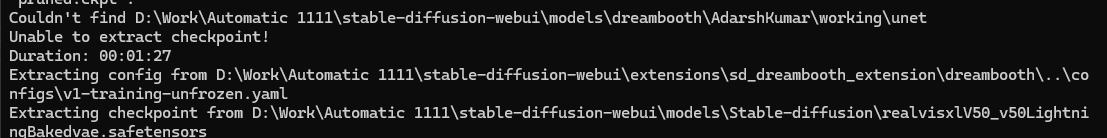

I'm trying to do a full DreamBooth fine-tune of FLUX on RunPod.

Has anybody run into the same problem?

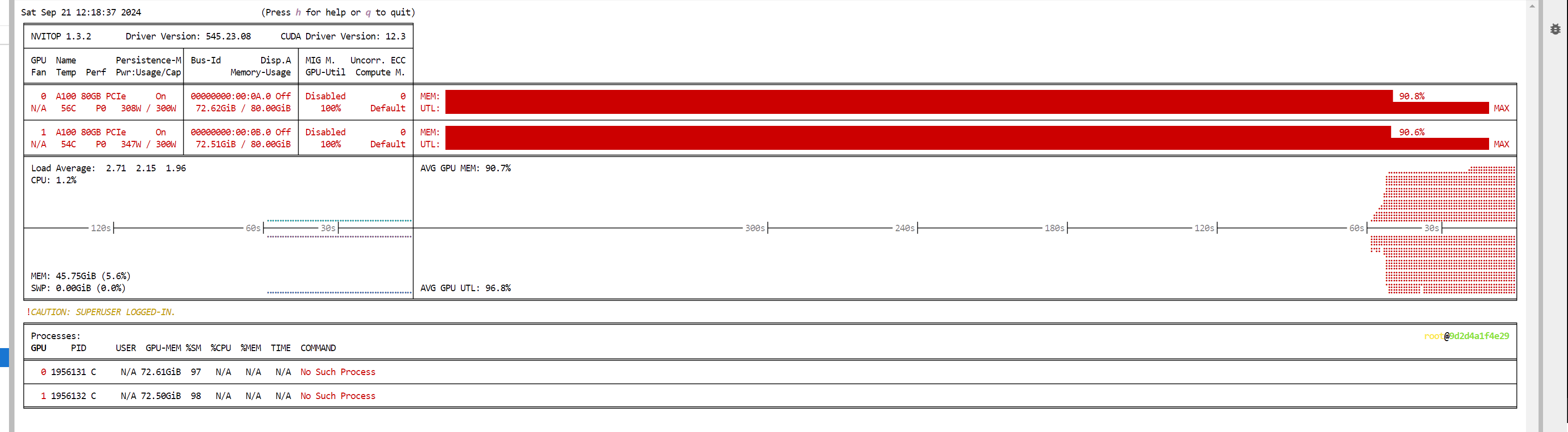

`[rank1]: ValueError: DistributedDataParallel device_ids and output_device arguments only work with single-device/multiple-device GPU modules or CPU modules, but got device_ids [1], output_device 1, and module parameters {device(type='cpu')}.`

I get this error when training on multiple GPUs (2× A100 PCIe). If I uncheck the multi-GPU checkbox and set Number of processes to 1, training works fine.

I'm using `Quality_1_21820MB_16_12_Second_IT.json` from `Best_Configs_v3`.
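For anyone digging into this: as far as I can tell, DistributedDataParallel raises this error when `device_ids` (or `output_device`) is passed but the wrapped module's parameters are still on the CPU or are spread across several devices. The error above shows exactly that case: `device_ids [1]` with parameters on `device(type='cpu')`. Below is a simplified pure-Python model of that check, not PyTorch's actual code. The function name `ddp_device_check` and its plain-string device arguments are hypothetical and exist only for illustration.

```python
from typing import Iterable, Optional, Sequence


def ddp_device_check(
    param_devices: Iterable[str],
    device_ids: Optional[Sequence[int]] = None,
    output_device: Optional[int] = None,
) -> None:
    """Hypothetical, simplified model of DDP's constructor device check.

    param_devices: device strings of the module's parameters,
    e.g. "cpu" or "cuda:1".
    """
    devices = set(param_devices)
    device_types = {d.split(":")[0] for d in devices}
    is_cpu_module = "cpu" in device_types   # parameters left on the CPU
    is_multi_device = len(devices) > 1      # parameters split across devices

    # device_ids / output_device are only accepted when the whole module
    # sits on a single GPU; otherwise passing them is an error.
    single_gpu_module = bool(device_ids) and not is_cpu_module and not is_multi_device
    if not single_gpu_module and (device_ids or output_device is not None):
        raise ValueError(
            f"device_ids {list(device_ids or [])}, output_device {output_device}, "
            f"and module parameters {sorted(devices)}"
        )


# Module still on the CPU while rank 1 asks for cuda:1: this is the failing case.
try:
    ddp_device_check(["cpu"], device_ids=[1], output_device=1)
except ValueError as err:
    print("ValueError:", err)

# Module already moved to cuda:1 before wrapping: the check passes.
ddp_device_check(["cuda:1"], device_ids=[1], output_device=1)
```

In plain PyTorch the usual pattern is to move the model to the rank's GPU (e.g. `model.to(f"cuda:{rank}")`) before wrapping it with `DistributedDataParallel(model, device_ids=[rank])`. That suggests the trainer keeps the model on the CPU at wrap time in multi-GPU mode with this config, but I haven't confirmed which setting causes it.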