```js const response = await env.AI.run("@cf/meta/llama-3.1-8b-instruct", { prompt: "What is

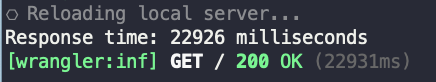

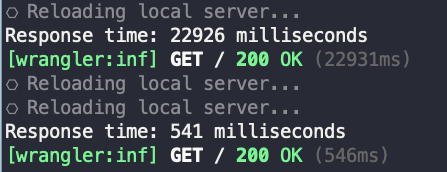

anyone know why the local example code takes 25 seconds to respond?

const response = await env.AI.run("@cf/meta/llama-3.1-8b-instruct", {

prompt: "What is the origin of the phrase Hello, World",

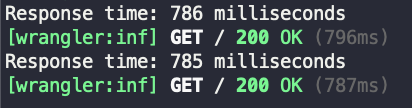

});llama-3.1-8b-instruct, and they seem all beta models. Maybe that's why? Try another model

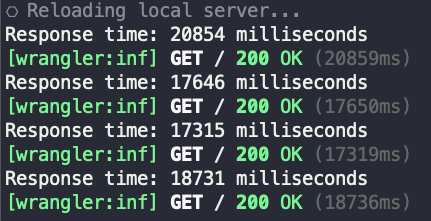

AiExternal: Couldn't fetch external AI provider response (500, Internal Server Error)

Response time: 716 milliseconds

[wrangler:inf] GET / 200 OK (739ms)

llama-3.1-8b-instruct

AiExternal: Couldn't fetch external AI provider response (500, Internal Server Error)

const response = await env.AI.run("@hf/meta-llama/meta-llama-3-8b-instruct", {
  prompt: "What is the origin of the phrase Hello, World",
});
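
For anyone following the "try another model" suggestion, here is a rough sketch of how the two models from this thread could be tried in order while timing each attempt, so a slow or failing model (like the 500 above) is easy to spot. `runWithFallback` is a made-up helper name, not part of Workers AI. It only assumes the binding exposes `run(model, inputs)` the way `env.AI.run` is used in the snippets above.

```js
// Hypothetical helper (not part of the Workers AI API): try each model in
// order, timing every attempt, and return the first successful result.
async function runWithFallback(ai, models, inputs) {
  const failures = [];
  for (const model of models) {
    const started = Date.now();
    try {
      const result = await ai.run(model, inputs);
      return { model, result, elapsedMs: Date.now() - started };
    } catch (err) {
      // Record the failure (for example the AiExternal 500) and move on.
      const message = err instanceof Error ? err.message : String(err);
      failures.push({ model, message, elapsedMs: Date.now() - started });
    }
  }
  const summary = failures.map((f) => `${f.model}: ${f.message}`).join("; ");
  throw new Error(`All models failed: ${summary}`);
}

// Inside a Worker's fetch handler this might be called as:
//   const { model, result, elapsedMs } = await runWithFallback(
//     env.AI,
//     ["@cf/meta/llama-3.1-8b-instruct", "@hf/meta-llama/meta-llama-3-8b-instruct"],
//     { prompt: "What is the origin of the phrase Hello, World" },
//   );
```

Logging `elapsedMs` per model should at least show whether the 25 seconds is spent in one particular model or in every call.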