That's OpenAI.

Yeah, so you're running the code multiple times per code change?

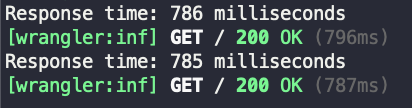

Because it only shows the response time once, that's why I'm wondering.

'Cause it's one request.

I'm not sure how to tell you this, but it's not that lol

Ah I see now

Well, I thought that maybe it has to load the model into memory the first time it runs on a worker, so that first request takes so long and the following requests are faster.

But yeah, that's not the case.

It's not loading anything into any memory.

How is it supposed to run the model, then?

At some point that has to be done

Even if it’s not right before you access it

You think they load an instance of an LLM into some memory somewhere for every worker that invokes it?

Only if it wasn’t in memory already, ya

“Some” memory being the RAM of the machine your worker code runs on

Or rather its VRAM

And then where is it executed?

On a vCPU in the worker?

Why a CPU? Cloudflare has GPUs in its machines.

Huh

Why the thumbs up?

Does Flux work for anyone?

I'm getting

`AiExternal: Couldn't fetch external AI provider response (500, Internal Server Error)`

using the code provided in the docs here: https://developers.cloudflare.com/workers-ai/models/flux-1-schnell

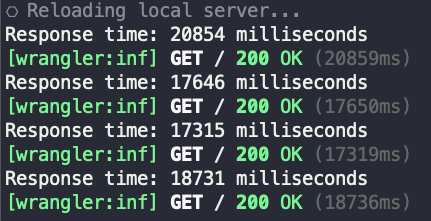
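If the 500 is a transient failure on the external provider's side (an assumption; the error text alone doesn't say), wrapping the call in a small retry with exponential backoff may help. `withRetry` below is a hypothetical helper, not part of the Workers AI API, and if the provider is actually down, retrying won't fix it.

```typescript
// Hypothetical helper: retry an async operation with exponential backoff.
// Assumption: the failure (e.g. the external provider's 500) is transient.
async function withRetry<T>(
  op: () => Promise<T>,
  attempts = 3,
  baseDelayMs = 500,
): Promise<T> {
  let lastError: unknown;
  for (let i = 0; i < attempts; i++) {
    try {
      return await op();
    } catch (err) {
      lastError = err;
      if (i < attempts - 1) {
        // Wait baseDelayMs, then 2x, 4x, ... between attempts.
        await new Promise((resolve) => setTimeout(resolve, baseDelayMs * 2 ** i));
      }
    }
  }
  // All attempts failed: surface the last error to the caller.
  throw lastError;
}

// Usage inside a Worker (not run here):
// const response = await withRetry(() =>
//   env.AI.run("@cf/black-forest-labs/flux-1-schnell", { prompt: "a cyberpunk lizard" }),
// );
```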

It takes a long time because you're not using streaming. You're basically waiting for the LLM to generate the whole text before returning a response.
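A minimal sketch of a streaming Worker, assuming a binding named `AI` and the `@cf/meta/llama-3.1-8b-instruct` model. Passing `stream: true` to `env.AI.run()` returns a `ReadableStream` of server-sent events that the Worker can hand straight to the client. The `AiBinding` interface is a hand-written stand-in for the real binding type from `@cloudflare/workers-types`, and the `{ prompt }` JSON request body is an assumption.

```typescript
// Hand-written stand-in for the Workers AI binding type (assumption; a real
// project would use the types from @cloudflare/workers-types).
interface AiBinding {
  run(model: string, inputs: Record<string, unknown>): Promise<unknown>;
}

interface Env {
  AI: AiBinding;
}

const worker = {
  async fetch(request: Request, env: Env): Promise<Response> {
    // Assumed request shape: { "prompt": "..." }
    const { prompt } = (await request.json()) as { prompt: string };

    // With stream: true the binding returns a stream of server-sent events
    // instead of waiting for the whole completion to finish.
    const stream = (await env.AI.run("@cf/meta/llama-3.1-8b-instruct", {
      prompt,
      stream: true,
    })) as ReadableStream<Uint8Array>;

    // Forward the stream as-is so the client sees tokens as they are generated.
    return new Response(stream, {
      headers: { "content-type": "text/event-stream" },
    });
  },
};

export default worker;
```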

It takes 20 seconds to write a sentence?

Then how is any other external API 30x faster?

That still doesn't make any sense.

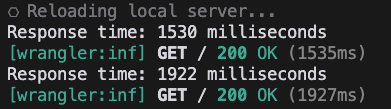

Try the playground (https://playground.ai.cloudflare.com/) with your prompt and you'll get a feel for the performance.

Yeah, it takes a second.

Pick Meta Llama.

Prompt: "What is the origin of the phrase Hello, World?"

Alright, you might be right.

it’s just super ass slow lol

But streaming won't make the response faster; it'll just arrive in pieces.
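Correct as far as total time goes: streaming changes when the first tokens arrive, not how long the full generation takes. A hypothetical helper like `timeStream` makes the difference visible by recording time to first chunk and total time while draining a stream (e.g. one returned by `env.AI.run(..., { stream: true })`). The helper name and its return shape are illustrative assumptions.

```typescript
// Hypothetical helper: drain a byte stream and report when the first chunk
// arrived and when the stream finished, both relative to the start of reading.
async function timeStream(
  stream: ReadableStream<Uint8Array>,
): Promise<{ firstChunkMs: number; totalMs: number; bytes: number }> {
  const start = Date.now();
  const reader = stream.getReader();
  let firstChunkMs = -1;
  let bytes = 0;
  for (;;) {
    const result = await reader.read();
    if (result.done) break;
    // Record the latency of the very first chunk only.
    if (firstChunkMs < 0) firstChunkMs = Date.now() - start;
    bytes += result.value.byteLength;
  }
  return { firstChunkMs, totalMs: Date.now() - start, bytes };
}
```

With a non-streaming call, the client effectively sees only `totalMs`; with streaming it starts seeing output at `firstChunkMs`, while `totalMs` stays roughly the same.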

I'm using Llama via api.cloudflare with Bearer Auth. Where can I check my usage? If I exceed 10,000 free neurons, am I charged automatically or does it stop working?

It stops working

And their pricing is no longer in neurons

Workers AI is included in both the Free and Paid Workers plans and is priced based on model task, model size, and units.

neurons may have been the worst idea ever conceptualized

better :)

Oh btw, in the Worker binding:

`await env.AI.run()`

Are there docs on this? I can't find them...

I'm curious if there's a seed param like the one OpenAI offers in its latest models

to get more deterministic results

It almost looks like sending a seed param worked somehow.

Check the model page(s) (https://developers.cloudflare.com/workers-ai/models/llama-3.1-8b-instruct-fast#Parameters). Beware that, for the most part, models within a category list the same set of parameters, whether or not each model actually supports them. E.g. @hf/nousresearch/hermes-2-pro-mistral-7b doesn't support temperature or seed, even though those parameters are listed on its model page. (I reported it in workers-ai a while ago but never got a response, so no idea if it's intended to be that way.) Also, expect some quirks: e.g. a subset of the models will break if you set max_tokens to 597 or higher (reported in workers-ai).
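A sketch of building the inputs for `env.AI.run()` with a `seed` for more repeatable output, assuming the `messages`, `seed`, `temperature` and `max_tokens` fields listed on the llama-3.1-8b-instruct-fast model page. `buildInputs` and `MAX_TOKENS_WORKAROUND` are hypothetical names; the clamp to 596 only mirrors the max_tokens quirk reported above, and some models may still silently ignore `seed` or `temperature`.

```typescript
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Input fields as listed on the text-generation model pages (assumption:
// not every model honours all of them).
interface TextGenInputs {
  messages: ChatMessage[];
  seed?: number;
  temperature?: number;
  max_tokens?: number;
}

// Hypothetical workaround for the reported breakage at max_tokens >= 597.
const MAX_TOKENS_WORKAROUND = 596;

function buildInputs(
  messages: ChatMessage[],
  opts: { seed?: number; temperature?: number; maxTokens?: number } = {},
): TextGenInputs {
  const inputs: TextGenInputs = { messages };
  // Only send optional params that were actually provided.
  if (opts.seed !== undefined) inputs.seed = opts.seed;
  if (opts.temperature !== undefined) inputs.temperature = opts.temperature;
  if (opts.maxTokens !== undefined) {
    inputs.max_tokens = Math.min(opts.maxTokens, MAX_TOKENS_WORKAROUND);
  }
  return inputs;
}

// Usage inside a Worker (not run here):
// const result = await env.AI.run(
//   "@cf/meta/llama-3.1-8b-instruct-fast",
//   buildInputs([{ role: "user", content: "Hi" }], { seed: 42, maxTokens: 1024 }),
// );
```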