@Furkan Gözükara SECourses it seems prior preservation in kohya is broken

Replace SD3Tokenizer with the original CLIP-L/G/T5 tokenizers.

Extend the max token length to 256 for T5XXL.

Refactor caching for latents.

Refactor caching for Text Encoder outputs

Extract arch...

bghira responded

i think he didn't see my reply because i added it. but good, if this gets fixed, nice

ah, and batch size 1!

kk

batch size 1 is best

anyway, for a low number of images

with and without

[removed]

[removed]

Hillary doesn't look like Hillary

that's the base model; I didn't train Clinton. The point is that Clinton stays as she was

on the left

she didn't, though. neither woman, in either image, looks like she does on the right

the trained person also looks a bit worse but that can probably be tweaked

yeah

they don't just look worse, they look like different people

what if you try famous comic book characters like Spider-Man or Deadpool?

and then?

see how they come out with and without your anti-bleed

the base model will have no problem differentiating a woman from Spider-Man. even a woman and a man is easy. only two women or two men have this bleeding effect

sure, and they're costumes, but the thought is - is this only affecting human faces or is it going to affect other things?

I think you might have misunderstood the samples. Here is what you probably meant: untrained vs. trained

the training of the left person obviously isn't good, but Clinton stays mostly unchanged

removed the samples above to avoid further misunderstanding

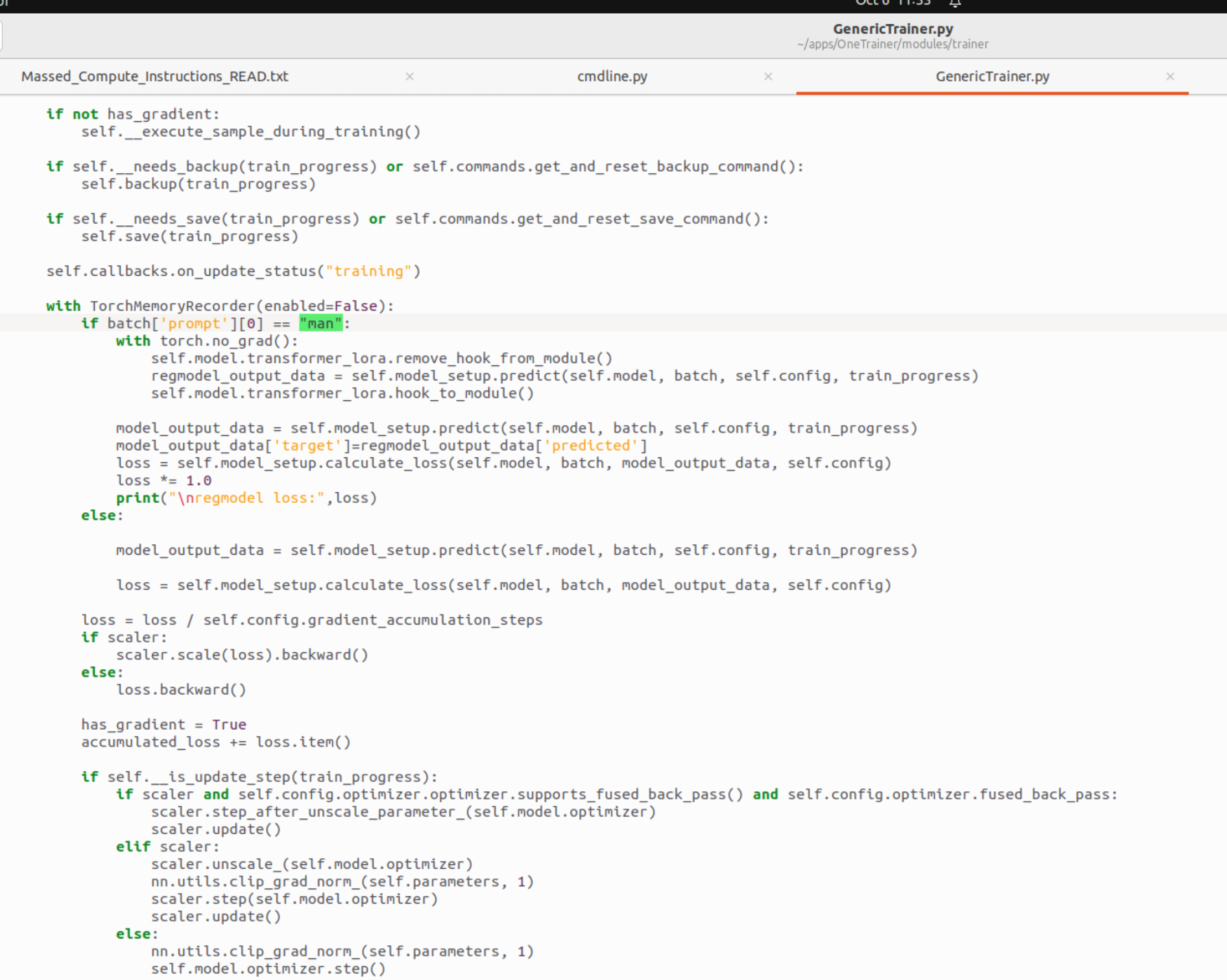

He is right, it is very similar. You could get exactly the same effect by pregenerating, and therefore make this possible for full fine-tuning with no additional VRAM, too. You'd have to synchronize timesteps and seeds(!) between the pregenerated data and the training, though, which is the difference from the current "Prior Preservation" feature. I also implemented this for full fine-tuning, but without pregeneration, so it needs a lot of VRAM for two full models, the student and the teacher model. Feel free to forward my contact.

On the second comment, though: it's not only activation. In this sample (DreamBooth training), Clinton would look like the left person without regularization.

the left person in the right sample, I mean
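A minimal sketch of the pregeneration approach described above, assuming a toy denoiser and a toy linear noise schedule. None of these names come from kohya or OneTrainer: `noise_and_timestep`, `pregenerate_targets`, `prior_loss` and `toy_base` are hypothetical. The frozen base model's predictions for the regularization images are computed once and cached together with the seed that produced their noise and timestep. The training step then re-derives both from that seed, so the student sees exactly the same noisy input the base model saw.

```python
import random
from typing import Callable, List, Optional, Sequence, Tuple

# Hypothetical toy denoiser signature: (noisy latent, timestep) -> prediction.
Model = Callable[[List[float], int], List[float]]
# Each cache entry: (seed, timestep, frozen base-model prediction).
CacheEntry = Tuple[int, int, List[float]]


def noise_and_timestep(seed: int, dim: int, num_timesteps: int) -> Tuple[List[float], int]:
    """Derive both the Gaussian noise and the timestep from a single seed."""
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    timestep = rng.randrange(num_timesteps)
    return noise, timestep


def add_noise(latent: List[float], noise: List[float], t: int, num_timesteps: int) -> List[float]:
    """Toy linear schedule (an assumption, not a real diffusion scheduler)."""
    alpha = 1.0 - t / num_timesteps
    return [alpha * x + (1.0 - alpha) * n for x, n in zip(latent, noise)]


def pregenerate_targets(base: Model, latents: Sequence[List[float]],
                        seeds: Sequence[int], num_timesteps: int) -> List[CacheEntry]:
    """Run the frozen base model once per regularization latent and cache the result."""
    cache = []
    for latent, seed in zip(latents, seeds):
        noise, t = noise_and_timestep(seed, len(latent), num_timesteps)
        cache.append((seed, t, base(add_noise(latent, noise, t, num_timesteps), t)))
    return cache


def prior_loss(student: Model, latents: Sequence[List[float]], cache: Sequence[CacheEntry],
               num_timesteps: int, training_seeds: Optional[Sequence[int]] = None) -> float:
    """Mean squared error between student predictions and cached base predictions.

    By default the cached seed is reused, which reproduces the exact noise and
    timestep the base model saw. Passing training_seeds simulates drawing fresh,
    unsynchronized noise and timesteps during training.
    """
    total, count = 0.0, 0
    for i, (latent, (seed, _, target)) in enumerate(zip(latents, cache)):
        s = seed if training_seeds is None else training_seeds[i]
        noise, t = noise_and_timestep(s, len(latent), num_timesteps)
        pred = student(add_noise(latent, noise, t, num_timesteps), t)
        total += sum((p - q) ** 2 for p, q in zip(pred, target))
        count += len(pred)
    return total / count


def toy_base(x: List[float], t: int) -> List[float]:
    # Stand-in for the frozen base model; any deterministic function works here.
    return [0.5 * v + 0.001 * t for v in x]


latents = [[1.0, -2.0, 0.5], [0.3, 0.3, -1.0]]
cache = pregenerate_targets(toy_base, latents, seeds=[11, 42], num_timesteps=1000)
# A student identical to the base model scores exactly zero when seeds are synced...
print(prior_loss(toy_base, latents, cache, 1000))
# ...but not when noise and timestep come from different seeds.
print(prior_loss(toy_base, latents, cache, 1000, training_seeds=[12, 43]) > 0.0)
```

Deriving both the noise and the timestep from one seed is a design choice of this sketch: a single integer per sample is enough to reproduce the base model's input exactly, so the frozen model never has to be in VRAM during training.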

my training has completed, now i will test

the OneTrainer strategy looks like it failed for me

i will compare quality, let's see if there's any difference

nice hair though

try a simple prompt first. if even "man" looks like you, something went wrong. That always worked for me.

ok testing

studio photo of a man wearing an amazing suit

yes, every man is me

weird

this change didn't have any impact

the code is put in correctly

something went wrong. do you have the output of the training? was the debug print() there?

what debug print?

the print("regmodel loss...

i didn't make such a change

i used your file :d

i see that