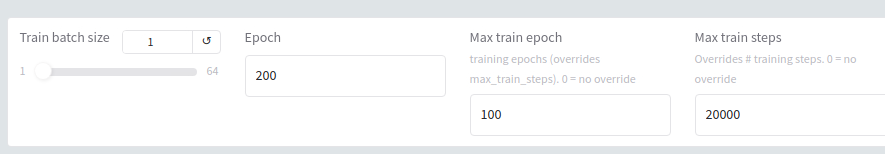

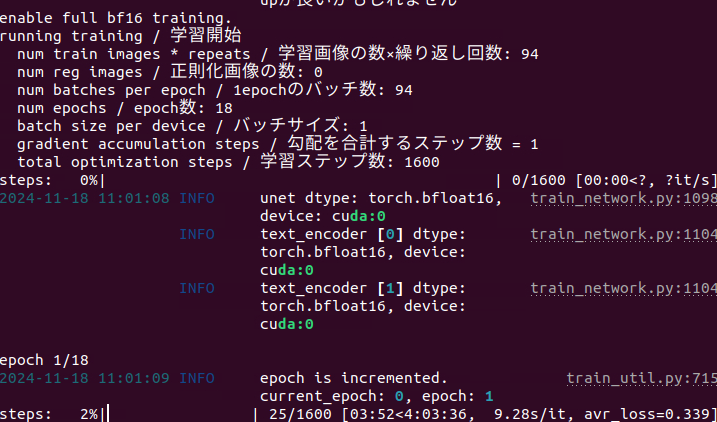

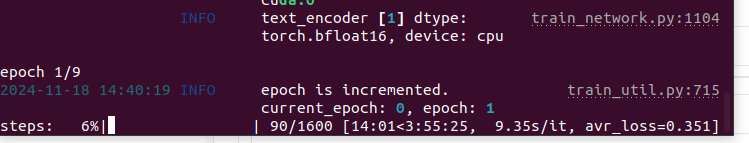

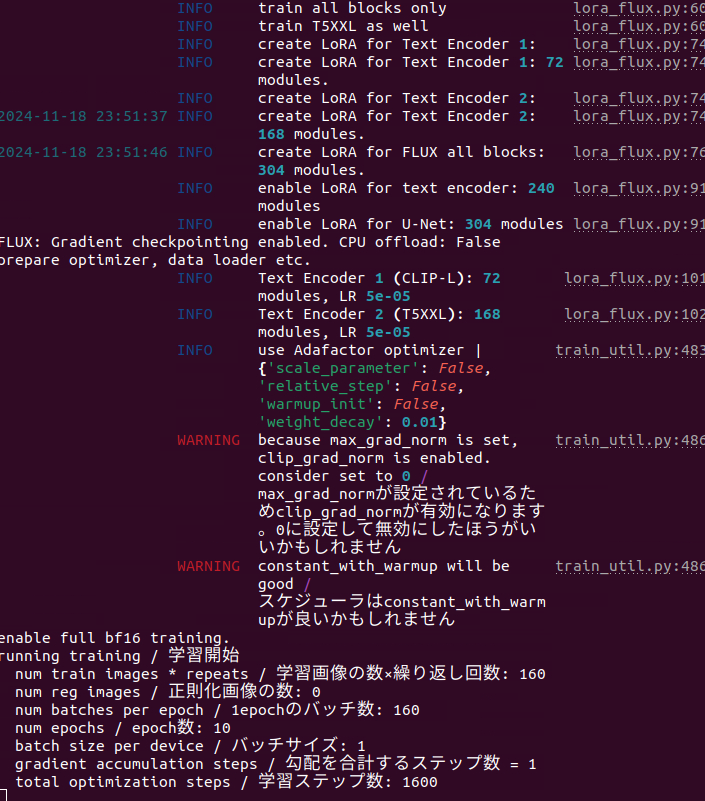

@Furkan Gözükara SECourses doc, what's limiting my training to only 18 epochs and 1600 steps? I'm using the new 48 GB configs in Kohya.

. Had to override the default =0.
