Sorry, what do you mean by latest version? I'm using V44 files from a day ago.

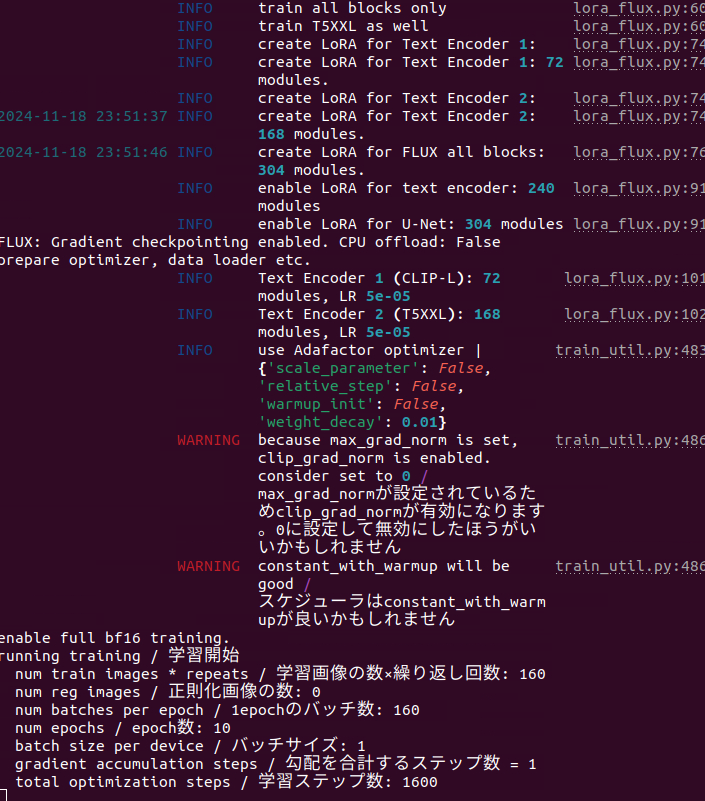

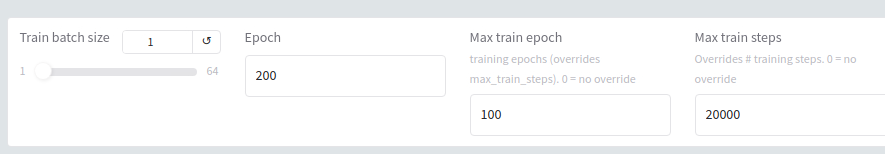

Had to override the default =0.

Got my answer quickly here. Thanks!

Appreciate this community so much, I learn something every day!