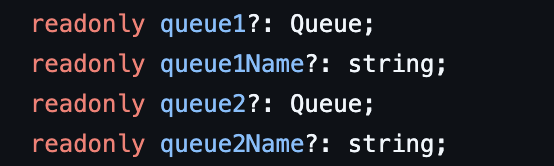

Yep, but I'm looking to do something like `batch.queue === env.FOO_QUEUE.name`

Ideally without the `startsWith` ugliness. The binding only exposes the `send()` methods, though, and the queue name itself only lives in `wrangler.toml`.
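Queue producer bindings expose `send()` and `sendBatch()` but, as far as I know, no name property, so `env.FOO_QUEUE.name` won't give you the queue name. One workaround is to repeat each queue name as a plain `[vars]` entry in `wrangler.toml` and compare `batch.queue` against that. A minimal sketch, assuming hypothetical vars `FOO_QUEUE_NAME` and `BAR_QUEUE_NAME` and placeholder handlers:

```javascript
// Placeholder per-queue handlers (hypothetical); swap in real processing logic.
const handlers = {
  foo: async (batch) => `foo handled ${batch.messages.length}`,
  bar: async (batch) => `bar handled ${batch.messages.length}`,
};

// Pick a handler by comparing batch.queue (the queue name string) against
// names duplicated into env vars. FOO_QUEUE_NAME / BAR_QUEUE_NAME are
// hypothetical [vars] entries, not something Wrangler creates for you.
function routeBatch(batch, env) {
  switch (batch.queue) {
    case env.FOO_QUEUE_NAME:
      return handlers.foo;
    case env.BAR_QUEUE_NAME:
      return handlers.bar;
    default:
      throw new Error(`No handler for queue "${batch.queue}"`);
  }
}

const worker = {
  async queue(batch, env) {
    const handler = routeBatch(batch, env);
    return handler(batch, env);
  },
};
// In the Worker module itself: export default worker;
```

The trade-off is that each name now appears twice in `wrangler.toml` (consumer config and `[vars]`), so the two can drift apart; throwing in the `default` branch makes a mismatch visible instead of silently dropping the batch.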

Both `max_batch_size` and `max_batch_timeout` work together. Whichever limit is reached first will trigger the delivery of a batch.
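To make "whichever limit is reached first" concrete, here is a simplified model, not Cloudflare's actual scheduler. It assumes the timeout clock starts when the first message of a batch arrives and that arrival times are sorted; times are in milliseconds here, whereas `max_batch_timeout` is configured in seconds.

```javascript
// Simplified model (assumption): the batch timer starts at the first arrival.
// arrivalsMs: sorted arrival times in ms; returns when and why the first batch ships.
function simulateFirstBatch(arrivalsMs, maxBatchSize, maxBatchTimeoutMs) {
  if (arrivalsMs.length === 0) return null;
  const deadline = arrivalsMs[0] + maxBatchTimeoutMs;
  let size = 0;
  for (const t of arrivalsMs) {
    if (t > deadline) break; // arrived after the timer fired: goes to the next batch
    size += 1;
    if (size === maxBatchSize) {
      // Size limit hit before the timer: deliver immediately.
      return { size, deliveredAtMs: t, reason: 'max_batch_size' };
    }
  }
  // Timer fired first: deliver whatever has accumulated.
  return { size, deliveredAtMs: deadline, reason: 'max_batch_timeout' };
}
```

For example, four messages 10 ms apart with a size limit of 3 ship at 20 ms because of the size limit, while a slow trickle ships when the timer expires with fewer messages than the limit.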

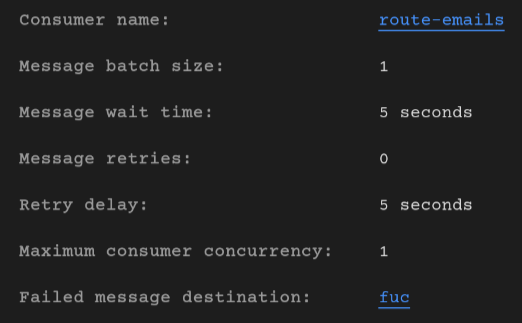

`d1a94a7c4e4a4c23a6dbc74a8abbad11`, `max_concurrency`, `await wait(1000)`
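If the goal is to hit the endpoint one message at a time with a pause in between (which is what the `await wait(1000)` fragment suggests), a sketch could look like the following. `wait` is a hypothetical helper built on `setTimeout`, and `send` is a stand-in for the real `fetch` call. With `max_concurrency` at 1 only one consumer invocation should run at a time, and the loop below spaces out sends within a batch; note that time spent waiting still counts toward the invocation's wall-clock duration.

```javascript
// Hypothetical helper: resolve after `ms` milliseconds.
const wait = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Process a batch's messages sequentially, pausing between sends.
// `send` is a hypothetical async function wrapping the real HTTP call.
async function processSequentially(messages, send, delayMs = 1000) {
  for (let i = 0; i < messages.length; i++) {
    if (i > 0) await wait(delayMs); // pause between sends, not before the first
    const message = messages[i];
    try {
      await send(message.body);
      message.ack();
    } catch (error) {
      console.error(`Failed to process message: ${error}`);
      message.retry(); // ask Queues to redeliver this one message later
    }
  }
}
```

Inside the consumer this would be called as `await processSequentially(batch.messages, send)`, so one failing message is retried on its own instead of failing the whole batch.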

See #welcome-and-rules. It creates confusion for people trying to help you, and it doesn't get your issue or question solved any faster.


I think the issue here is that the queue is not a standard Worker: it has extra limitations that nobody mentions anywhere. That's something to add to the docs, as it would save so much time.


`try/catch`
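On the `try/catch` point: if the handler catches an error and then returns normally, Queues treats the message as delivered, so the failure is lost unless the catch block calls `message.retry()`. A sketch that also spaces out redeliveries with exponential backoff, assuming `message.attempts` (1 on first delivery) and `retry({ delaySeconds })` are available on your Queues setup; the base and cap values and the `send` function are illustrative assumptions:

```javascript
// Exponential backoff: 5s, 10s, 20s, ... capped at 300s (illustrative values).
function backoffSeconds(attempts, baseSeconds = 5, capSeconds = 300) {
  return Math.min(capSeconds, baseSeconds * 2 ** Math.max(0, attempts - 1));
}

// `send` is a hypothetical async function wrapping the real HTTP call.
async function handleMessage(message, send) {
  try {
    await send(message.body);
    message.ack();
  } catch (error) {
    console.error(`Failed to process message: ${error}`);
    // Explicit retry; otherwise a normal return would acknowledge the message.
    message.retry({ delaySeconds: backoffSeconds(message.attempts) });
  }
}
```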

`max_concurrency` = 1, `max_batch_size`

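`startsWith`, `send()`

```js
export default {
  async queue(batch) {
    const ntfyUrl = 'https://ntfy.sh/doofy';

    // Assumes max_batch_size = 1, so each batch holds at most one message.
    const message = batch.messages[0];
    if (!message) return;

    try {
      // Assumes the producer sent a string body.
      const response = await fetch(ntfyUrl, {
        method: 'POST',
        body: message.body,
      });

      if (response.ok) {
        message.ack();
      } else {
        // Non-2xx (e.g. rate limited): ask Queues to redeliver the message.
        message.retry();
      }
    } catch (error) {
      console.error(`Failed to process message: ${error}`);
      // Without this, returning normally acknowledges the message implicitly
      // and the failed notification is silently dropped.
      message.retry();
    }
  },
};
```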
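The handler posts `message.body` straight to ntfy, which only works as intended when the producer sent a string. If an object was passed to `send()`, the consumer receives an object, and `fetch` would stringify it as `[object Object]`. A small hypothetical helper that normalizes the body first:

```javascript
// Hypothetical helper: turn a queue message body into something fetch can send.
function toRequestBody(body) {
  if (typeof body === 'string') return body; // send strings unchanged
  if (body instanceof ArrayBuffer || ArrayBuffer.isView(body)) return body; // raw bytes pass through
  return JSON.stringify(body); // objects, numbers, etc. become JSON text
}
```

Used as `body: toRequestBody(message.body)` in the `fetch` options.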