Hello, I am using the "bge-large-en-v1.5" model hosted on Cloudflare, and the same model running locally, which I got from Hugging Face via SentenceTransformer.

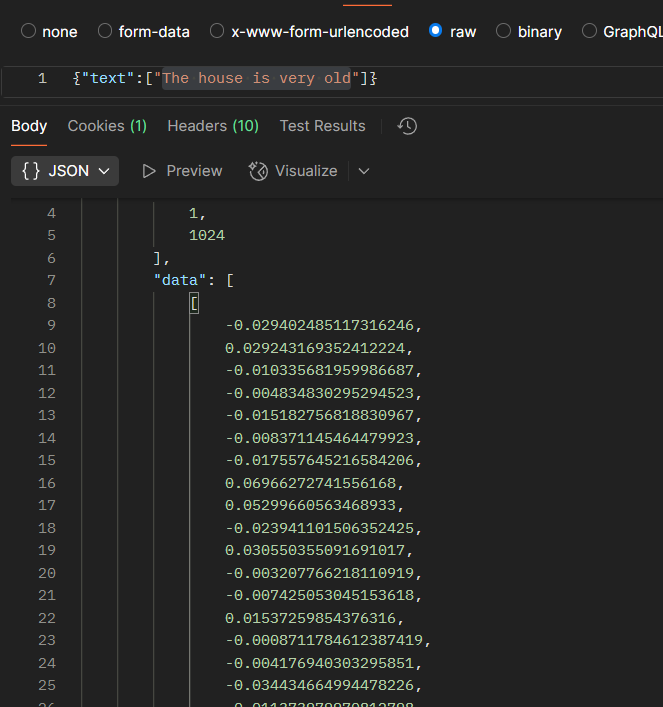

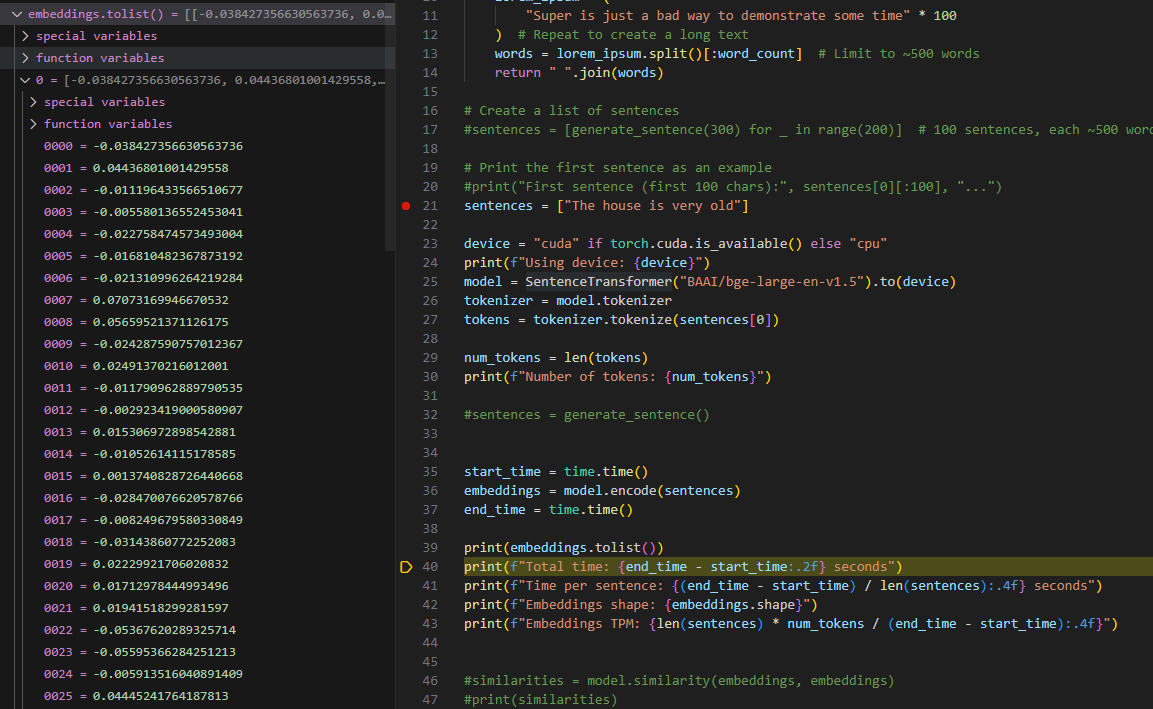

For the same string, I get different vectors from the Cloudflare-hosted model and the local one (screenshots: Cloudflare AI in Postman vs. local with https://huggingface.co/BAAI/bge-large-en-v1.5).

Shouldn't these be exactly the same?
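To make the comparison less dependent on reading screenshots by eye, here is a minimal sketch of how the two vectors could be compared numerically. The vectors are short made-up placeholders, not real output from either model, and the helper names are hypothetical. My assumption is that if the difference is only scaling or floating-point precision, the cosine similarity should be very close to 1, while a genuinely different model version would probably show a larger gap.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def l2_normalize(v):
    """Scale a vector to unit length so that scale differences disappear."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def max_abs_diff(a, b):
    """Largest element-wise absolute difference between two vectors."""
    return max(abs(x - y) for x, y in zip(a, b))


# Placeholder values for illustration only (NOT real embeddings).
# In practice these would be the 1024-dimensional vectors returned by
# the Cloudflare API and by the local SentenceTransformer model.
cloudflare_vec = [0.12, -0.03, 0.45, 0.08]
local_vec = [0.24, -0.06, 0.90, 0.16]  # same direction, different scale

print("cosine similarity:", cosine_similarity(cloudflare_vec, local_vec))
print("max abs diff (raw):", max_abs_diff(cloudflare_vec, local_vec))
print(
    "max abs diff (normalized):",
    max_abs_diff(l2_normalize(cloudflare_vec), l2_normalize(local_vec)),
)
```

In this placeholder case the raw values differ but the normalized vectors match, which is the kind of result that would point to a normalization difference rather than a different model.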

One possible reason is that Cloudflare is using an older or different version of the model than the one on Hugging Face.

Also, what is Cloudflare's strategy for supporting these models? If I use the model to embed my current data set, I need access to the same model for a very long time so that I can embed queries against it too.
