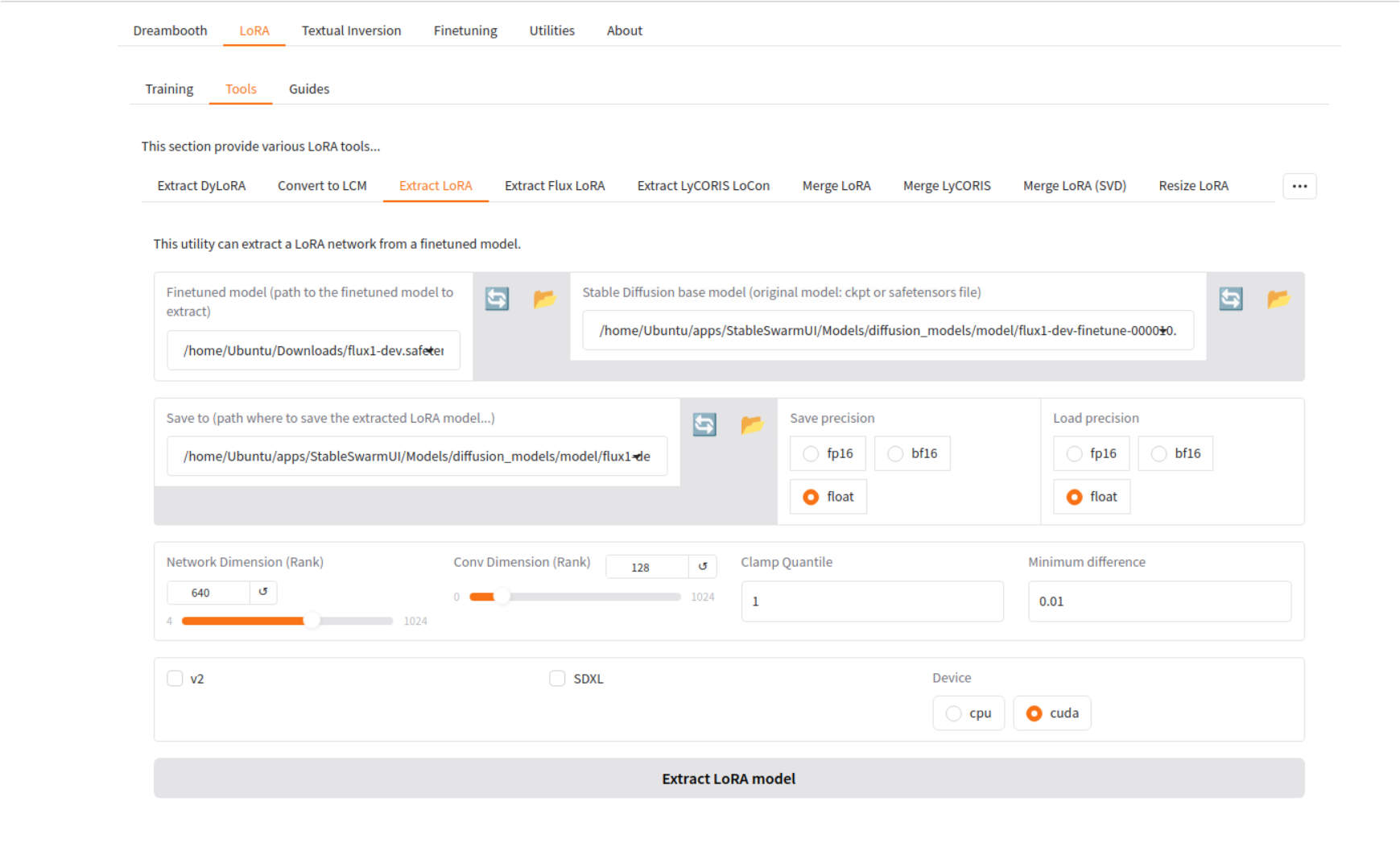

@Dr. Furkan Gözükara I've noticed that SwarmUI downloads the t5xxl_enconly.safetensors file every time you try to use Flux, even if you set the t5xxl model location manually.

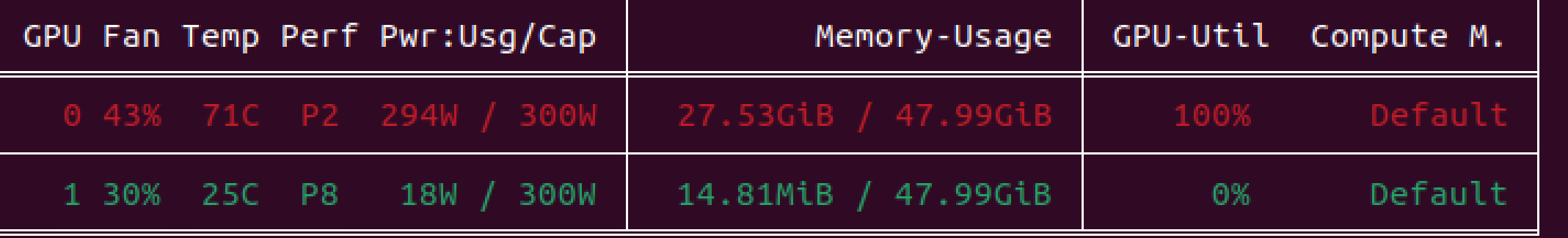

Furthermore, the debug logs mention that the Comfy back-end typecasts the fp8_e4m3fn model to bf16 every time a Flux-type checkpoint loads from scratch, and that typecasting takes significant CPU time.

I looked through the SwarmUI code, and it seems to download the model from this repo: https://huggingface.co/mcmonkey/google_t5-v1_1-xxl_encoderonly/ That model is 4.9 GB, which is consistent with SwarmUI's VRAM usage when running Flux inference.
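For anyone who wants to check which precision a given file is actually stored in, here is a minimal sketch that reads only the safetensors header (an 8-byte little-endian length followed by a JSON table of tensors) and sums the stored bytes per dtype. It uses only the standard library. The function names and the file path in the usage comment are my own placeholders, not anything from SwarmUI or Comfy.

```python
import json
import struct
from collections import Counter


def read_safetensors_header(path):
    """Return the JSON header of a .safetensors file without loading any weights."""
    with open(path, "rb") as f:
        # The first 8 bytes are an unsigned little-endian 64-bit header length.
        (header_len,) = struct.unpack("<Q", f.read(8))
        header = json.loads(f.read(header_len))
    # "__metadata__" is an optional free-form entry, not a tensor.
    header.pop("__metadata__", None)
    return header


def summarize_dtypes(path):
    """Map each stored dtype string (e.g. "F8_E4M3", "BF16") to its total bytes."""
    totals = Counter()
    for info in read_safetensors_header(path).values():
        start, end = info["data_offsets"]
        totals[info["dtype"]] += end - start
    return dict(totals)


# Hypothetical usage; point it at wherever your copy of the file lives:
# print(summarize_dtypes("t5xxl_enconly.safetensors"))
```

If nearly all bytes show up under `F8_E4M3`, the file on disk is fp8, and any bf16 you see in the logs comes from a cast at load time rather than from the file itself.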

My concern is that all this time we have been using the inferior fp8-cast t5xxl model for generations, which according to many reports decreases output quality much more than casting Flux itself to fp8.